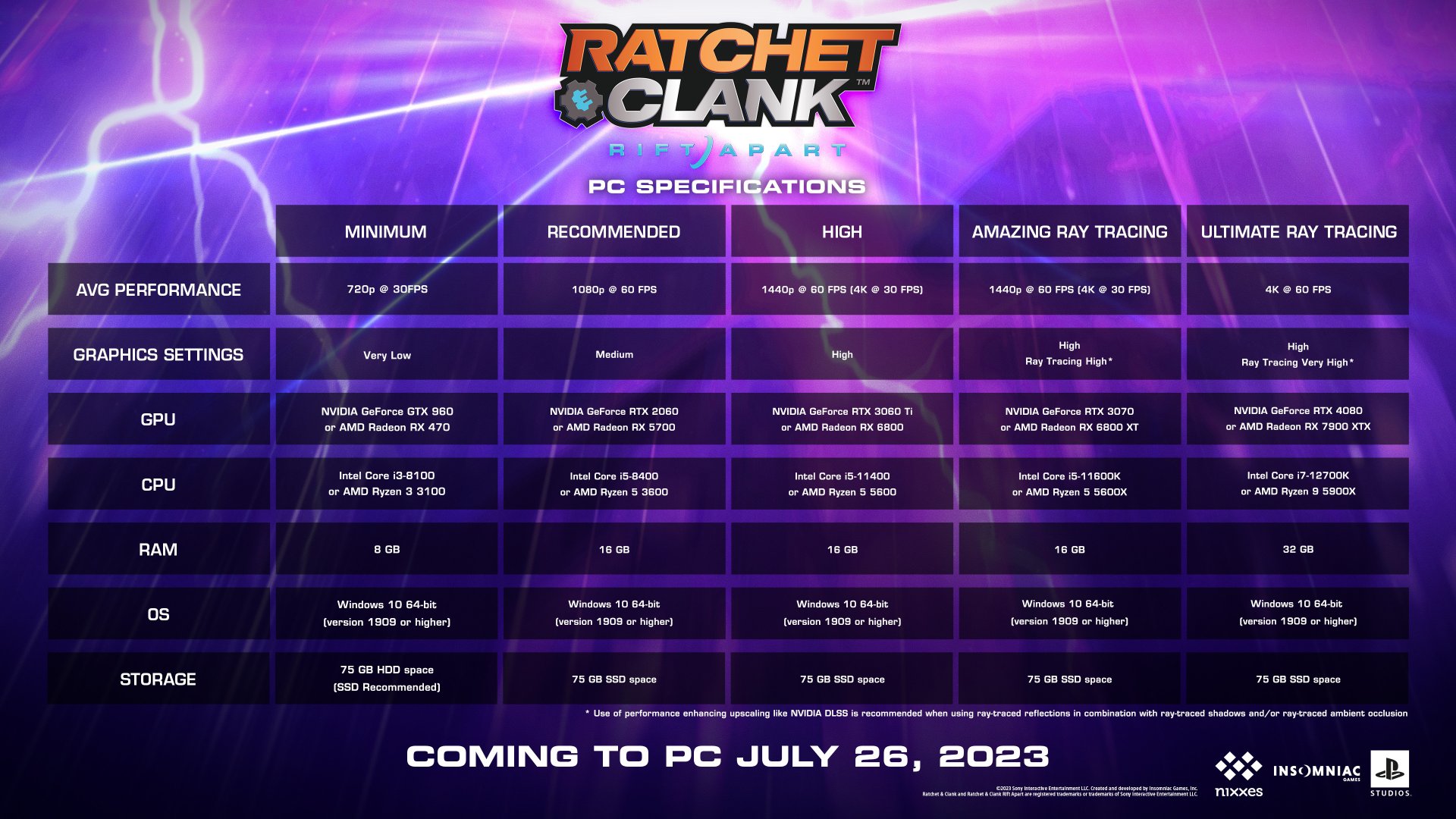

Nixxes Software has just revealed the official PC system requirements for Ratchet & Clank: Rift Apart. These PC specs cover all common resolutions (1080p, 1440p and 4K), as well as the framerate they target and their graphical settings.

These are among the most detailed PC requirements we’ve seen, so kudos to Nixxes for laying them out. And yes, Nixxes has also included the PC specs required for running the game’s Ray Tracing effects.

For 60fps gaming at 1440p/High Settings, Nixxes recommends an NVIDIA GeForce RTX 3070 or an AMD Radeon RX 6800. The developers also recommend using an SSD, and the game will need 16GB of RAM for this preset.

For 60fps gaming at 4K/High Settings/Very High Ray Tracing, Nixxes suggests using an NVIDIA GeForce RTX 4080 or an AMD Radeon RX 7900XTX. Do note that for its RT settings, Nixxes suggests using DLSS 2 or FSR 2.0.

From the looks of it, these PC system requirements for Ratchet & Clank: Rift Apart seem reasonable. It will be interesting to see how the game performs on our platform. And yes, we’ll be sure to also test it on our Intel i9 9900K. My guess is that this CPU will be able to maintain 60fps with DLSS 3. If it does, it will show how transformative DLSS 3 can actually be for older PC systems.

Ratchet & Clank: Rift Apart will support NVIDIA DLSS 3, AMD FSR 2.0 and Intel XeSS at launch. Furthermore, the game will support Ray Tracing in order to enhance its reflections and exterior shadows.

Sony will release this game on PC on July 26th. For those wondering, we haven’t received any PC review code for it. This means that we likely won’t have a day-1 PC Performance Analysis. However, as with most titles, we’ve already purchased the game and we’ll be sharing our initial performance impressions the moment it becomes available.

Enjoy and stay tuned for more!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
