Intel will release its new CPUs based on the Kaby Lake architecture in January, but things are not looking good. Expressview has tested the new Core i7-7700K and compared it with its predecessor, the i7-6700K. While the i7-7700K appears to be slightly faster than the i7-6700K at default settings, that is mostly due to its higher Turbo Boost clock and not to its new architecture.

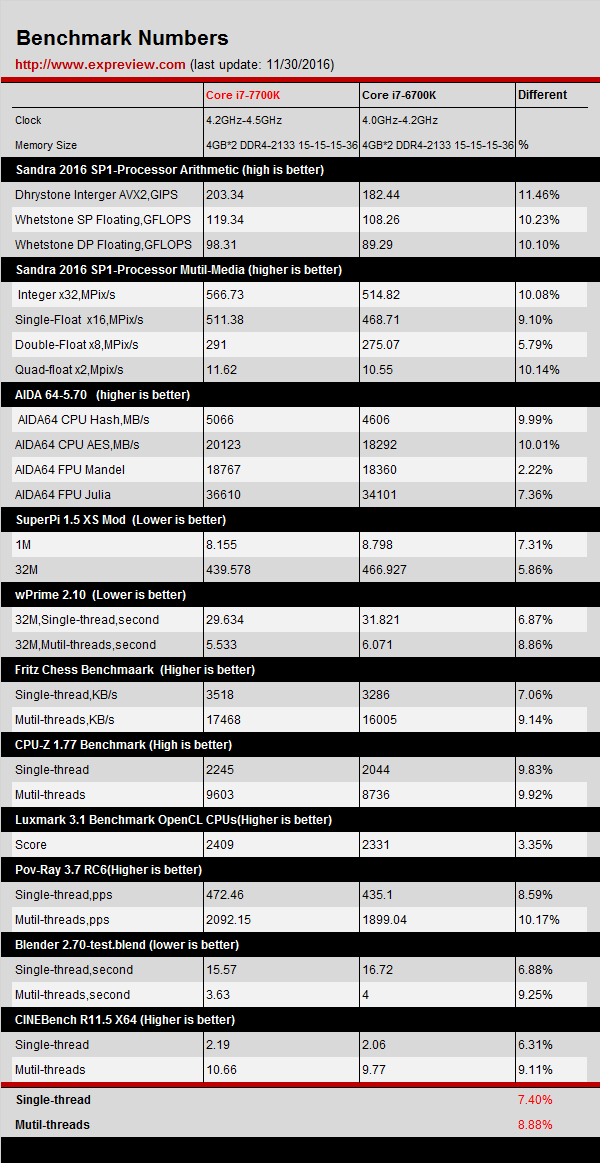

Expressview initially put both the Core i7-7700K and the Core i7-6700K through 11 different single- and multi-threaded CPU tests at default settings. According to the results, the i7-7700K was around 7.40% faster in single-threaded tests and 8.88% faster in multi-threaded tests.

Here is the catch, however: the i7-7700K is clocked around 7% higher than the i7-6700K.
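To see how little of that lead is left once the clock advantage is taken out, here is a rough back-of-the-envelope sketch. It assumes performance scales linearly with clock speed, which is only an approximation, and it takes the "around 7%" figure at face value. The function name `per_clock_gain` is ours and purely illustrative.

```python
def per_clock_gain(speedup_pct: float, clock_gain_pct: float) -> float:
    """Return the percentage gain left after dividing out the clock advantage.

    Assumes performance scales linearly with clock speed (an approximation).
    """
    speedup = 1 + speedup_pct / 100
    clock_ratio = 1 + clock_gain_pct / 100
    return (speedup / clock_ratio - 1) * 100


# "Around 7%" is the approximate clock advantage quoted in the article.
CLOCK_GAIN_PCT = 7.0

single = per_clock_gain(7.40, CLOCK_GAIN_PCT)  # single-threaded lead at default settings
multi = per_clock_gain(8.88, CLOCK_GAIN_PCT)   # multi-threaded lead at default settings

print(f"single-threaded per-clock gain: {single:.2f}%")
print(f"multi-threaded per-clock gain: {multi:.2f}%")
```

Under those assumptions the estimate comes out at roughly 0.4% for single-threaded and 1.8% for multi-threaded work, so most of the default-settings lead tracks the clock difference. This is only an estimate; a test with both chips at the same frequency is the more direct comparison.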

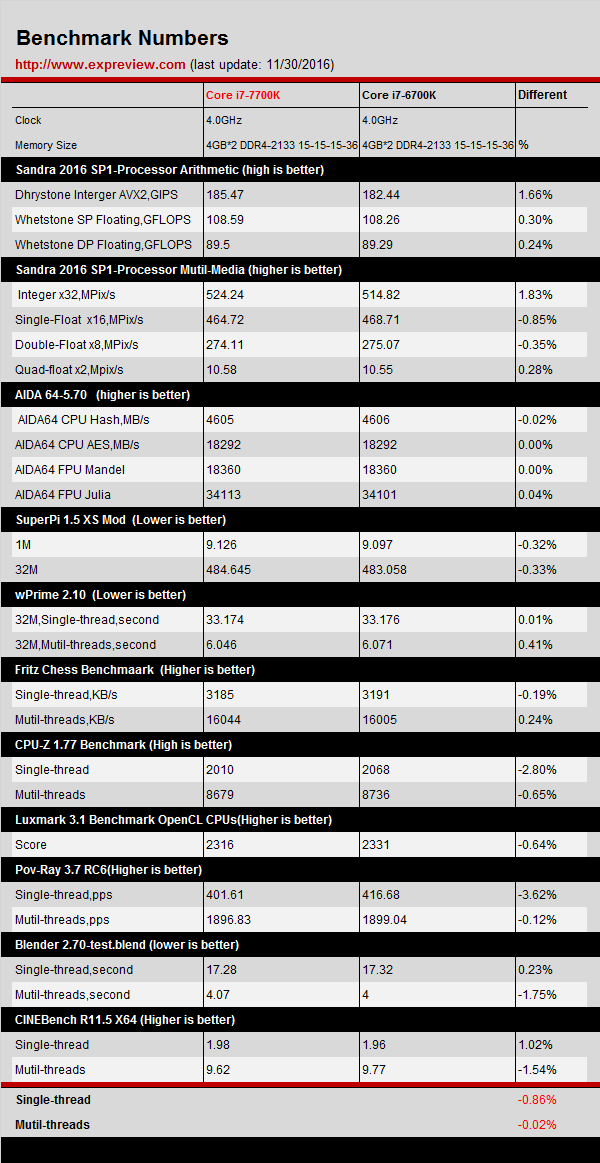

When Expressview clocked both chips at 4.0 GHz, the results were disappointing. According to the tests, the i7-7700K was actually 0.86% slower in single-threaded tests and 0.02% slower in multi-threaded tests.

Needless to say, these results will disappoint everyone who was looking forward to this brand new CPU.

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
