Intel has just shared its first details about ExtraSS, its answer to NVIDIA DLSS 3 Frame Generation and AMD FSR 3.0 FG. According to the blue team, ExtraSS is a novel framework that combines spatial super sampling and frame extrapolation to enhance real-time rendering performance.

The big difference between ExtraSS and DLSS 3/FSR 3.0 is the frame generation technique. DLSS 3 and FSR 3.0 use interpolation, whereas ExtraSS will use extrapolation.

The benefit of extrapolation is that it avoids the extra input latency that interpolation adds. The bad news is that this technique can introduce more artifacts than interpolation.
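To make this trade-off concrete, here is a toy one-dimensional sketch. It is our own illustration, not Intel's ExtraSS algorithm (which, per Intel, uses a new warping method with a lightweight flow model), and not how DLSS 3 or FSR 3.0 work internally. It tracks a single object position per frame. Interpolation blends two frames that have already been rendered, so it needs the next real frame before it can output anything. Extrapolation projects forward from the two most recent frames. The delay function is a deliberately simplified assumption: interpolation holds each real frame back by one render-frame interval, and generation cost and frame pacing are ignored.

```python
# Toy illustration only: the position of one object along a line, one value per rendered frame.
# This is NOT how ExtraSS, DLSS 3 or FSR 3.0 work internally; it only shows the trade-off.


def interpolate(pos_n: float, pos_next: float, t: float = 0.5) -> float:
    """Generate an in-between frame from frame N and frame N+1.

    Requires frame N+1 to be rendered first, so frame N has to be held back.
    """
    return pos_n + (pos_next - pos_n) * t


def extrapolate(pos_prev: float, pos_n: float, t: float = 0.5) -> float:
    """Predict a frame after frame N from frames N-1 and N, assuming constant velocity.

    Needs no future frame, but the guess is wrong if the motion changes.
    """
    velocity = pos_n - pos_prev
    return pos_n + velocity * t


def added_delay_ms(render_fps: float, method: str) -> float:
    """Simplified model (assumption): interpolation waits one render-frame interval for frame N+1."""
    frame_time_ms = 1000.0 / render_fps
    return frame_time_ms if method == "interpolation" else 0.0


if __name__ == "__main__":
    # The object moves 10 units per frame, then stops: frames 0, 1, 2 -> 0, 10, 10.
    positions = [0.0, 10.0, 10.0]
    print("interpolated between frames 1 and 2:", interpolate(positions[1], positions[2]))
    print("extrapolated after frame 1:", extrapolate(positions[0], positions[1]))
    for method in ("interpolation", "extrapolation"):
        print(method, f"{added_delay_ms(60, method):.1f} ms extra at 60 fps")
```

In this toy case, the extrapolated frame places the object at 15 even though it actually stopped at 10, which is the kind of misprediction that shows up on screen as artifacts. The interpolated frame gets it right (10), but only because it waited for frame 2 to be rendered first.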

As Intel explained:

“Frame interpolation and extrapolation are two key methods of Temporal Super Sampling. Usually frame interpolation generates better results but also brings latency when generating the frames. Note that there are some existing methods such as NVIDIA Reflex [NVIDIA2020] decreasing the latency by using a better scheduler for the inputs, but they cannot avoid the latency introduced from the frame interpolation and is orthogonal to the interpolation and extrapolation methods.

The interpolation methods still have larger latency even with those techniques. Frame extrapolation has less latency but has difficulty handling the disoccluded areas because of lacking information from the input frames. Our method proposes a new warping method with a lightweight flow model to extrapolate frames with better qualities to the previous frame generation methods and less latency comparing to interpolation based methods.”

So, in theory, ExtraSS should provide a more responsive gaming experience. Whether Intel can match the image quality of DLSS 3 and FSR 3.0, though, remains to be seen.

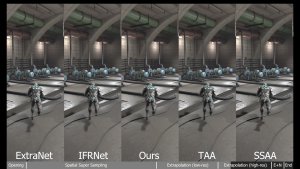

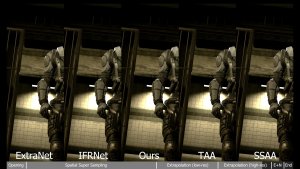

Intel has also shared some comparison screenshots between ExtraSS and other anti-aliasing techniques. These screenshots look promising. However, we’ll have to test ExtraSS ourselves to see whether it can compete with DLSS 3 and FSR 3.0.

Let’s not forget that in its initial showings, AMD FSR 3.0 also seemed great. When it launched, though, it had major issues. Things got better with time, but FSR 3.0 is still inferior to DLSS 3.

Finally, ExtraSS will work on GPUs from all vendors. Unlike DLSS 3, whose Frame Generation is limited to NVIDIA’s RTX 40 series GPUs, ExtraSS won’t be locked to Intel’s own GPUs. So, it will be interesting to see whether developers will prefer ExtraSS over FSR 3.0.

Stay tuned for more!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over from consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, the Voodoo 2. John has also written a higher-degree thesis titled “The Evolution of PC graphics cards.”
