Ubisoft has just released a new patch for Assassin’s Creed Origins, claiming that it improves overall stability and performance. And we are happy to report that Ubisoft has delivered. This latest patch has improved the game’s performance by up to 16% on our Intel hexa-core CPU, and resolved all of our stuttering issues.

That said, the game still stresses our CPU. Ubisoft has claimed that this has nothing to do with its protection system (VMProtect over Denuvo). While this seems a bit fishy, Ubisoft has been able to improve overall performance, so kudos to them.

We re-tested the game on our PC system, which consists of an Intel Core i7 4930K (overclocked to 4.2GHz) with 8GB of RAM and an NVIDIA GTX980Ti. As we can see below, our GTX980Ti was used to its fullest during the built-in benchmark, even in cities.

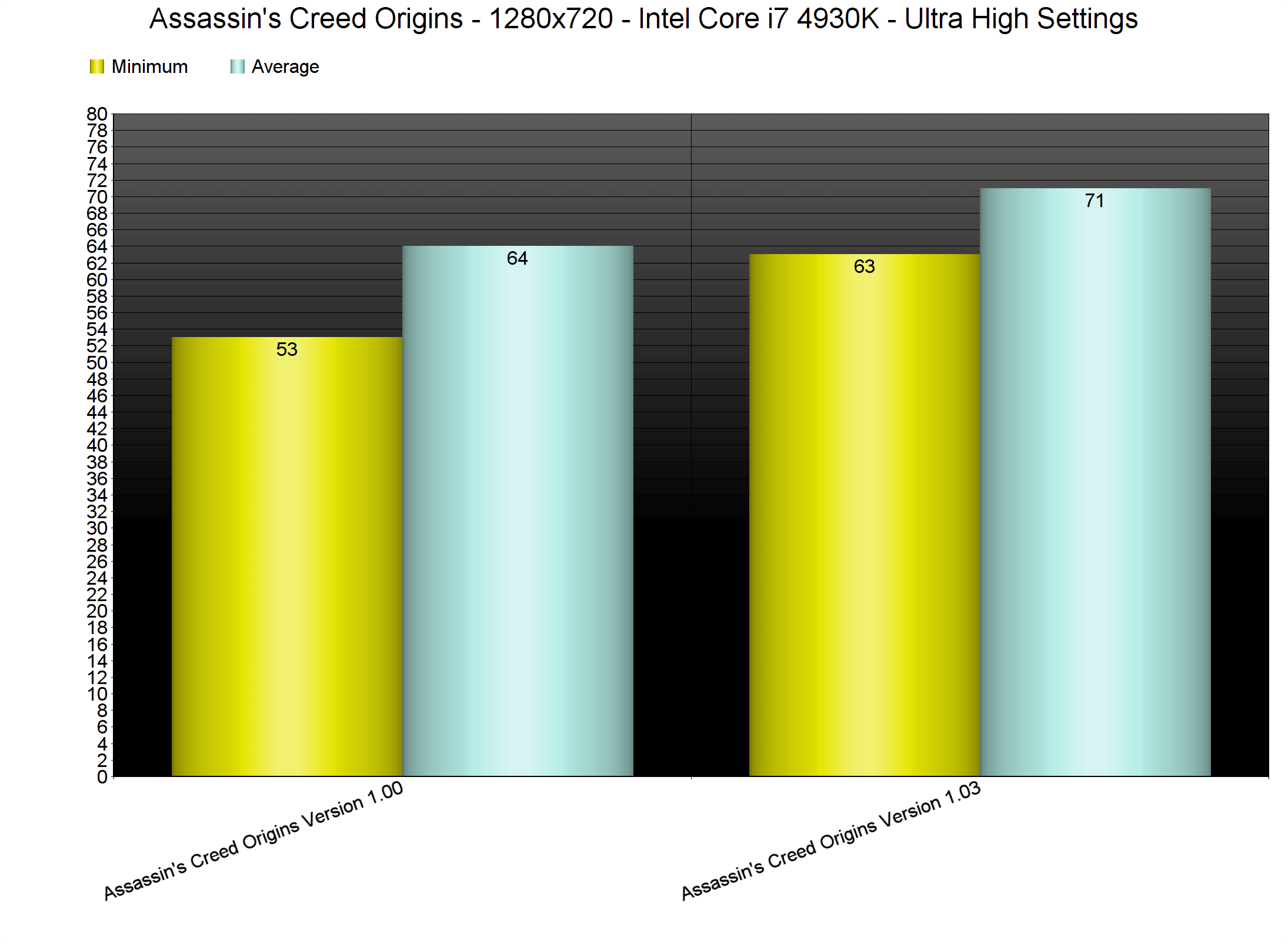

In order to find out whether there are further CPU improvements, we dropped our resolution to 720p. We also set the Resolution Scaler to 50% so we could avoid any GPU bottleneck. Our hexa-core was able to push a minimum of 63fps and an average of 72fps. In terms of minimum framerate, that’s a 16% boost.

At 1080p, the benchmark never dropped below 58fps, making the whole experience better than before. For comparison purposes, our pre-patch minimum framerate at 1080p was 51fps. Furthermore, Ubisoft has addressed the micro-stuttering and stuttering issues that were present in the game. As such, the game now feels smoother than before.
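As a quick sanity check on the 1080p figures, the relative gain can be worked out from the two minimum framerates. This is only a minimal sketch; the helper name `percent_gain` is our own and purely illustrative.

```python
def percent_gain(before_fps: float, after_fps: float) -> float:
    """Relative improvement of after_fps over before_fps, in percent."""
    if before_fps <= 0:
        raise ValueError("before_fps must be positive")
    return (after_fps - before_fps) / before_fps * 100.0


# 1080p minimum framerates from our runs: 51fps pre-patch, 58fps post-patch.
gain_1080p = percent_gain(51, 58)
print(f"1080p minimum framerate gain: {gain_1080p:.1f}%")  # prints 13.7%
```

In other words, the 1080p minimum framerate went up by roughly 14%.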

We should note that the time of day (TOD) for the benchmark has been changed. This results in a different lighting situation, so we don’t know whether this TOD is less demanding than the previous one (perhaps there are fewer shadows in some scenes). Still, the CPU improvements are independent of this change, so there is definitely a performance boost here.

In conclusion, Ubisoft has managed to improve overall performance, and has resolved the game’s stuttering issues. While the game still needs a powerful CPU, it does run better than its launch version, so kudos to them for at least improving things!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
