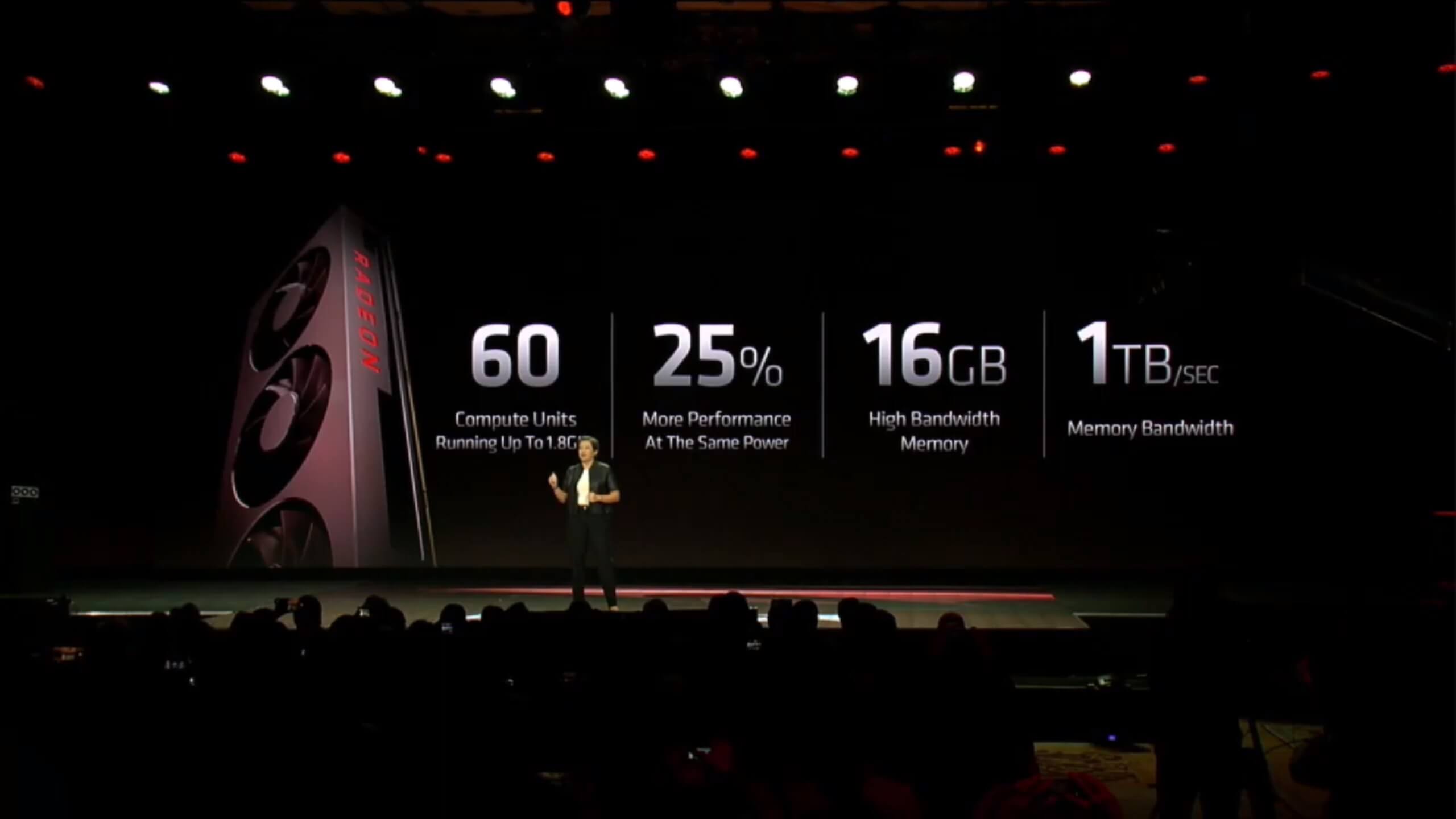

AMD has officially announced its next-generation high-performance gaming graphics card, the AMD Radeon VII. The AMD Radeon VII will target high-end gamers, will be the first 7nm gaming graphics card, and will come with 16GB of VRAM.

The AMD Radeon VII comes with 60 compute units running at up to 1.8GHz, will provide 25% more performance at the same power, and will offer 1TB/s of memory bandwidth.

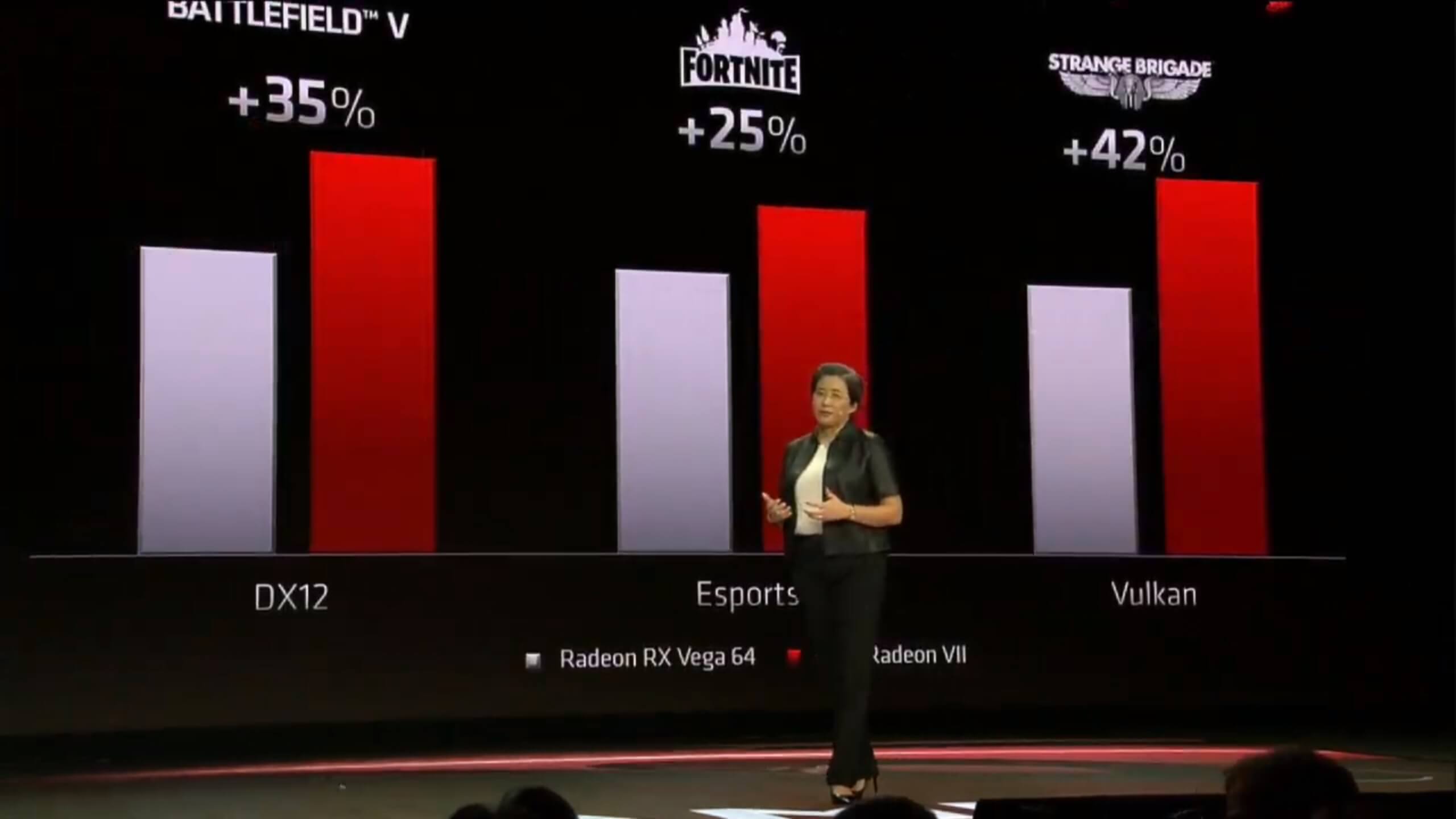

AMD has also revealed some initial benchmarks in which the AMD Radeon VII is 35% faster than the Radeon RX Vega 64 in Battlefield 5, 25% faster in Fortnite, and 42% faster in Strange Brigade.

Naturally, AMD Radeon VII will support FreeSync 2, Async Compute, Rapid Packed Math and Shader Intrinsics.

The model that AMD showcased at CES 2019 was a dual-slot card with three fans. It lacked a DVI output and appeared to feature DisplayPort and HDMI outputs.

The red team did not initially reveal when the GPU would be available or how much it would cost. It has since announced that Radeon VII will be available on February 7th and will be priced at $699.

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved (and still does) the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over, mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, the Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC Graphics Cards.”
