According to a blog post by Adit Bhutani, Product Marketing Specialist for Radeon and Gaming at AMD, current 4GB VRAM cards are no longer quite sufficient for gaming.

He emphasizes the importance of VRAM. As we already know, most AAA PC games these days, if not all, eat up a lot of VRAM to hold their textures, and some games push the limits of the hardware as well. Even though 4GB should suffice for some games, having extra VRAM never hurts.

AMD is now stressing that the future lies with “beyond 4GB” GPUs, and recommends going for a GPU with either 6GB or 8GB of VRAM, like the current RX 570, RX 580, RX 590, and RX 5000 series. This makes sense given the high VRAM requirements of today’s PC games, though it is not a “mandatory” criterion for playing games.

But as we approach the release of the next-gen consoles, more VRAM might slowly become a necessity. Both the “PS5” and the “Xbox Series X” might feature 16GB of combined memory, which would make 4GB VRAM cards look insufficient by comparison.

AMD is pushing the “Game Beyond 4GB” motto. The company tested select RX 5500 XT models with 4GB and 8GB of VRAM to check how much of a performance difference the GPU’s frame buffer, aka VRAM, can make at 1080p. According to its report, the 8GB model delivers a performance improvement of up to 19% on average across the games tested.

As the blog reads:

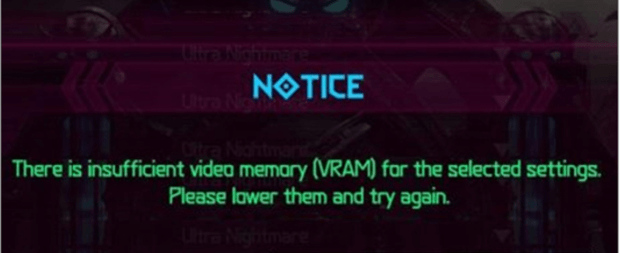

“Recent releases have shown marked performance increases when switching from a Radeon™ 5500 XT 4GB to a Radeon™ 5500 XT 8GB. In DOOM Eternal, the 8GB card runs the game at Ultra Nightmare settings at 75FPS (1080p), while the 4GB card can’t apply the graphics settings with that level of VRAM. Looking at titles such as Borderlands 3, Call of Duty Modern Warfare, Forza Horizon 4, Ghost Recon Breakpoint, and Wolfenstein 2: The New Colossus, there is a performance improvement on average of up to 19% across these games when using the same card and increasing the amount of VRAM from 4GB to 8GB.”

GPUs with little VRAM might also suffer more stutter during gaming, along with more texture pop-in.

“When gaming with insufficient levels of Graphics Memory, even at 1080p, gamers might expect several issues:

- Error Messages and Warning Limits

- Lower Framerates

- Gameplay Stutter and Texture Pop-in Issues”

AMD has offered 8GB GPUs for the “mainstream” market since 2016, starting with the 8GB RX 470 and RX 480 models. But as the launch of the next-gen consoles approaches, VRAM requirements are going to rise as more and more game developers target the consoles’ memory design.

Today, 3GB and 4GB GPUs struggle with most AAA PC games. Even though 4GB is the minimum “baseline” amount of VRAM recommended these days, running out of VRAM can cause the issues listed above.

According to the blog post, “AMD is leading the industry at providing gamers with high VRAM graphics solutions across the entire product offering. Competitive products at a similar entry level price-point are offering up to a maximum of 4GB of VRAM, which is evidently not enough for today’s games. Go Beyond 4GB of Video Memory to Crank Up your settings. Play on Radeon™ RX Series GPUs with 6GB or 8GB of VRAM and enjoy gaming at Max settings.”

Thanks, OC3D.

Hello, my name is Nick Richardson. I’ve been an avid PC and tech fan since the good old days of the RIVA TNT2 and 3dfx Interactive “Voodoo” gaming cards. I mostly love playing first-person shooters, and I’ve been a die-hard fan of the FPS genre since the good old Doom and Wolfenstein days.

Music has always been my passion and my roots, but I started gaming “casually” when I was young on Nvidia’s GeForce3 series of cards. I’m by no means a hardcore gamer, but I love anything related to PCs, games, and technology in general. I’ve been involved with many indie metal bands worldwide, and have helped them promote their albums to record labels. I’m a very broad-minded, down-to-earth guy. Music is my inner expression and soul.
