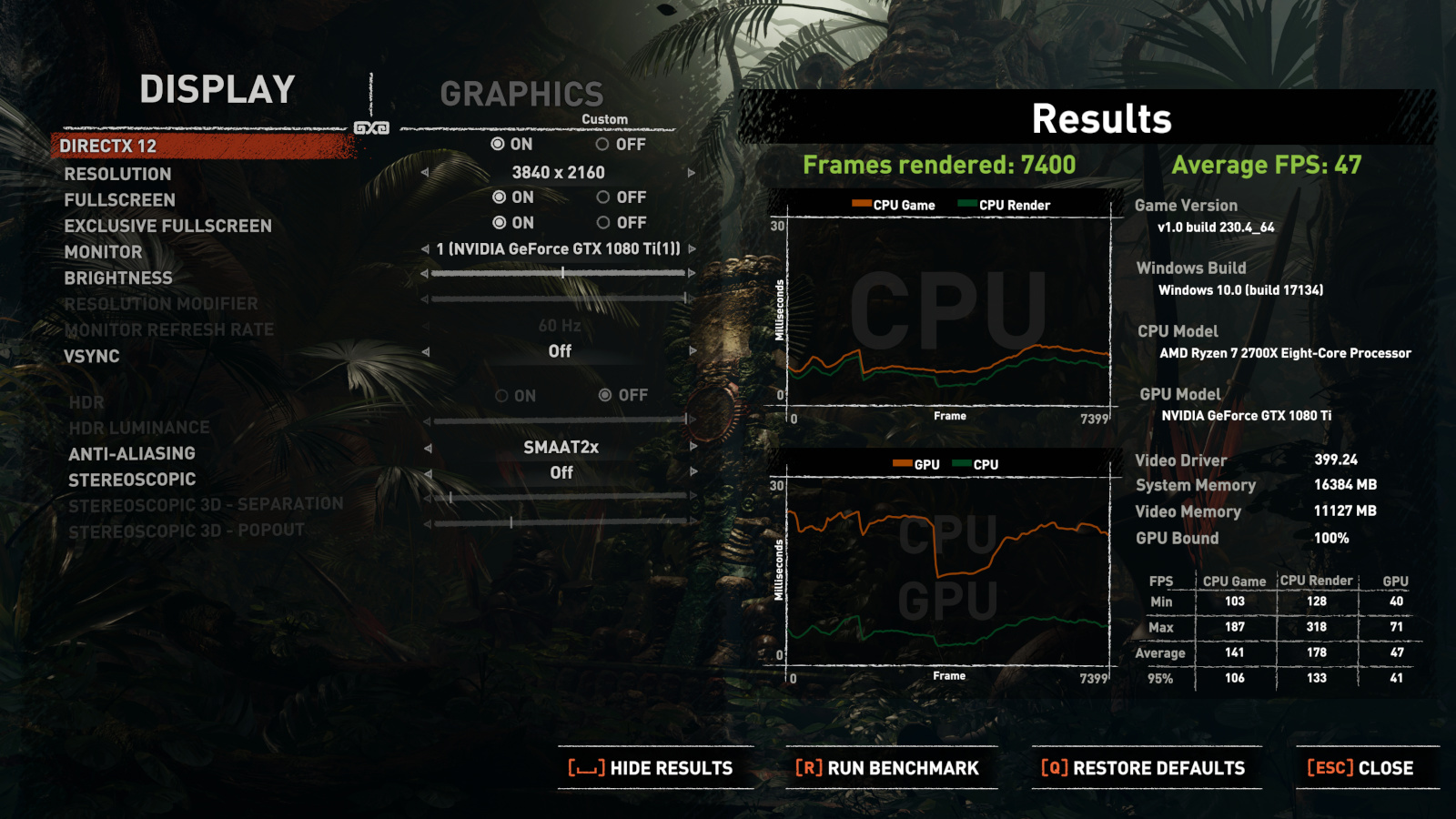

Shadow of the Tomb Raider releases in a few days and from the looks of it, it will be a demanding title in 4K. Insomnia’s Spartan117 has shared an image from the built-in benchmark showing the NVIDIA GeForce GTX 1080Ti running the game at an average of 47fps and a minimum of 40fps in 4K on Ultra settings.

NVIDIA has stated that its upcoming graphics card, the NVIDIA GeForce RTX 2080Ti, is around 25-35% faster than the GeForce GTX 1080Ti. Assuming that uplift carries over directly to this GPU-bound title, the NVIDIA RTX 2080Ti should run the game with a minimum of 50fps and an average of 59fps in the worst case scenario (25%), and with a minimum of 54fps and an average of 63fps in the best case scenario (35%).

However, we also know that Shadow of the Tomb Raider will support Deep Learning Super Sampling. DLSS is a new technique that applies deep learning and AI to rendering, resulting in crisp, smooth edges on rendered objects in games. According to NVIDIA’s own graph, the RTX graphics cards are around 75% faster than their previous generation counterparts when DLSS is enabled. This means that the NVIDIA GeForce RTX 2080Ti should, theoretically, offer a minimum of 70fps and an average of 82fps.
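For readers who want to check the math, here is a minimal sketch of how these estimates are derived. It assumes framerate scales linearly with NVIDIA's claimed uplift, which only holds if the game stays fully GPU-bound; the function and variable names are ours, for illustration only.

```python
# Baseline figures from the built-in benchmark (GTX 1080Ti, 4K, Ultra).
BASELINE_MIN_FPS = 40
BASELINE_AVG_FPS = 47


def project_fps(baseline_fps, uplift_percent):
    """Scale a baseline framerate linearly by a percentage uplift.

    Assumption: the title is fully GPU-bound, so the claimed uplift
    translates one-to-one into extra frames per second.
    """
    return baseline_fps * (100 + uplift_percent) / 100


# Uplift figures quoted from NVIDIA's claims, in percent.
scenarios = {
    "worst case (+25%)": 25,
    "best case (+35%)": 35,
    "with DLSS (+75%)": 75,
}

for label, uplift in scenarios.items():
    min_fps = round(project_fps(BASELINE_MIN_FPS, uplift))
    avg_fps = round(project_fps(BASELINE_AVG_FPS, uplift))
    print(f"{label}: min {min_fps}fps, avg {avg_fps}fps")
```

The results are rounded to the nearest whole frame. These are projections, not measurements.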

We won’t be certain about these figures until we test Shadow of the Tomb Raider ourselves with an NVIDIA GeForce RTX 2080Ti. For what it’s worth, we’ve already purchased one and we’re patiently waiting for it (though it will most likely not arrive on launch day).

Still, if DLSS works as intended, this should give a smooth gaming experience in 4K. While in theory the NVIDIA GeForce RTX 2080Ti will be able to run Shadow of the Tomb Raider with an average of 60fps in native 4K, we are almost certain that a lot of RTX 2080Ti owners will prefer using DLSS in order to maintain higher framerates.
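To put the 60fps target in perspective, the rough sketch below works out how much faster than the GTX 1080Ti a card would need to be to reach it. It uses the same benchmark figures and the same linear-scaling assumption as the estimates above; the helper name is hypothetical.

```python
TARGET_FPS = 60

# GTX 1080Ti benchmark figures (4K, Ultra).
BASELINE_MIN_FPS = 40
BASELINE_AVG_FPS = 47


def required_uplift_percent(baseline_fps, target_fps):
    """Return the percentage uplift needed to go from baseline to target.

    Assumption: performance scales linearly with the uplift.
    """
    return (target_fps / baseline_fps - 1) * 100


avg_needed = required_uplift_percent(BASELINE_AVG_FPS, TARGET_FPS)
min_needed = required_uplift_percent(BASELINE_MIN_FPS, TARGET_FPS)

print(f"Uplift needed for a 60fps average: {avg_needed:.1f}%")
print(f"Uplift needed for a 60fps minimum: {min_needed:.1f}%")
```

A 60fps average needs an uplift of roughly 28%, which sits inside NVIDIA's claimed 25-35% range. A 60fps minimum needs 50%, which is beyond that range but within the 75% claimed with DLSS enabled.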

On the other hand, as we’ve already stated, this new graphics card currently has a lot of trouble with real-time ray tracing. At Gamescom 2018, Shadow of the Tomb Raider ran at 40fps on the RTX 2080Ti for most of the time. EIDOS Montreal and Nixxes claimed that they will further optimize the ray tracing effects, so hopefully the final product will run better.

Stay tuned for more!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved, and still does, the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
