One of the biggest features of the RTX 50 series GPUs is the inclusion of Multi-Frame Gen in DLSS 4. And, since this is a topic that interests a lot of gamers, we’ve decided to have a separate article for it. So, it’s time to see what Multi-Frame Gen does, and whether it’s worth enabling.

DLSS 4 Multi-Frame Gen generates up to three additional frames per traditionally rendered frame, working in unison with the complete suite of DLSS technologies to multiply frame rates by up to 8X over traditional brute-force rendering. In short, it’s Frame Gen on steroids.
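To put that multiplier in perspective, here is a minimal sketch of the arithmetic in Python. The function names are hypothetical and the model is idealized: it assumes frame generation adds no overhead to the rendered frame rate, which real games will not quite match. Frame generation alone tops out at 4X (one rendered frame plus three generated ones); the rest of the "up to 8X" figure comes from the other DLSS technologies working alongside it.

```python
def displayed_fps(rendered_fps: float, generated_per_rendered: int) -> float:
    """Ideal displayed frame rate for a given rendered frame rate.

    generated_per_rendered is the number of AI-generated frames inserted
    after each traditionally rendered frame (1 for X2, 2 for X3, 3 for X4).
    Assumption: frame generation costs nothing, so this is an upper bound.
    """
    if not 0 <= generated_per_rendered <= 3:
        raise ValueError("Multi-Frame Gen generates at most three extra frames")
    return rendered_fps * (1 + generated_per_rendered)


def rendered_fps_from_displayed(shown_fps: float, generated_per_rendered: int) -> float:
    """Inverse: how many frames per second are actually rendered by the game."""
    return shown_fps / (1 + generated_per_rendered)


if __name__ == "__main__":
    # Illustrative numbers only, not benchmark results.
    print(displayed_fps(60, 3))                # X4 on a 60FPS base
    print(rendered_fps_from_displayed(200, 3)) # rendered rate behind 200FPS at X4
```

The inverse function is the useful one when reading benchmark charts: the rendered rate is roughly what control responsiveness follows, since the generated frames do not come from the game itself.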

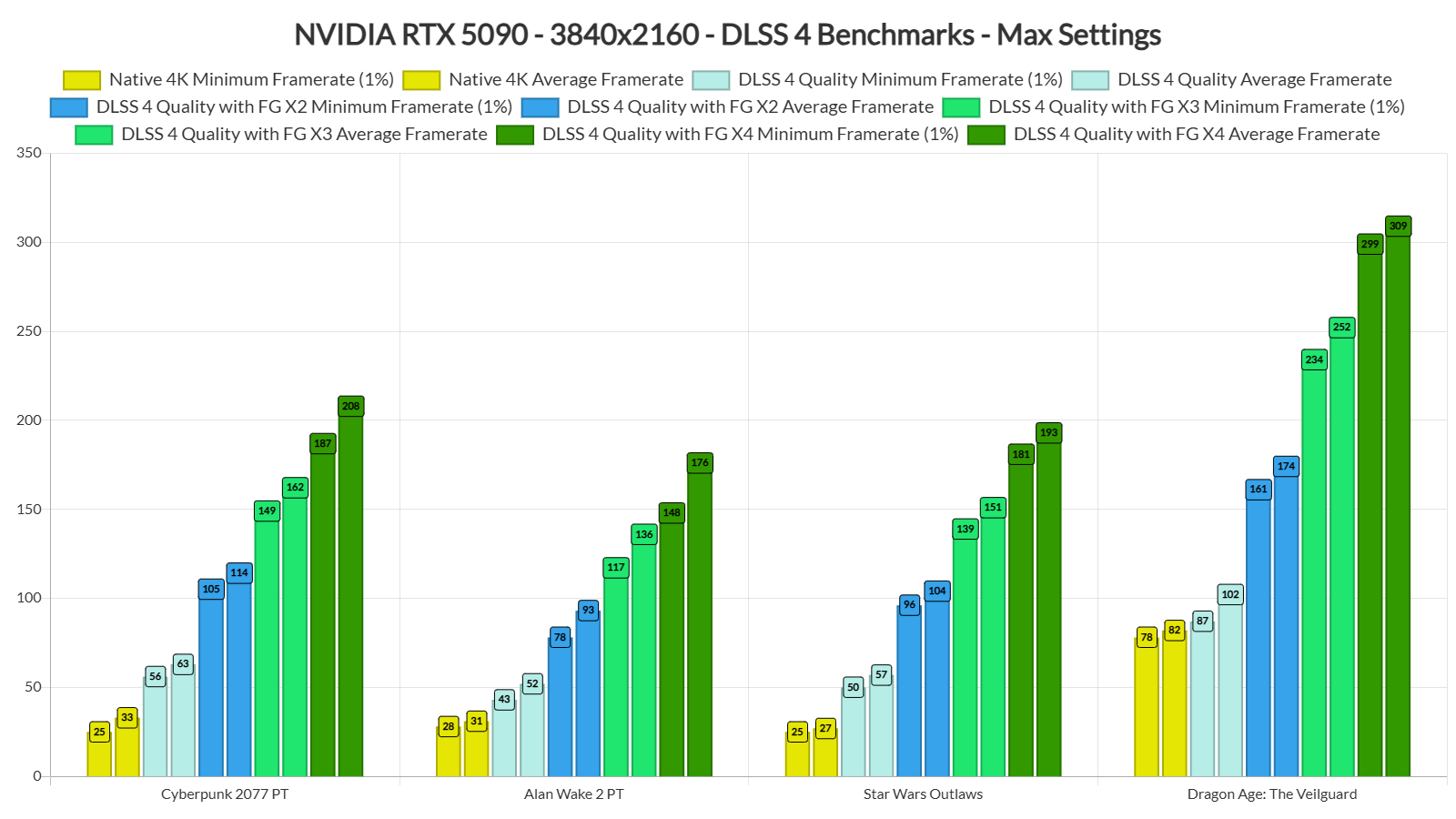

NVIDIA only made a select number of games available for testing DLSS 4 Multi-Frame Gen. On January 30th, the company will launch a new version of the NVIDIA App which will add support for 75 titles. So, we’ll have more DLSS 4 benchmarks when the app comes out. For now, let’s look at Cyberpunk 2077, Alan Wake 2, Dragon Age: The Veilguard and Star Wars Outlaws.

For our benchmarks, we used an AMD Ryzen 9 7950X3D with 32GB of DDR5 at 6000MHz, and the NVIDIA GeForce RTX 5090. We also used Windows 10 64-bit, and the GeForce 571.86 driver. Moreover, we’ve disabled the second CCD on our 7950X3D. Plus, we’re using the ASUS ROG SWIFT PG32UCDM monitor, which is a 32” 4K/240Hz/HDR monitor.

Let’s start with Cyberpunk 2077. With DLSS 4 Quality Mode, we were able to get a minimum of 56FPS and an average of 63FPS at 4K with Path Tracing. Then, by enabling DLSS 4 Multi-Frame Gen X3 and X4, we were able to get as high as 200FPS. Now what’s cool here is that I did not experience any latency issues. The game felt responsive and it looked reaaaaaaaaaaaaaally smooth.

And that’s pretty much my experience with all the other titles. Since the base framerate (before enabling Frame Generation) was between 40-60FPS, all of the games felt responsive. And that’s without NVIDIA Reflex 2 (which will most likely reduce latency even more).

I cannot stress enough how smooth these games looked on my 4K/240Hz PC monitor. And yes, that’s the way I’ll be playing them. DLSS 4 MFG is really amazing, and I can’t wait to try it with Black Myth: Wukong and Indiana Jones and the Great Circle. With DLSS 4 X4, these games will feel smooth as butter.

And I know what some of you might say. “Meh, I don’t care, I have Lossless Scaling which can do the same thing”. Well, you know what? I’ve tried Lossless Scaling and it’s NOWHERE CLOSE to the visual stability, performance, control responsiveness, and frame delivery of DLSS 4. If you’ve been impressed by Lossless Scaling, you’ll be blown away by DLSS 4. Plain and simple.

Now while DLSS 4 is mighty impressive, it still has some issues. For instance, in CP2077, you can notice some black textures in trees during the benchmark sequence. Other than that, I could not spot any major visual artifacts. Star Wars Outlaws and Dragon Age: The Veilguard also seemed artifact-free. However, in Alan Wake 2, there were some visual artifacts with the DLSS 4 Multi-Frame Gen X3 and X4. In the following areas, when turning the camera with quick moves, the flashlight causes artifacts. There are also artifacts around Saga when turning around. I’ve already informed NVIDIA about these issues so hopefully they’ll address them via future drivers.

Speaking of DLSS 4, the Transformer Model is better than the previous CNN Model. All of the aforementioned games looked better than before. So, kudos to NVIDIA for delivering better image quality. Oh, and the Transformer Model will become available to all previous RTX GPUs.

Before closing, I should note that all the performance overlay tools (with the exception of CapFrameX) do not report the correct frametimes when using DLSS 4 Frame Gen with the Transformer Model. Right now, most of the tools measure MsBetweenPresents for the frametime graph. However, for the RTX 50 series GPUs, you’ll have to measure MsBetweenDisplayChange. So, that weird frametime you see in the screenshots? It has nothing to do with the actual frametime you’re getting. So, don’t pay attention to it.
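If you log your own runs, the difference between the two metrics is easy to check. The following is a rough Python sketch, assuming a PresentMon-style CSV that has both a present-to-present column (`MsBetweenPresents`) and a `MsBetweenDisplayChange` column; your capture tool may name its columns differently. The helper name `frametime_stats` is hypothetical, and the sample rows are made-up numbers picked to mimic this kind of mismatch, not measurements from our test system.

```python
import csv
import io
import statistics

# Synthetic example rows (NOT real benchmark data): presents arrive in a
# burst, while the frames actually reach the display at an even pace.
SAMPLE_CSV = """Application,MsBetweenPresents,MsBetweenDisplayChange
game.exe,16.0,4.0
game.exe,0.5,4.2
game.exe,0.5,3.8
game.exe,0.5,4.0
"""


def frametime_stats(csv_text: str, column: str) -> dict:
    """Summarize one frametime column (in milliseconds) from a CSV log."""
    reader = csv.DictReader(io.StringIO(csv_text))
    values = [float(row[column]) for row in reader if row.get(column)]
    # Drop non-positive entries so frames without a valid interval
    # do not skew the average.
    values = [v for v in values if v > 0]
    if not values:
        raise ValueError(f"no usable samples in column {column!r}")
    avg_ms = statistics.fmean(values)
    return {"avg_ms": avg_ms, "avg_fps": 1000.0 / avg_ms, "max_ms": max(values)}


if __name__ == "__main__":
    for name in ("MsBetweenPresents", "MsBetweenDisplayChange"):
        print(name, frametime_stats(SAMPLE_CSV, name))
```

With data shaped like this, the present-based column shows a large spike followed by near-zero intervals, while the display-based column stays flat. That is the kind of "weird" graph to ignore in favor of the display-based numbers.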

Overall, DLSS 4 Multi-Frame Gen is one of the best new features of the Blackwell RTX 50 series GPUs. By using it, you will finally be able to enjoy path-traced games at super high framerates. And you know what? I’d take 200FPS with the response times of 50-60FPS any day over simply gaming at 50-60FPS. And good luck getting a similar experience with “TV interpolation” or Lossless Scaling. DLSS 4 is on an entire next-gen level. So go ahead and cope all you want, make all the excuses or memes you can. Personally, after actually getting my hands on it and gaming with DLSS 4 X4, I’ll be using it in pretty much all the games that support it!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
