Nixxes and FYQD-Studio have released new patches for Marvel’s Spider-Man Remastered and Bright Memory Infinite that add support for DLSS 3. As such, we’ve decided to benchmark and test DLSS 3 against DLSS 2 and native 4K.

For our benchmarks, we used an Intel i9 9900K with 16GB of DDR4 at 3800MHz and NVIDIA’s RTX 4090 Founders Edition. We also used Windows 10 64-bit and the GeForce 522.25 driver. As always, we used Quality Mode for both DLSS 2 and DLSS 3.

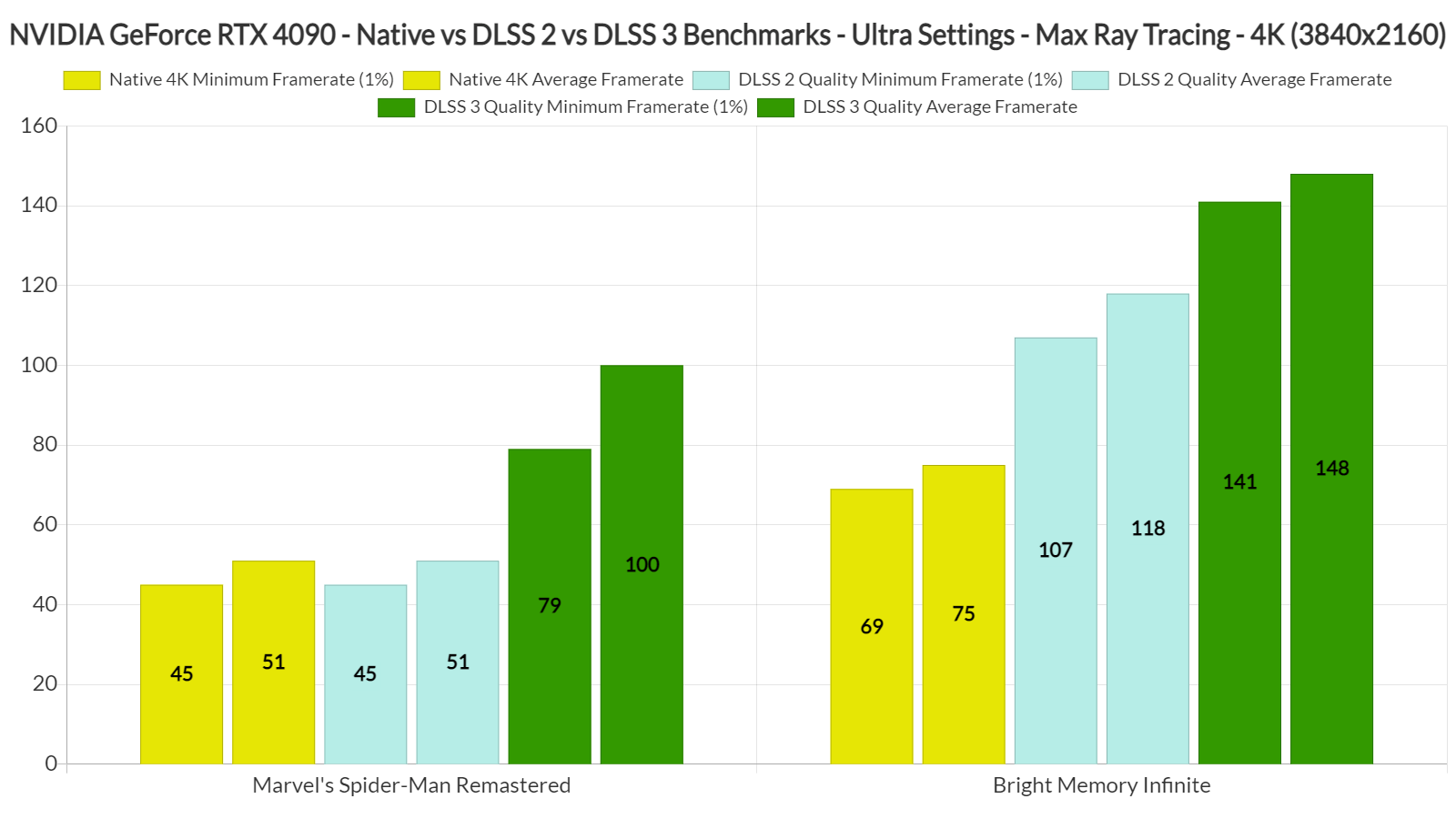

Let’s start with Marvel’s Spider-Man Remastered. This game is very CPU-heavy when you max it out, even at 4K. On Ultra settings and with maximum Ray Tracing, we were getting a minimum of 45fps and an average of 51fps at native 4K. The game was simply unplayable (regarding both input latency and smoothness). DLSS 2 Quality did not bring any performance improvements, which is what you would expect when the CPU, and not the GPU, is the limiting factor.

By enabling DLSS 3 Quality, we were able to get a minimum of 79fps and an average of 100fps. This made the game enjoyable on our PC, as it was buttery smooth. The game was also really responsive while playing, and felt much better than what we were getting at 50fps. This is another case of a CPU-bound game in which DLSS 3 does wonders. As for visual artifacts, we did not spot anything game-breaking. If you pause and examine individual frames, you may find some artifacts. While playing, though, we could not spot any.
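To put those Spider-Man numbers into perspective, here is a minimal Python sketch that turns the framerates quoted above into a percentage uplift and an average time per displayed frame. The helper names (`frame_time_ms`, `uplift_percent`) are our own and purely illustrative, and the inputs are only the four figures from this article. Keep in mind that this describes displayed frames only; it says nothing about input latency, which we did not measure.

```python
def frame_time_ms(fps: float) -> float:
    """Average time per displayed frame, in milliseconds."""
    return 1000.0 / fps


def uplift_percent(before_fps: float, after_fps: float) -> float:
    """Relative framerate gain of after_fps over before_fps, in percent."""
    return (after_fps - before_fps) / before_fps * 100.0


# Figures quoted in this article for Marvel's Spider-Man Remastered at 4K
# (Ultra settings, maximum Ray Tracing).
native_4k = {"min": 45, "avg": 51}
dlss3_quality = {"min": 79, "avg": 100}

for key in ("min", "avg"):
    before, after = native_4k[key], dlss3_quality[key]
    print(
        f"{key}: {before}fps -> {after}fps "
        f"(+{uplift_percent(before, after):.1f}%, "
        f"{frame_time_ms(before):.1f}ms -> {frame_time_ms(after):.1f}ms per frame)"
    )
```

In other words, the average framerate roughly doubles, while the minimum improves by about three quarters.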

Bright Memory Infinite, on the other hand, was a mixed bag. While DLSS 3 Quality improves overall performance, it introduces extra latency that you can immediately feel. It’s not game-breaking (as I’ve demonstrated in the following video, I was able to headshot my enemies and parry enemy attacks). However, since DLSS 2 Quality already offers framerates higher than 100fps, I suggest using that over DLSS 3 Quality. By using DLSS 2 Quality, you’ll have the best of both worlds (a smooth gaming experience with really low latency).

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
