And the time has come to say goodbye to our beloved Intel Core i9 9900K. DSOGaming has upgraded its main PC gaming system to an AMD Ryzen 9 7950X3D. As such, we've decided to benchmark it in the ten most CPU-heavy recent PC games.

Before continuing, we should list our full PC specs:

- Corsair 7000X iCUE RGB TG Full Tower

- Corsair PSU HX Series HX1200 1200W

- Gigabyte Aorus Master X670E

- AMD Ryzen 9 7950X3D

- G.Skill Trident Z5 Neo RGB 32GB DDR5-6000 CL30

- Corsair CPU Cooler H170i iCUE Elite

- SanDisk Ultra 3D SSD 2TB

- Samsung 980 Pro SSD 1TB M.2 NVMe PCI Express 4.0

- Samsung 970 Evo Plus SSD 1TB M.2 NVMe PCI Express 3.0

Here are also some images of our PC system.

Instead of using Windows 11, we've decided to stick with Windows 10 64-bit. From what we could tell, there are, at least for now, no major performance differences between these two operating systems.

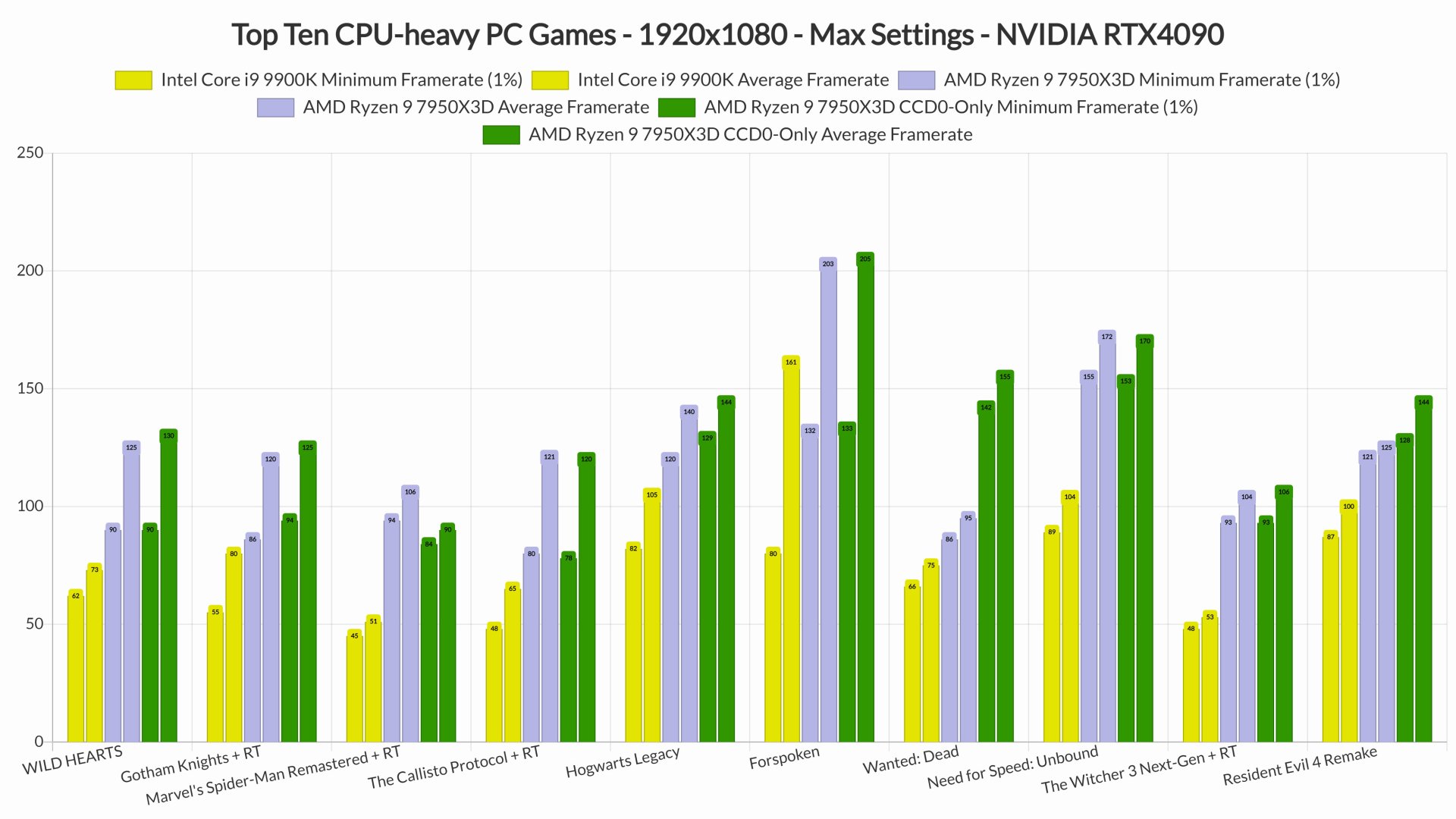

For the following benchmarks, we used an NVIDIA GeForce RTX 4090 with the latest GeForce WHQL drivers. Since we're talking about CPU benchmarks, we ran all games at 1080p. Furthermore, we disabled SMT, as we don't think it makes sense to enable it for gaming. PC games usually use 4-6 CPU threads, and the AMD Ryzen 9 7950X3D has two CCDs with 8 CPU cores each. With SMT enabled, each core's resources are shared between two threads, which can noticeably reduce the performance of each thread. Take, for example, Resident Evil 4 Remake. As we can clearly see, SMT brings a noticeable performance hit in that game.

The AMD Ryzen 9 7950X3D is a beast and had no trouble running all of the following CPU-heavy games. As we've already reported, some of these games rely heavily on one or two CPU threads. However, even with ray tracing enabled in games like Gotham Knights, The Witcher 3 Next-Gen or The Callisto Protocol, the AMD Ryzen 9 7950X3D can provide smooth framerates. And that's without enabling NVIDIA's DLSS 3.

What's also interesting is how these games behave on AMD's CPU. For our benchmarks, we used AMD Ryzen Master to enable and disable the second CCD. And although we've heard reports of the 7950X3D underperforming in Hogwarts Legacy when both CCDs are enabled, we did not see any major performance differences. Not only that, but Marvel's Spider-Man Remastered actually ran faster with both CCDs enabled. After all, Marvel's Spider-Man Remastered is one of the few games that can take advantage of more than 8 CPU threads.

In our tests, only two games performed worse when both CCDs were active. These two games were Wanted: Dead and Resident Evil 4 Remake. For whatever reason, both games were running on the second CCD (the one without the 3D V-Cache). By disabling the second CCD, we forced them to run on the CCD with the 3D V-Cache, which resulted in a significant performance increase.

For all our future benchmarks, we'll be testing both of the 7950X3D's CCDs. Since our CPU has 16 cores, though, we won't be testing SMT/Hyper-Threading!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved, and still does love, the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over from consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, the Voodoo 2. John has also written a higher degree thesis on "The Evolution of PC graphics cards."
