Rise of the Tomb Raider is a highly anticipated game among PC gamers. Originally released for Xbox One, it found its way to the PC after a few months. The PC version officially releases tomorrow, and since NVIDIA has released its Game-Ready drivers for it, it’s time to see how the game performs on the PC platform.

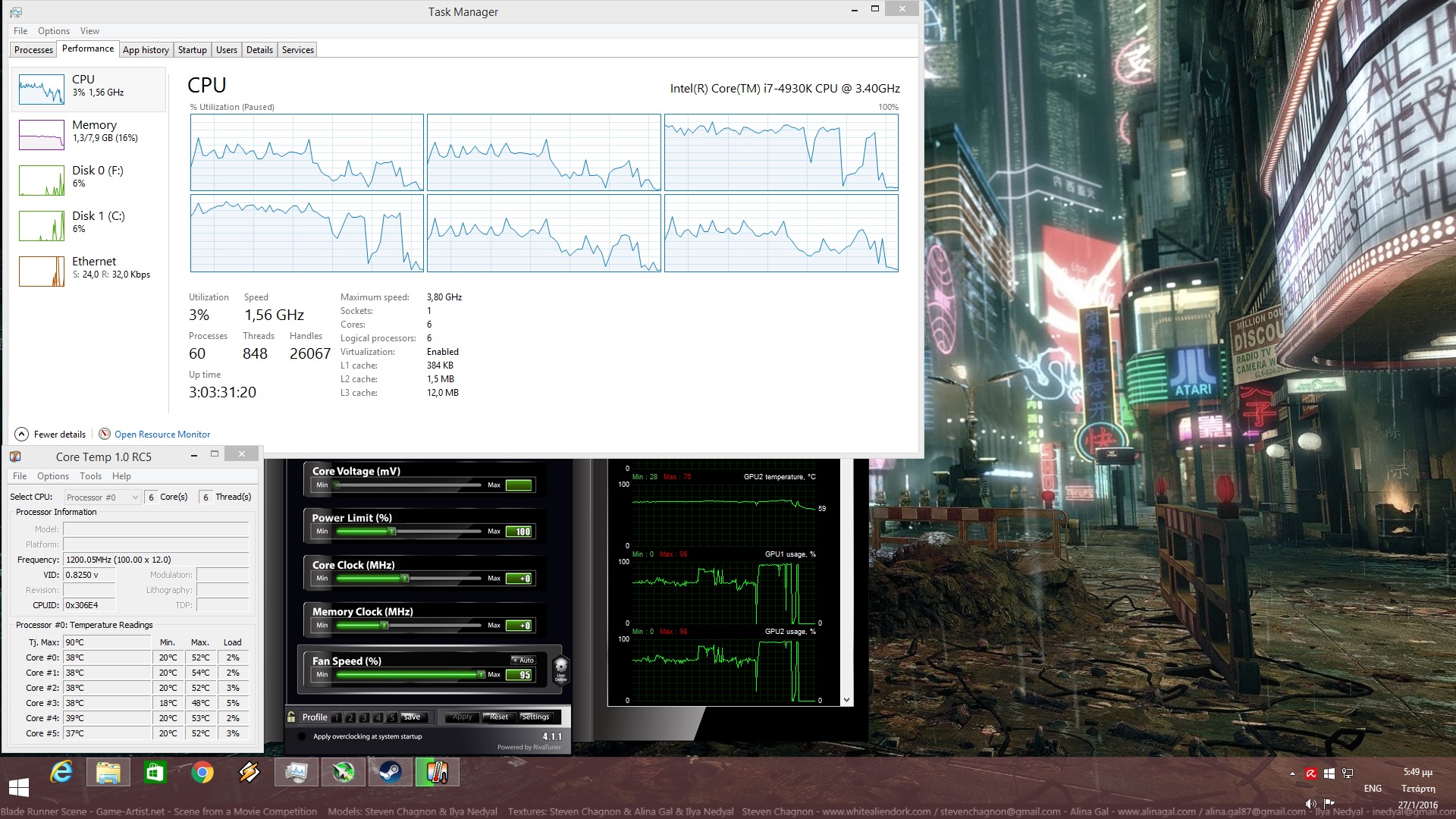

As always, we used an Intel i7 4930K (turbo boosted at 4.0GHz) with 8GB RAM, NVIDIA’s GTX690, Windows 8.1 64-bit and the latest WHQL version of the GeForce drivers. And while NVIDIA has introduced an SLI profile for Rise of the Tomb Raider, the SLI scaling is all over the place. This makes the game unpleasant on pretty much all SLI systems. Not only that, but there are annoying flickering issues during the snowstorm in the prologue level and the water flooding at the end of the second level.

Take for example the following screenshots. A simple change of the camera can result in awful SLI usage. As you can see, on the left we have amazing SLI scaling. As soon as we slightly change the camera viewpoint, SLI scaling drops to around 60%. This is a big disappointment, especially since both NVIDIA and Square Enix claimed that the game would support multi-GPU setups from the get-go. Here is hoping that NVIDIA will fix the game’s SLI flickering issues and its disappointing SLI scaling in a future driver.

Since SLI is seriously messed up, we ran the game in single-GPU mode and unfortunately the results were mixed. At 1080p with Very High settings, Rise of the Tomb Raider ran with a minimum of 32fps on an NVIDIA GTX680 (in single-GPU mode, our NVIDIA GTX690 behaves like an NVIDIA GTX680). This basically means that the game runs better, and with greater visuals, on the GTX680 than on Xbox One. However, we were unable to hit a constant 60fps on the GTX680 at 1080p, even when using the Lowest available options. On High settings the game ran with a minimum of 35fps, on Medium settings we got a minimum of 39fps, and on the Lowest settings we got a minimum of 47fps. This means that those with weaker GPUs should kiss 60fps gaming goodbye, unless of course they are willing to lower their resolution.
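To put those minimums in perspective, here is a minimal Python sketch that converts each figure into a frame time and shows how far it falls short of 60fps. The fps values are the ones we measured above; the helper names (`frame_time_ms`, `shortfall`, `summarize`) are made up for this illustration and are not part of any benchmarking tool.

```python
# Minimum frame rates reported above for the GTX680 at 1080p.
MIN_FPS = {"Very High": 32, "High": 35, "Medium": 39, "Lowest": 47}
TARGET_FPS = 60  # the target discussed in the article


def frame_time_ms(fps: float) -> float:
    """Time available for a single frame at the given frame rate, in ms."""
    return 1000.0 / fps


def shortfall(min_fps: float, target_fps: float = TARGET_FPS) -> float:
    """Fraction by which min_fps misses target_fps (0.0 if the target is met)."""
    return max(0.0, 1.0 - min_fps / target_fps)


def summarize(results=MIN_FPS, target_fps=TARGET_FPS):
    """Return (preset, min fps, frame time in ms, percent below target) rows."""
    rows = []
    for preset, fps in results.items():
        rows.append((
            preset,
            fps,
            round(frame_time_ms(fps), 1),
            round(shortfall(fps, target_fps) * 100),
        ))
    return rows


if __name__ == "__main__":
    for preset, fps, ms, miss in summarize():
        print(f"{preset:>9}: {fps}fps minimum, {ms}ms per frame, "
              f"{miss}% below {TARGET_FPS}fps")
```

A 60fps target leaves roughly 16.7ms per frame, so even the Lowest preset, at a 47fps minimum, is still about a fifth short of that budget.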

Regarding its CPU requirements, Rise of the Tomb Raider does not require a high-end CPU to shine. In order to find out whether an old CPU was able to offer an ideal gaming experience, we simulated a dual-core CPU with and without Hyper-Threading. For this particular test, we lowered our resolution to 800×600 (in order to avoid any possible GPU limitation). With Hyper-Threading disabled, our simulated dual-core system was able to run the game at 60fps but with noticeable stuttering; with Hyper-Threading enabled, it ran at a constant 60fps without stuttering.

What also surprised us with Rise of the Tomb Raider was its RAM usage. The game uses up to 4.5GB of RAM when Very High textures are enabled. As a result, PC systems with 8GB of RAM may experience various stutters (especially if there are programs running in the background). By lowering the Textures to High, our RAM usage dropped to 2.5GB.

At this point, we should also note that the game’s Very High textures require GPUs with at least 3GB of VRAM. With them enabled, we noticed minor stutters. By dropping their quality to High, we were able to enjoy the game without any stutters at all. This means that owners of GPUs that are equipped with 2GB of VRAM should avoid the game’s Very High textures.

What is crystal clear so far is that Rise of the Tomb Raider is a GPU-bound title. Crystal Dynamics and Nixxes have included a respectable amount of graphics options to tweak. Furthermore, some special options that have been included (like the ability to feature different subtitles for different characters) are a welcome addition.

Graphics-wise, Rise of the Tomb Raider is a really beautiful game. Most of the game’s textures are of high quality (higher quality than those found on Xbox One), and NVIDIA’s HBAO+ solution does wonders for the game’s scenes. The environmental effects are amazing, and AMD’s TressFX tech (named PureHair in Rise of the Tomb Raider) is awesome (though not flawless, as you will notice various clipping issues). The lip syncing of most characters – and especially Lara’s – is among the best we’ve seen, and all the 3D models (be it animals or characters) are highly detailed. The game also sports scripted environmental destruction, as well as proper foliage physics and tessellation on surfaces in order to make them more detailed than ever.

All in all, Rise of the Tomb Raider performs very well on the PC, provided you are equipped with a high-end single GPU. Rise of the Tomb Raider is a GPU-bound title, and can run without performance problems on a modern-day dual-core Intel CPU. The game needs a modern-day GPU in order to be enjoyed; however, it can run at more than 30fps on older GPUs like the NVIDIA GTX680. By also lowering their in-game resolution, owners of weaker systems can hit a constant 60fps at the cost of the overall image quality. We are also happy to report that we did not notice any mouse acceleration or smoothing issues, and that Crystal Dynamics and Nixxes have included a lot of options to tweak. The only downsides here are that the game’s SLI support is currently messed up, something that will disappoint a lot of NVIDIA owners, and that its Very High textures will introduce performance issues on systems with less than 8GB of RAM.

Enjoy!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
