It’s been a couple of days since the launch of Watch_Dogs, and today we are looking at how this new open-world title performs on low- to mid-range systems. We’ve covered Watch_Dogs extensively over the past months, so there is no reason to debate (or prove) whether it has been downgraded. Yes, Watch_Dogs has been downgraded and, as you may have heard over the past few days, it also suffers from various performance issues.

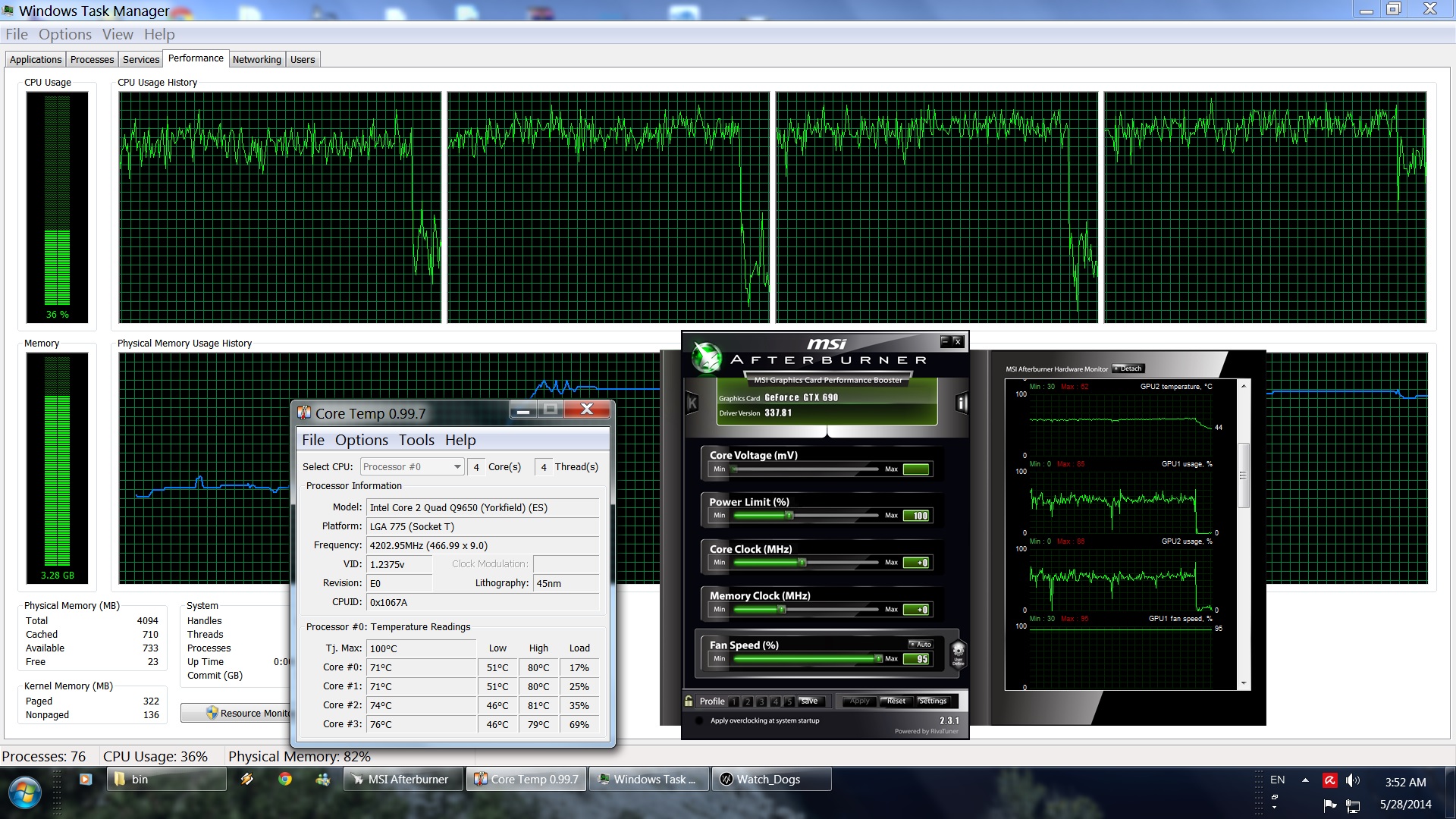

For this article, we used an overclocked Q9650 (4.2GHz) with 4GB of RAM, an Nvidia GTX 690, Windows 7 64-bit and the latest version of the GeForce ForceWare drivers. Nvidia has already included an SLI profile for this game; however, we were CPU limited and could not determine whether SLI scaling was ideal. One way to find out whether SLI is behaving as it should would be to raise the game’s graphics settings further, but in that case we were bottlenecked by our VRAM instead. Each GPU of our GTX 690 has 2GB of VRAM, and since SLI mirrors data across both GPUs, the usable amount is 2GB rather than 4GB. As a result, we experienced major stuttering the moment we enabled AA or Ultra settings for Shadows and Reflections. Hell, even enabling HBAO+ High resulted in a stuttering mess.

Our low/mid-end system was able to run Watch_Dogs at 30-50fps at 1080p with High settings. While driving, our framerate took a minor hit and dropped to 26-28fps. Not only that, but even at the lowest settings we were unable to hold a constant 60fps. Since lowering the graphics settings mainly reduces GPU load, this points to our CPU being the bottleneck here. Also, and despite Ubisoft’s claims, Watch_Dogs ran perfectly fine with our 4GB of RAM. By PC standards, our Q9650 is simply not powerful enough for Watch_Dogs. By console standards, the game runs as well as it does on PS4 or Xbox One.

What also surprised us was the game’s minimal performance difference between a tri-core and a quad-core CPU. Watch_Dogs’ Disrupt engine scales wonderfully on a quad-core (all four cores are put to work), yet the performance difference between a tri-core and a quad-core is only 3fps. This makes us wonder: why is the game stressing all four CPU cores if there is no real benefit in going from a tri-core to a quad-core? Below you can find comparison shots between a dual-core, a tri-core and a quad-core system. Moreover, we’ve included a video showing the similar performance of the game running on a tri-core and on a quad-core.

So, Watch_Dogs requires a high-end CPU to shine, and dual-cores (or even older quad-cores, if you are targeting 60fps) are not able to provide enjoyable performance. But that’s not all. The game also has some ridiculously high VRAM requirements. Nvidia claimed in its performance guide that a GTX 690 is able to handle the game with Ultra settings (albeit using High textures). Well, that’s far from the truth, as there is a ridiculous amount of stuttering with Nvidia’s settings. In order to enjoy a stutter-free experience, we had to completely disable AA and run the game at High settings (and 1080p). With those settings, there was no stuttering even while driving. We don’t know whether Ubisoft or Nvidia will be able to provide a performance boost for 2GB GPUs, so until we get a new driver or a patch, we strongly suggest avoiding the Ultra settings (unless you have a 4GB or a 6GB GPU).

Graphics-wise, Watch_Dogs looks okay-ish at High settings. Its visuals are washed out and the game desperately needs some SweetFX treatment. Graphical effects (such as Parallax Occlusion Mapping, smoke effects, debris from explosions and the number of reflected lights) have been reduced or completely removed. The lighting system also feels ‘simplistic’ and ‘weak’, and is nowhere near as good as what Ubisoft advertised. Specular and normal maps are also underused. And while the game requires more than 2GB of VRAM for its Ultra textures, those textures are nowhere near as good as those found in Crysis 3 or Battlefield 4. Truth be told, those games aren’t sandbox titles, so the comparison is not entirely fair. Still, Watch_Dogs’ textures feel underwhelming given their high requirements. And while the game looks great when downsampled (with every graphical setting cranked up), most PC gamers won’t even be able to experience that due to the game’s ridiculous VRAM requirements.

All in all, Watch_Dogs is a mess. Its performance is underwhelming, its VRAM requirements are unjustified for what is being displayed on screen, and most gamers will encounter major stuttering. Although the Disrupt engine scales well on quad-cores, there is only a minimal performance difference between a tri-core and a quad-core CPU. Ironically, Ubisoft’s Splinter Cell: Blacklist, which is powered by a heavily modified Unreal Engine 2.5, shows better CPU scaling on all cores. Not only that, but there is a noteworthy performance difference between tri-cores and quad-cores in Splinter Cell: Blacklist.

Enjoy and stay tuned for our second part, in which we’ll put Intel’s i7 4930K to the test!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved (and still loves) the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over from consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
