NVIDIA has just lifted the review embargo for the NVIDIA GeForce RTX 5080. And, since the green team has provided us with a review sample, it’s time to benchmark it in the most graphically demanding games that are available on PC.

For these benchmarks, we used an AMD Ryzen 9 7950X3D with 32GB of DDR5 at 6000MHz. We also used Windows 10 64-bit and the GeForce 572.02 driver. Moreover, we’ve disabled the second CCD on our 7950X3D, which is the ideal setup for gaming on this particular CPU.

Before continuing, here are the full specs of the RTX 5080. This GPU has 10752 NVIDIA CUDA Cores, uses a 256-bit memory interface and comes with 16GB of GDDR7. It has 3x DisplayPort 2.1b with UHBR20 and 1x HDMI 2.1b connectors. Plus, it supports Dolby Vision for HDR.

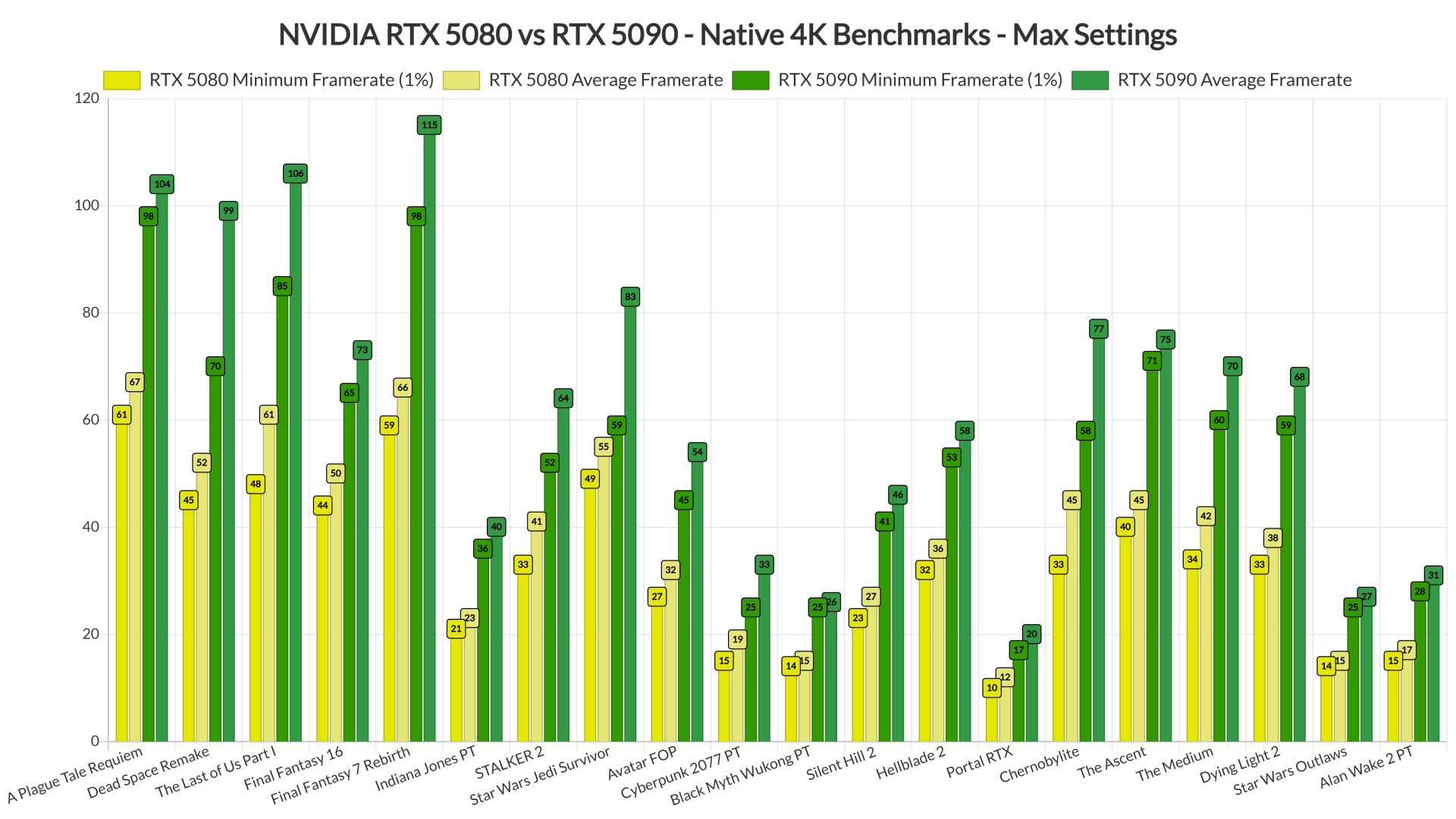

Let’s start with our Native 4K benchmarks. For these tests, we’ve used the most demanding games you will find on PC. As you will see, we’ve also replaced Starfield with Final Fantasy 7 Rebirth. Starfield was CPU-bound even at 4K, so it made no sense to include it in our graphs.

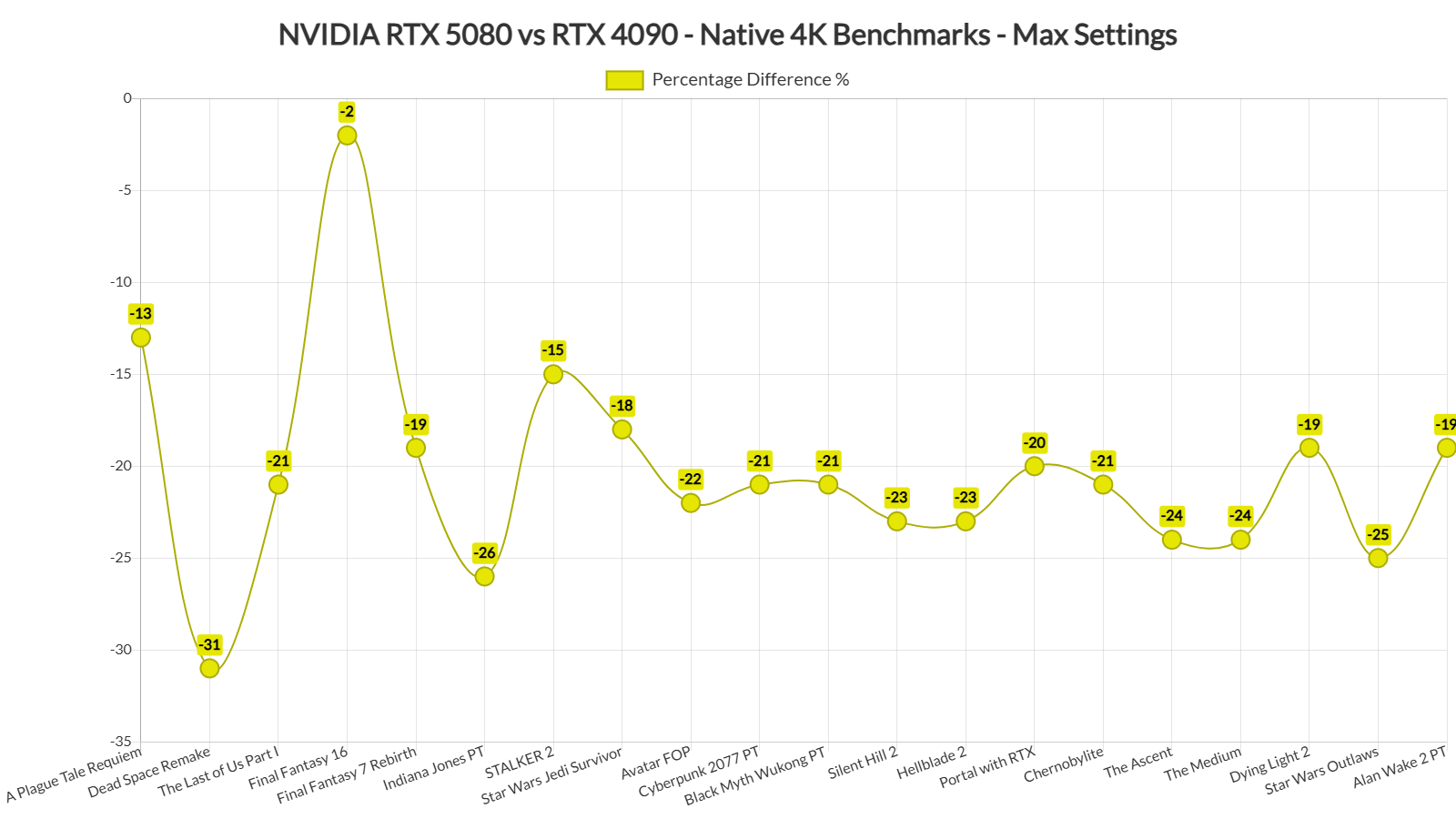

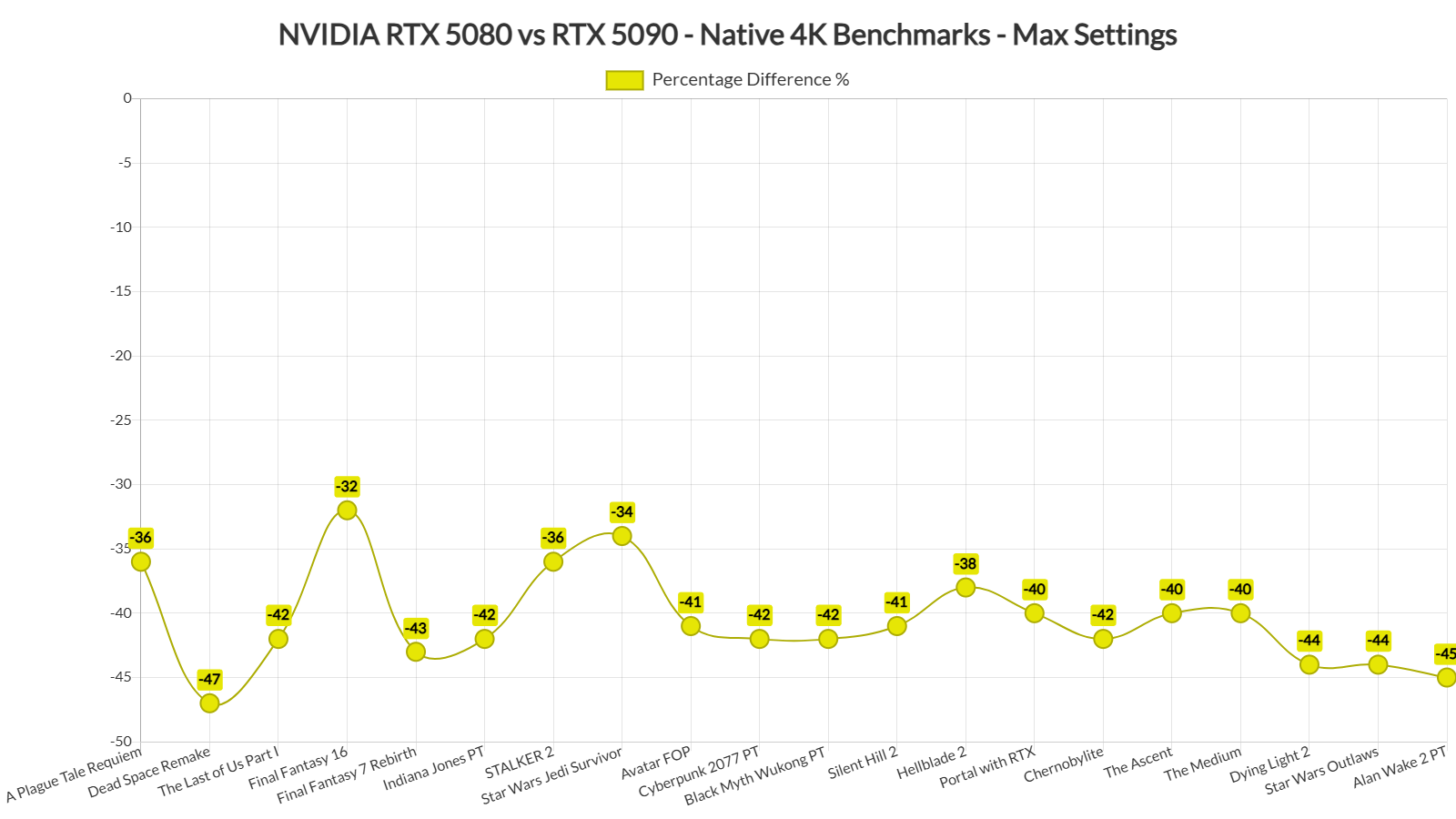

Without DLSS, the NVIDIA RTX 5080 struggles to run non-RT games at 4K. Overall, the NVIDIA RTX 5080 is 20% slower than the RTX 4090 and 41% slower than the RTX 5090.
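
To put those two percentages in relation to each other, here is a quick back-of-the-envelope sketch in Python. It only uses the 20% and 41% averages quoted above. The function name and the choice to normalise everything to the RTX 5080 are our own illustrative assumptions, and the output is plain arithmetic, not a new measurement.

```python
# Relative performance implied by the averages quoted above.
# "X% slower than card B" means: fps_5080 = fps_B * (1 - X / 100).

def implied_speedup(percent_slower: float) -> float:
    """How many times faster the reference card is than the RTX 5080."""
    return 1.0 / (1.0 - percent_slower / 100.0)

rtx4090_vs_5080 = implied_speedup(20.0)  # 1 / 0.80
rtx5090_vs_5080 = implied_speedup(41.0)  # 1 / 0.59

# The same two numbers also imply the gap between the 5090 and the 4090.
rtx5090_vs_4090 = rtx5090_vs_5080 / rtx4090_vs_5080

print(f"RTX 4090 vs RTX 5080: {rtx4090_vs_5080:.2f}x")
print(f"RTX 5090 vs RTX 5080: {rtx5090_vs_5080:.2f}x")
print(f"RTX 5090 vs RTX 4090: {rtx5090_vs_4090:.2f}x")
```

Put differently, "20% slower than the RTX 4090" means the RTX 4090 is about 25% faster than the RTX 5080, and "41% slower than the RTX 5090" means the RTX 5090 is roughly 69% faster.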

Sadly, we don’t have an RTX 4080, so we couldn’t compare it with the RTX 5080. As a result, we don’t know whether NVIDIA’s performance claims over the RTX 4080 are valid. For this, we highly recommend reading other reviews. The good news is that the NVIDIA RTX 5080 is priced similarly to the RTX 4080. So, even if the RTX 5080 is only 5% faster than the RTX 4080, it still offers better value. It may not set the world on fire, but it’s the better buy of the two.

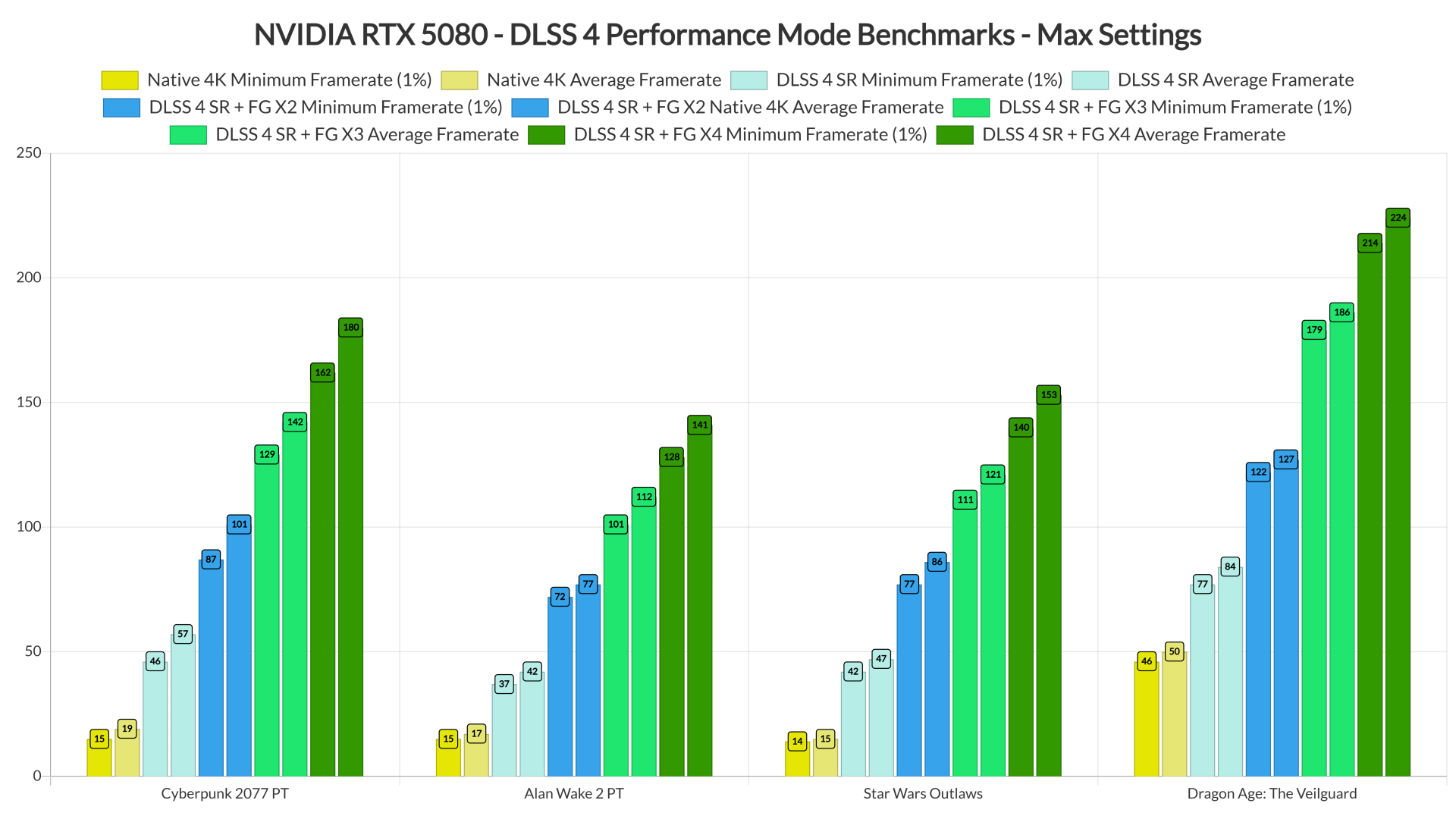

But what about DLSS 4? Well, now that’s a subject we need to talk about. Let’s start with the 4K benchmarks and the framerate benefits you’ll get with DLSS 4 X4 (using Super Resolution Performance Mode).

Sadly, there are some issues with DLSS 4 that can be more easily noticed on the RTX 5080 than on the RTX 5090. For example, Alan Wake 2 had noticeable input latency issues. Not only that, but it also suffered from the same visual artifacts we experienced with the RTX 5090. Yes, you’ll get smoother performance at 4K than you would without DLSS 4 Super Resolution or Frame Gen. However, this isn’t a free performance boost. It’s more like motion smoothing.

Cyberpunk 2077 also suffered from some visual artifacts, especially when it came to in-game icons and quest markers. They were quite noticeable on my end. These artifacts are similar to those I had in Dragon Age: The Veilguard when running it on the RTX 5090 at 8K with DLSS 4 X4. Basically, the base framerate (before using Frame Gen) is so low that MFG has trouble calculating the location of some icons or HUD elements. So, during quick movements, you’ll get stuttery in-game icons.

In short, the RTX 5080 exposes the issues of MFG. When you have a high base framerate, you’ll get a great gaming experience with MFG. Dragon Age: The Veilguard was a perfect example of this on the RTX 5080. But when your base framerate is around 35-45FPS, you’ll get noticeable visual artifacts and, in some games, extra input lag. Thankfully, NVIDIA will release Reflex 2, which might be able to reduce the input latency. Sadly, we didn’t have access to it, so I can’t comment on it. As for the visual artifacts, I really hope the green team will fix them via future drivers/versions. There is a lot of potential here. Right now, though, MFG is not as ideal as it was on the RTX 5090. Which… well… makes perfect sense as the base framerate on the RTX 5090 was higher.
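
The arithmetic behind this is simple enough to sketch. The Python snippet below is a simplified model, not NVIDIA's implementation: it assumes that an X4 mode displays four frames for every rendered one, and that the game only reacts to input on rendered frames. The function name and the 90FPS comparison point are hypothetical and only there for illustration.

```python
def mfg_summary(base_fps: float, multiplier: int) -> dict:
    """Simplified model: displayed framerate vs. time between rendered frames."""
    return {
        "displayed_fps": base_fps * multiplier,
        # Time between two *rendered* frames, in milliseconds. In this
        # simplified model, this is how often the game can react to input.
        "rendered_frame_time_ms": 1000.0 / base_fps,
    }

for base_fps in (35, 45, 90):
    s = mfg_summary(base_fps, multiplier=4)
    print(
        f"{base_fps} FPS base -> {s['displayed_fps']:.0f} FPS displayed, "
        f"{s['rendered_frame_time_ms']:.1f} ms between rendered frames"
    )
```

Under these assumptions, a 35-45FPS base leaves gaps of roughly 22-29 ms between rendered frames for the generated frames to fill, compared to about 11 ms at a 90FPS base. The displayed number goes up either way, but the gap that has to be filled is much larger at a low base framerate.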

Another thing to note is that MFG mostly targets high refresh rate monitors. If you have a 4K/120Hz monitor, MFG is not something you’d want to use. In these situations, DLSS 4 FG X2 should be enough for gaming without exceeding the refresh rate of your monitor. So, if you don’t have a 240Hz or 360Hz monitor, MFG is a nothing-burger.
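
A quick way to see why: the sketch below divides a monitor's refresh rate by the frame generation multiplier to get the highest base framerate that still fits under that refresh rate. It assumes the displayed framerate is simply the base framerate times the multiplier, and the helper name is hypothetical.

```python
def max_base_fps(refresh_hz: int, multiplier: int) -> float:
    """Highest base framerate whose multiplied output stays within refresh_hz."""
    return refresh_hz / multiplier

for refresh_hz in (120, 240, 360):
    for multiplier in (2, 4):
        cap = max_base_fps(refresh_hz, multiplier)
        print(f"{refresh_hz}Hz, X{multiplier}: base framerate up to {cap:.0f} FPS")
```

On a 120Hz display, X4 only stays within the refresh rate with a base framerate of 30FPS or less, while X2 leaves room for a base of up to 60FPS. A 240Hz or 360Hz display raises the X4 ceiling to 60FPS and 90FPS respectively.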

NVIDIA has been trying to push MFG as a “free performance upgrade” but we all know that’s not the case. If it were, it would not introduce extra input lag or visual artifacts when using it at 30FPS. It’s a cool tech, but it’s not a free performance boost. It also currently suffers from the issues I’ve described above. Once NVIDIA manages to iron them out, MFG will feel and look better, and it will be easier to recommend (for anything other than an RTX 5090).

So, who is this GPU for? If you have an RTX 4080, you should not consider upgrading to an RTX 5080. The performance difference between them is not that big. For those who are still on an RTX 30 series GPU, the RTX 5080 is a “turbo-boosted” RTX 4080 Super with MFG. For the same price, you’ll get a faster GPU with new features. It’s not a bad deal. The RTX 5080 is not a bad GPU. It just doesn’t feel like a next-gen GPU. It doesn’t have that “wow” factor. So, if you are expecting or hoping for something truly amazing, you’ll be disappointed!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved, and still loves, the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
