Ubisoft has just provided us with a PC review code for Avatar: Frontiers Of Pandora. So, in this article, we’ll take a look at the AMD FSR 3.0 implementation, and share our initial PC performance impressions.

For this article, we used an AMD Ryzen 9 7950X3D, 32GB of DDR5 at 6000MHz, and an NVIDIA RTX 4090. We also used Windows 10 64-bit, and the GeForce 546.29 driver. Moreover, we’ve disabled the second CCD on our 7950X3D.

Ubisoft has implemented various graphics settings to tweak. And yes, the game does support ray-traced shadows and ray-traced reflections. Interestingly enough, we couldn’t find any setting for RTGI. My guess is that RTGI is enabled by default. The bad news here, though, is that you cannot adjust its quality. As such, in some dark places, you will spot some visual artifacts.

AMD FSR 3’s Super Resolution appears to be great and on par with NVIDIA DLSS 2. Below you can find a comparison between DLAA (left), DLSS 2 Ultra Quality (middle) and FSR 3.0 Ultra Quality (right). AMD FSR 3.0 is noticeably sharper than DLSS 2, something that will please a lot of gamers. Not only that, but in still images it provides similar results to DLSS 2. This is by far one of the best implementations of FSR we’ve seen to date.

Now, while FSR 3 Super Resolution is great, FSR 3 Frame Generation is still not perfect. To its credit, FSR 3.0 is no longer a stuttery mess. However, there are still tearing issues when using a G-Sync monitor. And, for reasons we couldn’t pin down, the game never felt smooth with FSR 3.0 Frame Generation enabled.

Thankfully, Avatar offers a framerate limiter and a refresh rate option. By dropping the refresh rate to 100Hz, and by setting the framerate limiter to 100fps, we were able to achieve a somewhat acceptable experience. That was with the game holding a constant 100fps.

In terms of input latency, I did not experience any major issues when using FSR 3.0 Frame Generation. With a baseline framerate of 60fps, everything felt responsive. So, if you can hit 50-60fps without FSR 3.0, Frame Generation is an extra way to improve the game’s performance. Right now, the game supports Frame Generation only with FSR 3.0. In a future update, Ubisoft will add support for DLSS 3.
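To put the framerate numbers above into perspective, here is a rough Python sketch of the frame-time arithmetic. It assumes that Frame Generation inserts one generated frame for every rendered frame, which is our assumption and not something we measured in the game. The function names are ours and purely illustrative.

```python
def frame_time_ms(fps: float) -> float:
    """Time budget for a single frame, in milliseconds."""
    return 1000.0 / fps


def rendered_fps(output_fps: float, generated_per_rendered: int = 1) -> float:
    """Estimate how many frames the game actually renders per second.

    Assumption: every rendered frame is followed by
    `generated_per_rendered` generated frames.
    """
    return output_fps / (1 + generated_per_rendered)


if __name__ == "__main__":
    capped_output = 100.0  # the 100fps limiter we used with a 100Hz refresh rate
    base = rendered_fps(capped_output)
    print(f"Output frame time: {frame_time_ms(capped_output):.1f} ms")
    print(f"Estimated rendered framerate: {base:.0f} fps")
    print(f"Estimated rendered frame time: {frame_time_ms(base):.1f} ms")
```

Under that assumption, a 100fps cap leaves the game rendering roughly 50 frames per second, which sits at the low end of the 50-60fps baseline mentioned above.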

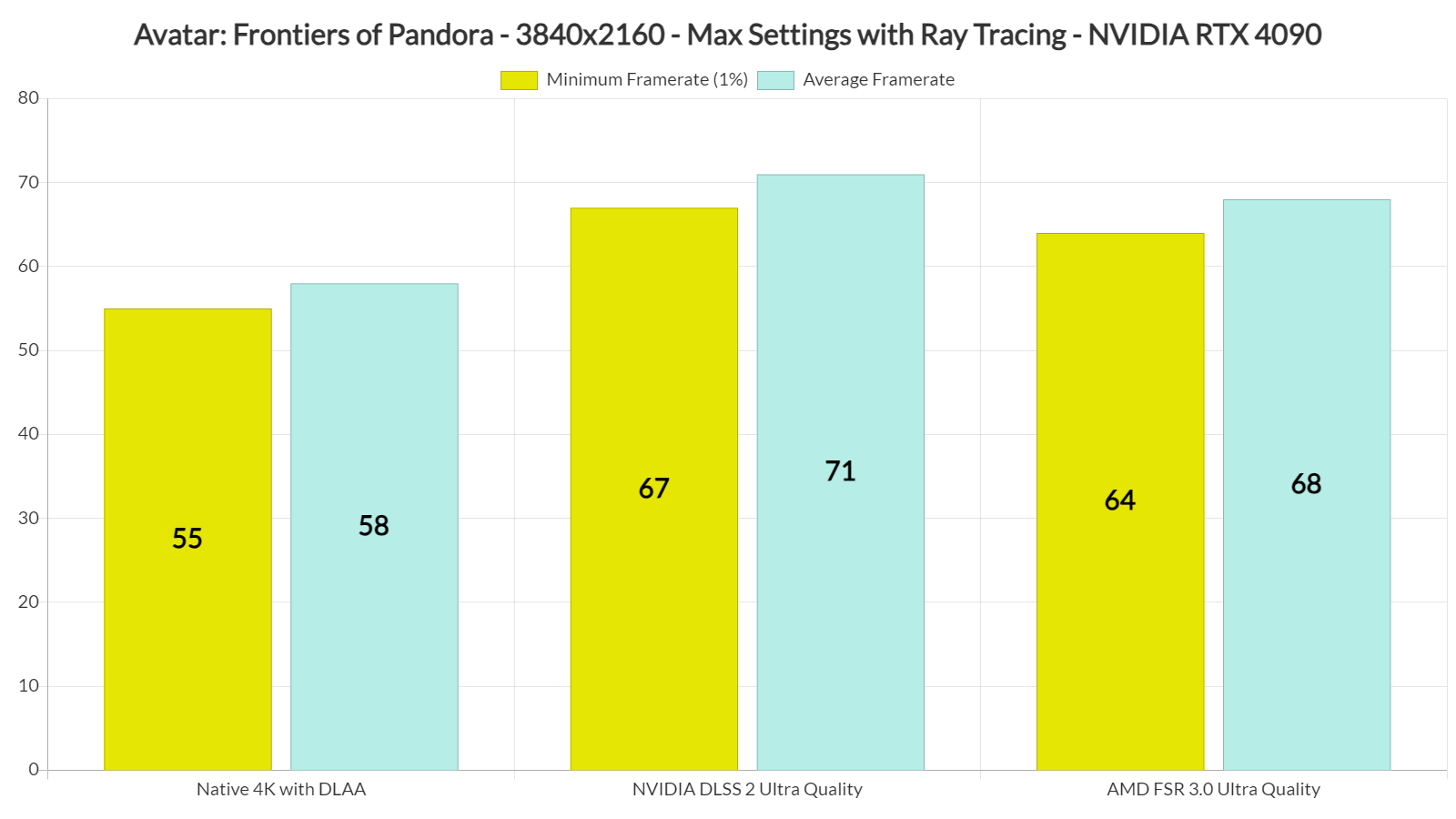

Lastly, at Native 4K/Max Settings, the NVIDIA RTX 4090 was able to push a minimum of 55fps and an average of 58fps in the first open-world area. With DLSS 2 Ultra Quality, we were getting 71fps. And with FSR 3 Ultra Quality, we were at 68fps. And yes, you read that right. Both DLSS 2 and FSR 3.0 have an Ultra Quality Mode.
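For those who want the relative gains, here is a minimal Python sketch that turns the figures above into percentages. It assumes that the 71fps and 68fps results are averages comparable to the 58fps native average, and the helper name is ours.

```python
def uplift_percent(baseline_fps: float, upscaled_fps: float) -> float:
    """Relative framerate gain over the baseline, in percent."""
    return (upscaled_fps / baseline_fps - 1.0) * 100.0


if __name__ == "__main__":
    native_avg = 58.0  # Native 4K/Max Settings average on the RTX 4090
    results = {
        "DLSS 2 Ultra Quality": 71.0,
        "FSR 3 Ultra Quality": 68.0,
    }
    for name, fps in results.items():
        print(f"{name}: +{uplift_percent(native_avg, fps):.1f}% over native")
```

Based on these numbers, DLSS 2 Ultra Quality is around 22% faster than native 4K, while FSR 3 Ultra Quality is around 17% faster.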

Stay tuned for our PC Performance Analysis, in which we’ll benchmark more AMD and NVIDIA GPUs in various resolutions!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
