The Lord of the Rings: Gollum releases tomorrow and, from the looks of it, NVIDIA’s high-end GPU, the RTX 4090, can run it at 48fps in Native 4K with Ray Tracing.

For those unaware, Daedalic Entertainment has used Ray Tracing in order to enhance the game’s reflections and shadows. Moreover, the game will support DLSS 3 from the get-go.

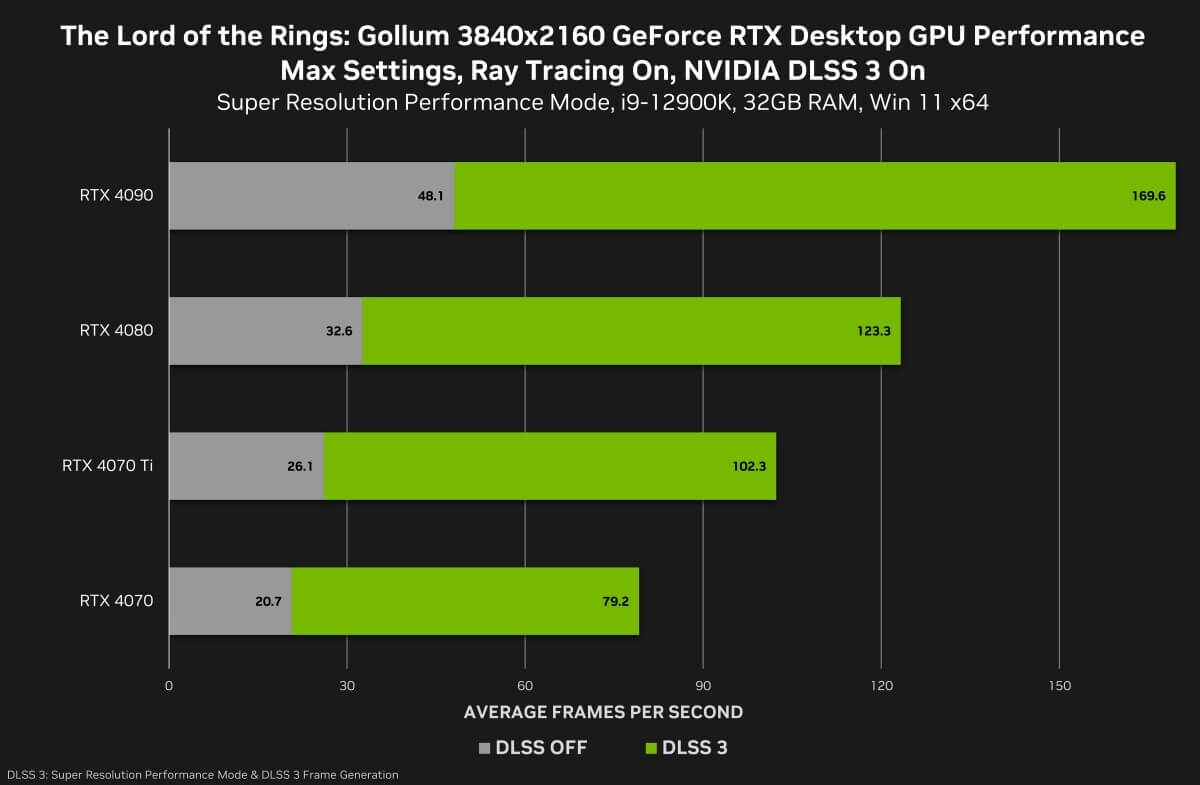

According to NVIDIA, the RTX 4090 can push 48fps at Native 4K with Ray Tracing. Its second most powerful GPU, the RTX 4080, can push an average of 30fps at Native 4K with Ray Tracing.

In short, PC gamers will need DLSS 2 (or DLSS 3) in order to enjoy the game with smooth framerates at 4K. NVIDIA has shared some performance numbers; however, DLSS 3’s Super Resolution was set to Performance Mode. Thus, expect lower framerates when using DLSS 3’s Quality Mode.
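To put the Performance versus Quality difference into numbers, here is a rough sketch of the internal resolution each DLSS Super Resolution mode renders at before upscaling to 4K. The scale factors are the commonly cited per-axis defaults and are an assumption here; neither NVIDIA nor Daedalic has confirmed the values this game uses, and the function names are purely illustrative.

```python
# Commonly cited per-axis render scales for DLSS Super Resolution modes.
# Assumption: the game sticks to these defaults; a title can override them.
DLSS_SCALE = {
    "Quality": 2 / 3,
    "Balanced": 0.58,
    "Performance": 0.5,
}


def internal_resolution(width, height, mode):
    """Return the (width, height) rendered internally before upscaling."""
    scale = DLSS_SCALE[mode]
    return round(width * scale), round(height * scale)


def pixel_fraction(mode):
    """Return the share of native pixels that is actually rendered."""
    return DLSS_SCALE[mode] ** 2


# Compare the modes for a 4K (3840x2160) output.
for mode in DLSS_SCALE:
    w, h = internal_resolution(3840, 2160, mode)
    print(f"{mode}: {w}x{h} ({pixel_fraction(mode):.0%} of native pixels)")
```

Under these assumptions, Quality Mode renders noticeably more pixels per frame than Performance Mode, which is why its framerates should land below the figures NVIDIA shared.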

Although Daedalic has not provided us with a review code, we’ll be sure to test and benchmark the game once it releases.

Stay tuned for more!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
