Focus Entertainment has just released Atomic Heart on PC. The game uses Unreal Engine 4 and, unfortunately, launched without its advertised Ray Tracing effects. However, it appears that the game can at least run smoothly on modern-day PC configurations. At Native 4K and Max Settings, our NVIDIA GeForce RTX 4090 was able to push over 85fps at all times.

To capture the following gameplay footage, we used an Intel i9 9900K, 16GB of DDR4 at 3800MHz, and NVIDIA’s RTX 4090. We also used Windows 10 64-bit and the GeForce 528.24 driver.

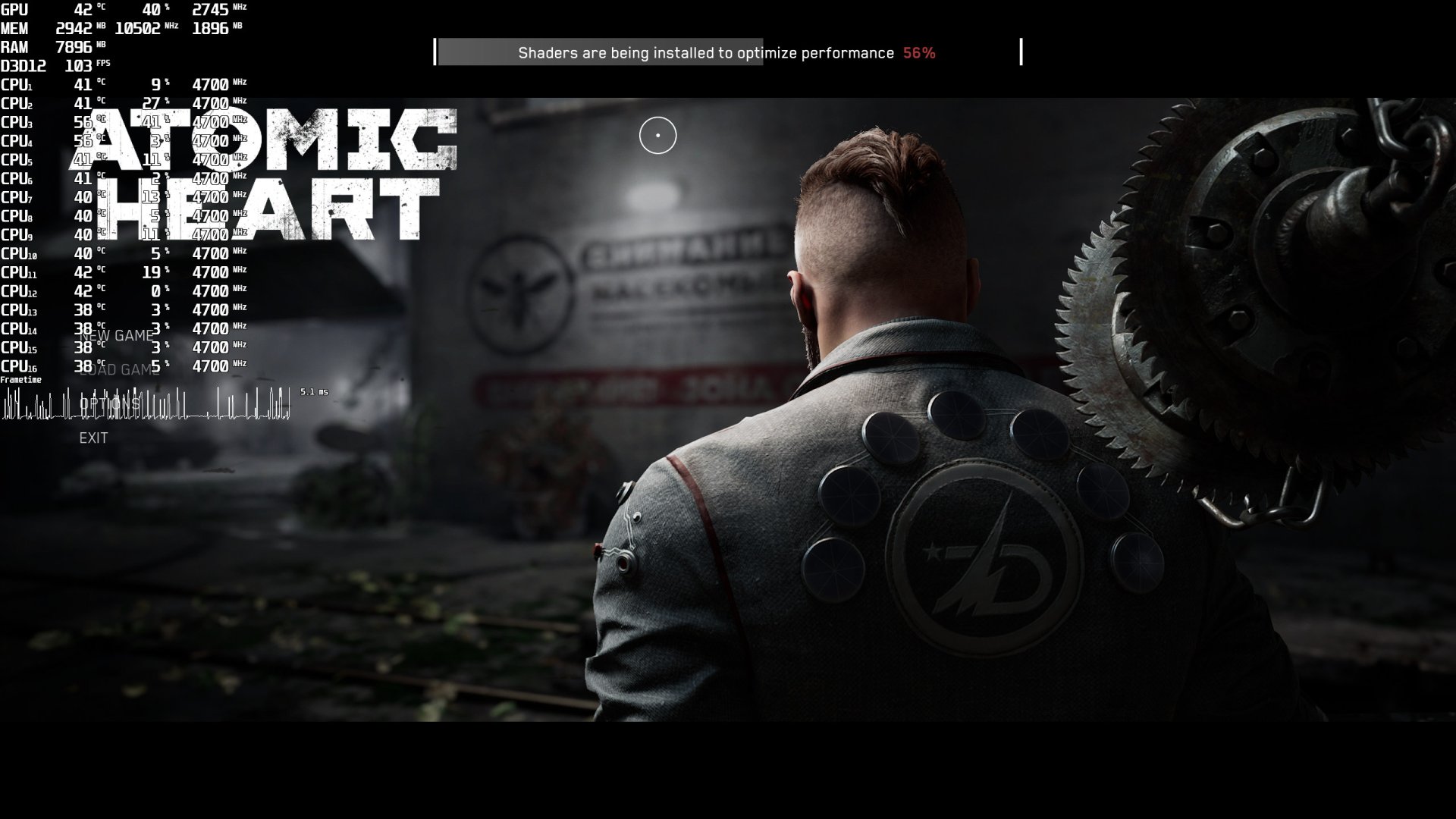

Atomic Heart allows PC gamers to compile its shaders before beginning the single-player campaign. To avoid any shader compilation stutters, we suggest letting the game compile all of its shaders.

The game also supports DLSS 2/3, as well as FSR 2.0. Furthermore, and contrary to reports, the game doesn’t appear to have any mouse acceleration or smoothing issues.

For the most part, the NVIDIA GeForce RTX 4090 is able to run Atomic Heart at 100fps at native 4K with Max Settings. However, using the Scanner dropped our framerate from 120fps to 85fps (with the GPU being used to its fullest).
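To put the Scanner drop in perspective, here is a minimal Python sketch that converts the reported framerates into frame times and a percentage drop. The function names are our own illustration and are not part of any benchmarking tool; the only inputs are the 120fps and 85fps figures mentioned above.

```python
def frame_time_ms(fps: float) -> float:
    """Return the time spent rendering one frame, in milliseconds."""
    return 1000.0 / fps


def percent_drop(before_fps: float, after_fps: float) -> float:
    """Return the framerate drop as a percentage of the starting framerate."""
    return (before_fps - after_fps) / before_fps * 100.0


if __name__ == "__main__":
    # Figures reported for the Scanner scene (hypothetical variable names).
    before, after = 120.0, 85.0
    print(f"Frame time at {before:.0f}fps: {frame_time_ms(before):.2f} ms")
    print(f"Frame time at {after:.0f}fps: {frame_time_ms(after):.2f} ms")
    print(f"Framerate drop: {percent_drop(before, after):.1f}%")
```

In other words, the Scanner costs roughly 3.4 ms per frame, or a little over 29% of the framerate, in that scene.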

Our PC Performance Analysis for Atomic Heart will go live later this week. Since the game does not have any built-in benchmark tool, we’ll be using the Scanner scene. After all, that’s one of the most demanding scenarios early in the game. For those wondering, we also did not experience any major stutters.

Stay tuned for more!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
