It appears that AMD is back. The red team has lifted the review embargo on the AMD Radeon RX 6800 and RX 6800XT and, as we can see, AMD has finally delivered a competitive product. In fact, in some rasterized games, the AMD Radeon RX 6800XT can surpass even the NVIDIA GeForce RTX 3090.

Now, I want to make it crystal clear that, for the most part, the AMD Radeon RX 6800XT performs slightly worse than the RTX 3080. However, there are some situations in which AMD's GPU can surpass NVIDIA's high-end RTX 3090. Do also note that AMD is working on a more powerful GPU, the Radeon RX 6900XT. Thus, in theory, that GPU should beat the RTX 3090 in more rasterized games.

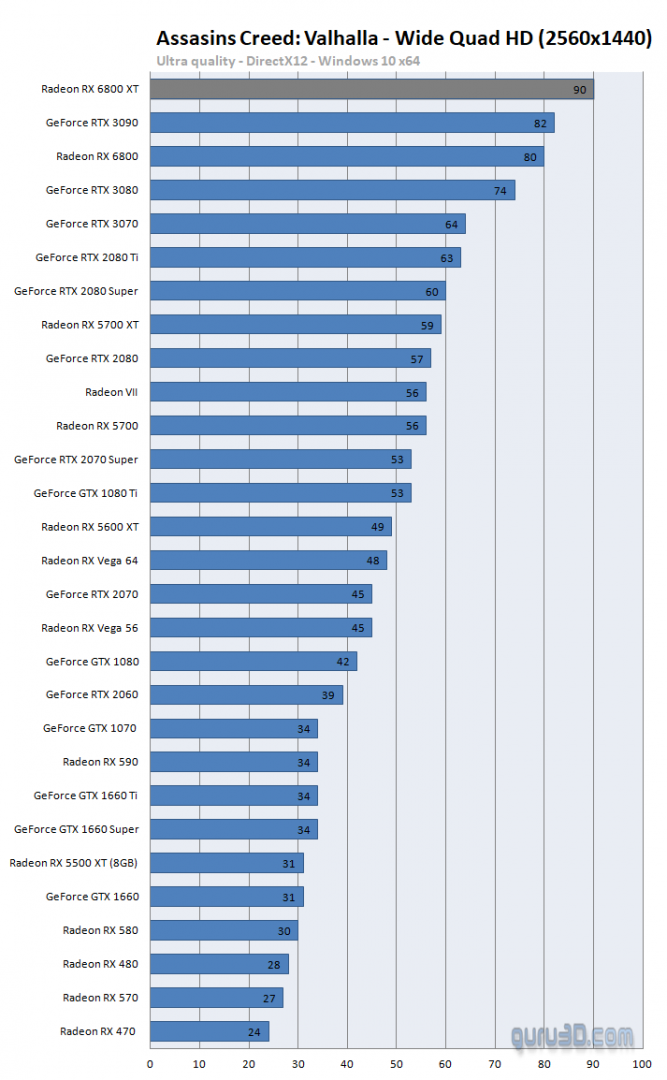

For instance, the AMD Radeon RX 6800XT beats the NVIDIA RTX 3090 in Assassin's Creed Valhalla. Of course, this should not surprise us, as Valhalla favours AMD's GPUs.

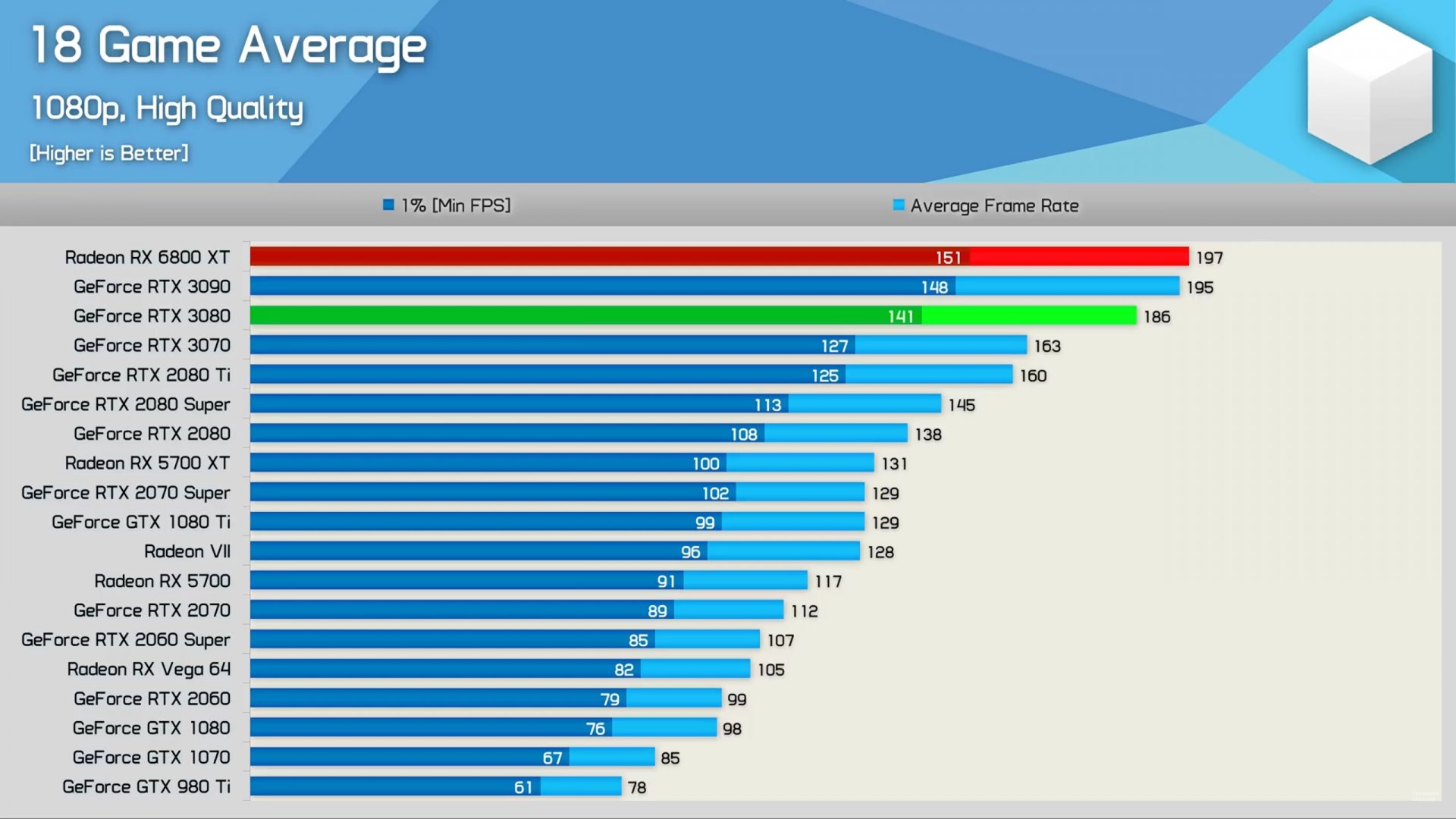

Now, what's really interesting is the 1080p performance of the AMD Radeon RX 6800XT. Multiple websites have reported that, at this resolution, this GPU can come close to the RTX 3090. However, when raising the resolution to 1440p or 4K, the gap between these two GPUs widens. Both HardwareUnboxed and Guru3D reported these performance differences.

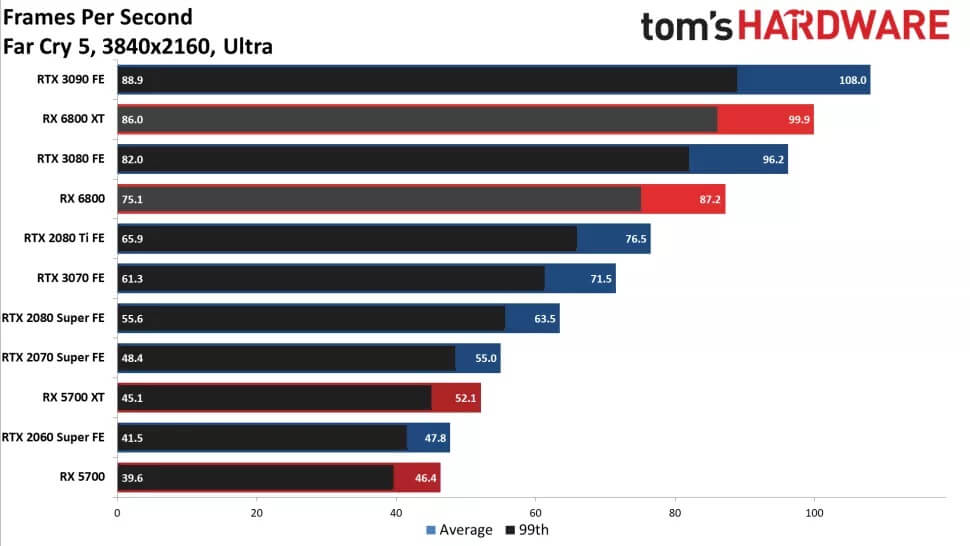

Tomshardware also reports that the AMD Radeon RX 6800XT is faster than the RTX 3080 in some games, but slower than the RTX 3090.

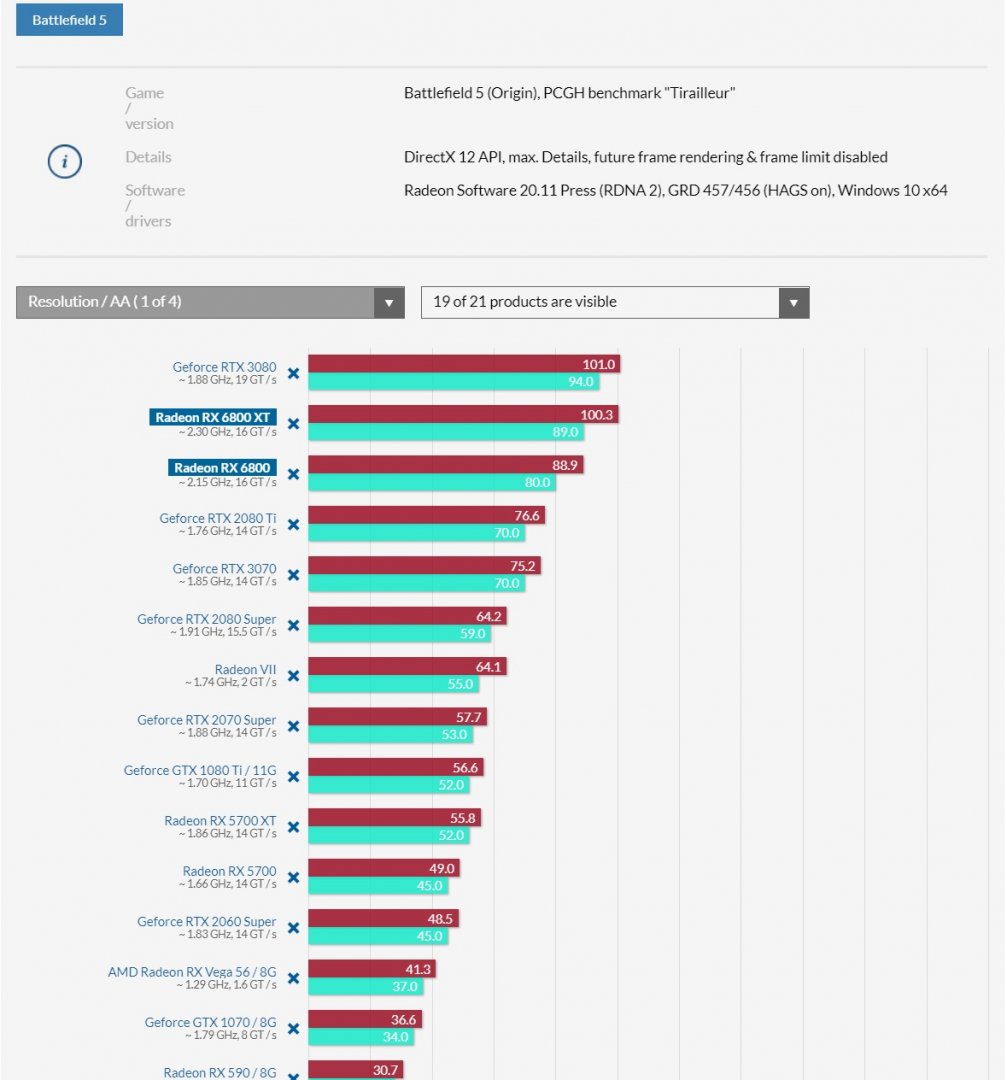

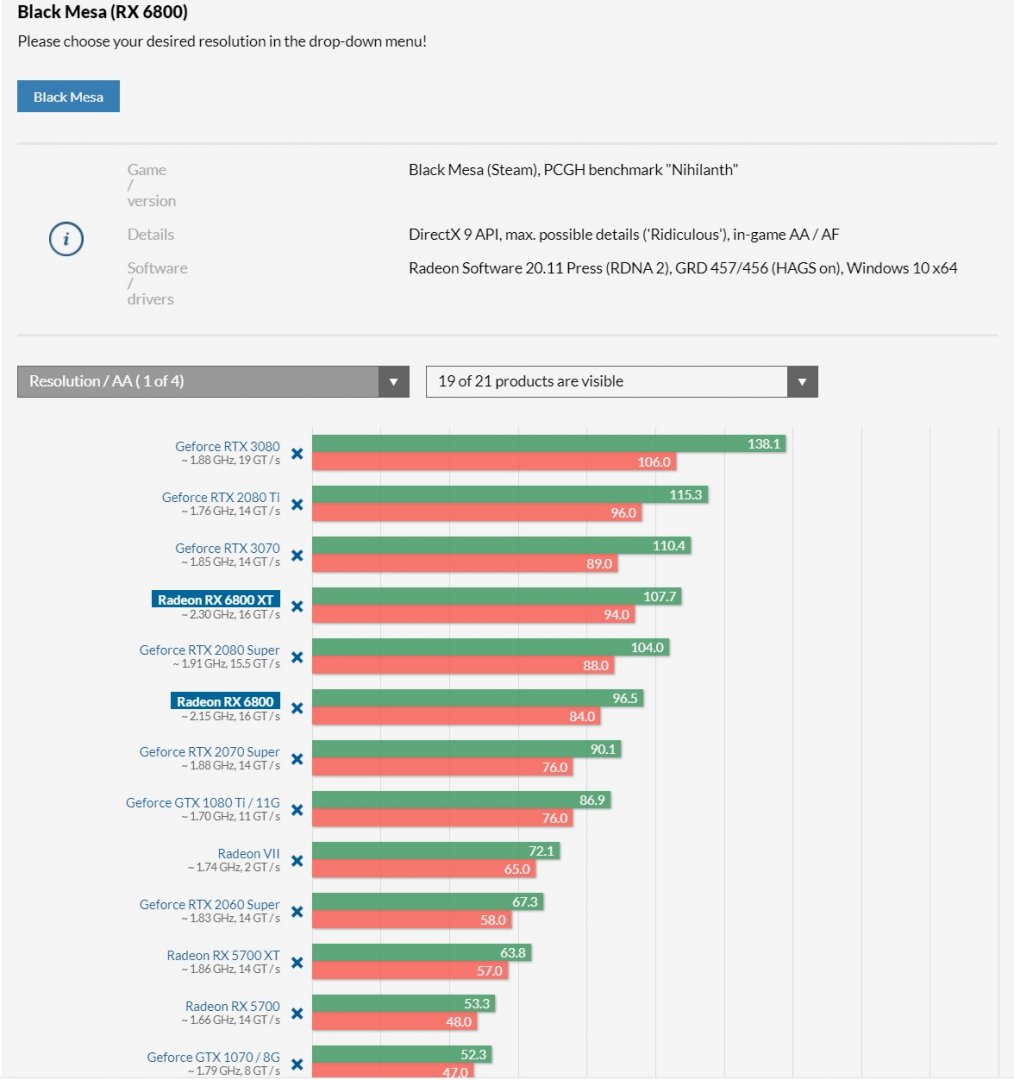

PCGamesHardware reports that the AMD Radeon RX 6800XT is faster than the RTX 3080 in Battlefield 5 at 1440p. On the other hand, in some games that favour NVIDIA GPUs (like Black Mesa), the RX 6800XT can be slower than even the RTX 2080 Ti.

The 4K numbers are also interesting, especially since the RTX 3080 comes with 10GB of VRAM, whereas the RX 6800XT comes with 16GB. Despite that, the NVIDIA GPU is noticeably faster in many games at 4K. Kitguru reports that in Ghost Recon Breakpoint, Watch Dogs Legion and Red Dead Redemption 2, the AMD RX 6800XT is slower than the RTX 3080 at 4K.

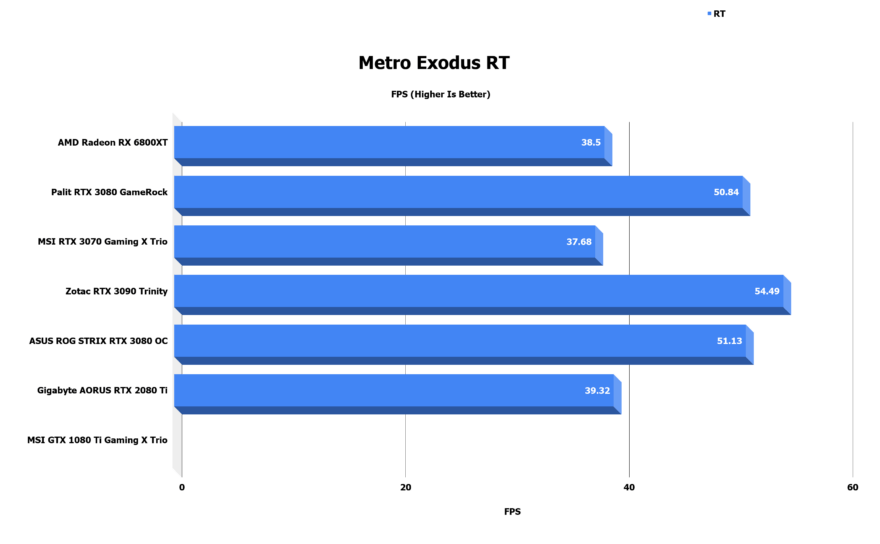

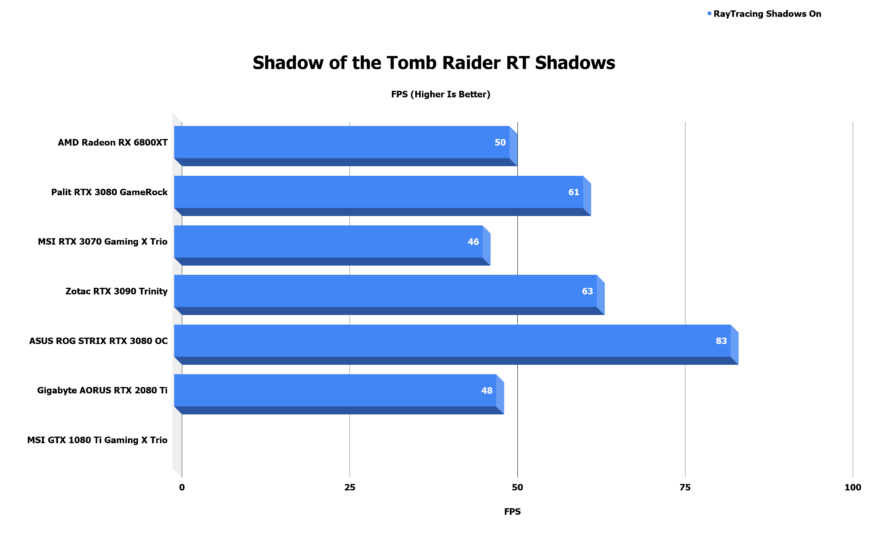

Unfortunately, we haven't seen many Ray Tracing benchmarks. As HardwareUnboxed reported, Dirt 5 (a game that favours AMD GPUs) runs its Ray Tracing effects faster on the RX 6800XT. However, in other games (like Shadow of the Tomb Raider), the RX 6800XT performs similarly to the RTX 2080 Ti. Likewise, Eteknix reports that in Metro Exodus, the RT performance of the RX 6800XT is similar to that of the RTX 2080 Ti. So yeah, the Ray Tracing performance of the RX 6800XT appears to be inconsistent. Not only that, but AMD has not yet implemented its DLSS-like technique in any RT games.

Overall, AMD has finally delivered a competitive product. However, in the majority of games, it does not beat the RTX 3080 or the RTX 3090 at higher resolutions. We are also really puzzled by the 4K results, and we don't know whether this is a driver issue that AMD can resolve via future updates. After all, the RX 6800XT can outperform even the RTX 3090 at lower resolutions. Additionally, its Ray Tracing performance does not appear to be that great, especially if we take NVIDIA's DLSS tech into account. Not only that, but we haven't seen FidelityFX Super Resolution in action yet, so we don't know whether it will be as good as DLSS 2.0 or as awful as DLSS 1.0. Lastly, and at least for now, the 16GB of VRAM brings no tangible benefits.

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved, and still loves, the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over from consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, the Voodoo 2. John has also written a higher degree thesis on "The Evolution of PC Graphics Cards."
