Total War Saga: Thrones of Britannia is a new Total War game that has just been released on the PC. It promises to combine huge real-time battles with an engrossing turn-based campaign, set at a critical flashpoint in history. SEGA provided us with a review code, so it’s time to benchmark it and see how it performs on the PC platform.

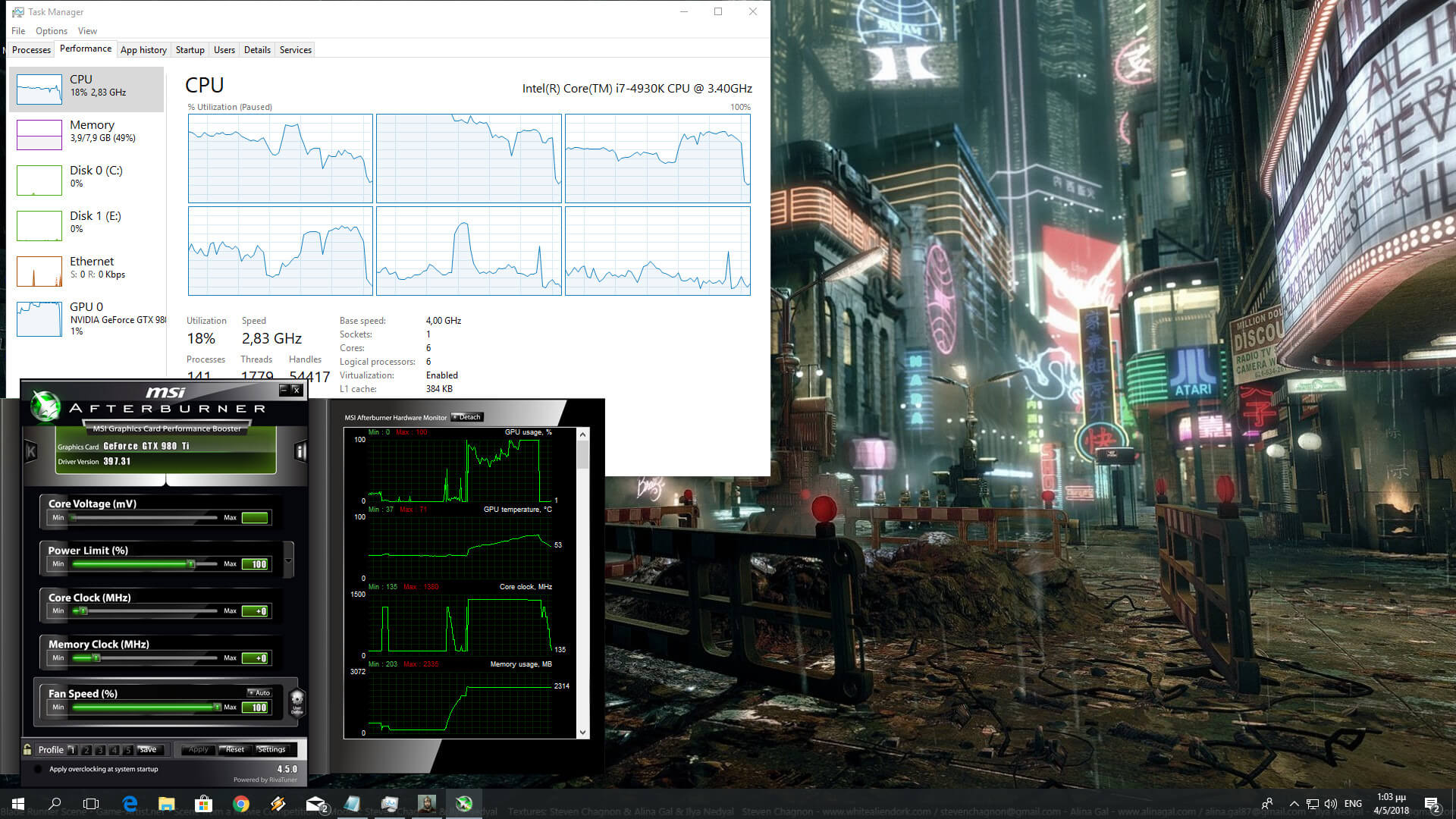

For this PC Performance Analysis, we used an Intel i7 4930K (overclocked to 4.2GHz) with 8GB RAM, AMD’s Radeon RX580 and RX Vega 64, NVIDIA’s GTX980Ti and GTX690, Windows 10 64-bit and the latest versions of the GeForce and Radeon Software drivers. NVIDIA has not included an SLI profile for this game, so our GTX690 performed similarly to a single GTX680. For our tests, we used the in-game benchmark tool.

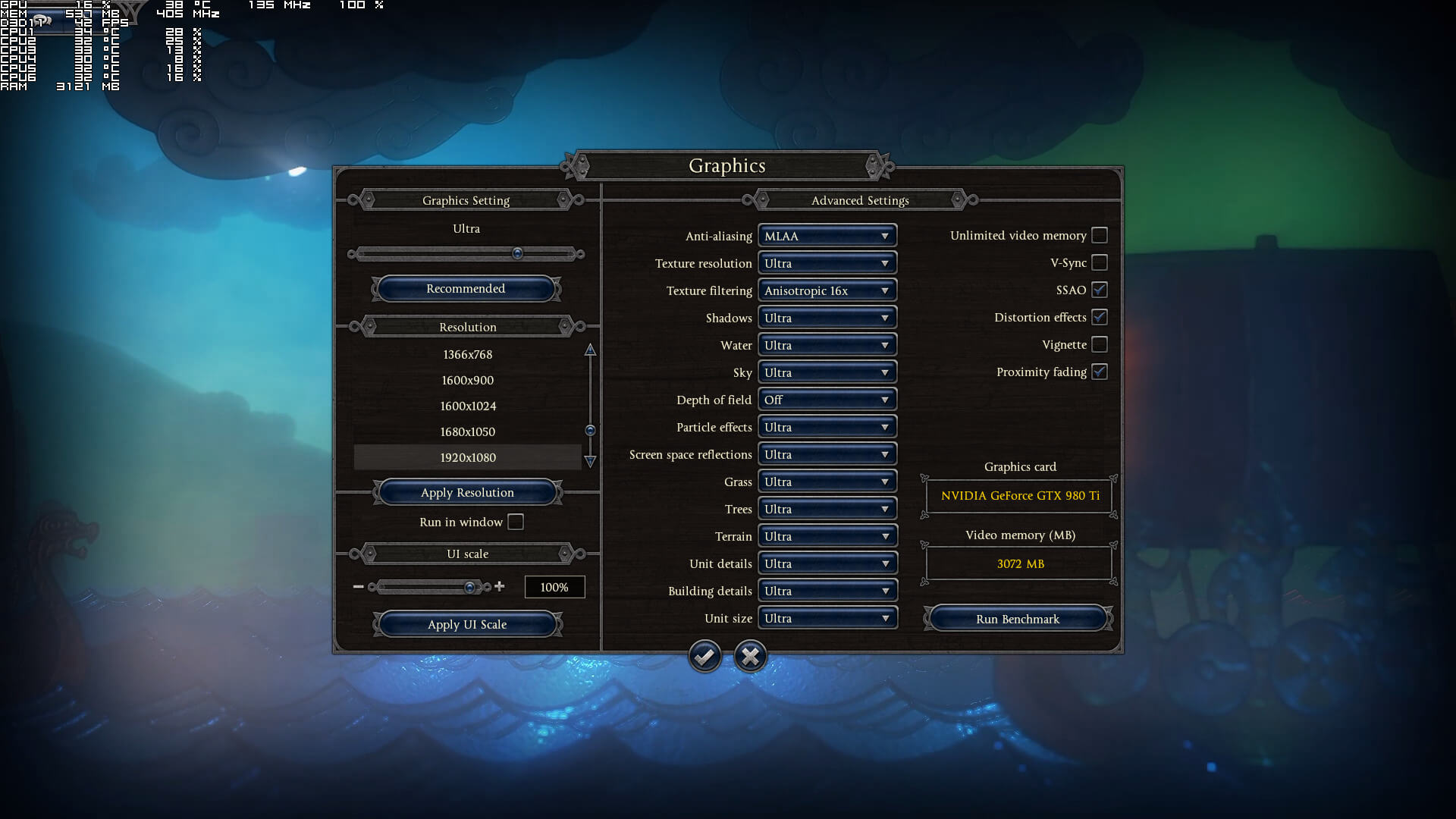

Creative Assembly has implemented lots of graphics settings to tweak. PC gamers can adjust the quality of anti-aliasing, textures, texture filtering, shadows, water, sky, particle effects, screen space reflections, grass, trees, terrain, unit details, building details and unit sizes.


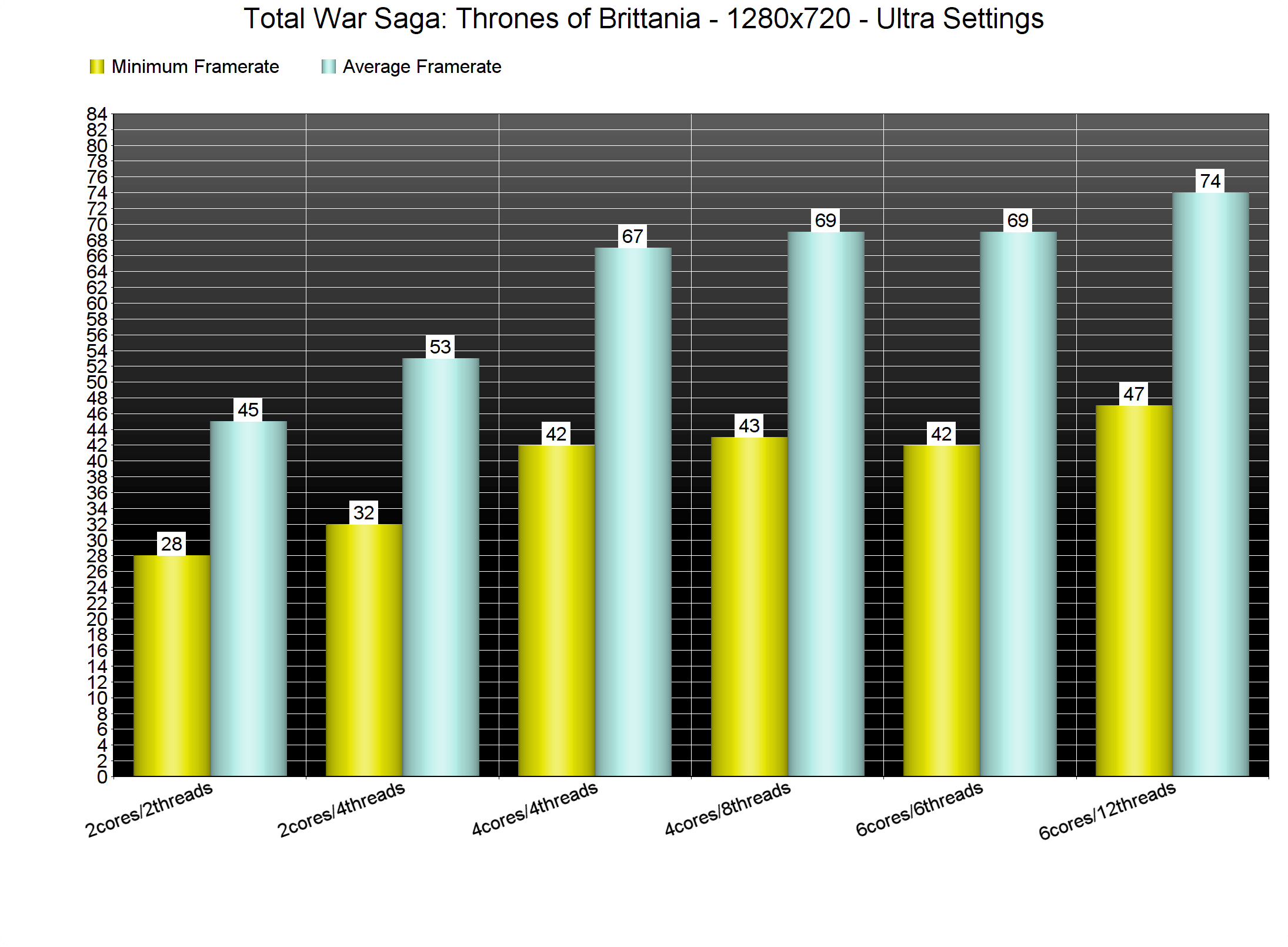

In order to find out how the game performs on a variety of CPUs, we simulated a dual-core and a quad-core CPU. For our CPU tests, we used our GTX980Ti, as AMD’s drivers bring an additional CPU overhead under DX11. Unfortunately, on Ultra settings, PC gamers will need a top-of-the-line CPU in order to enjoy a constant 60fps experience. Our Intel i7 4930K was unable to provide a smooth gaming experience, as there were drops below 50fps. Still, since this is a strategy game, a 30fps experience is acceptable, and all of our PC configurations were able to offer one (even our simulated dual-core system). We also noticed a slight performance boost when Hyper-Threading was enabled on our i7 4930K.

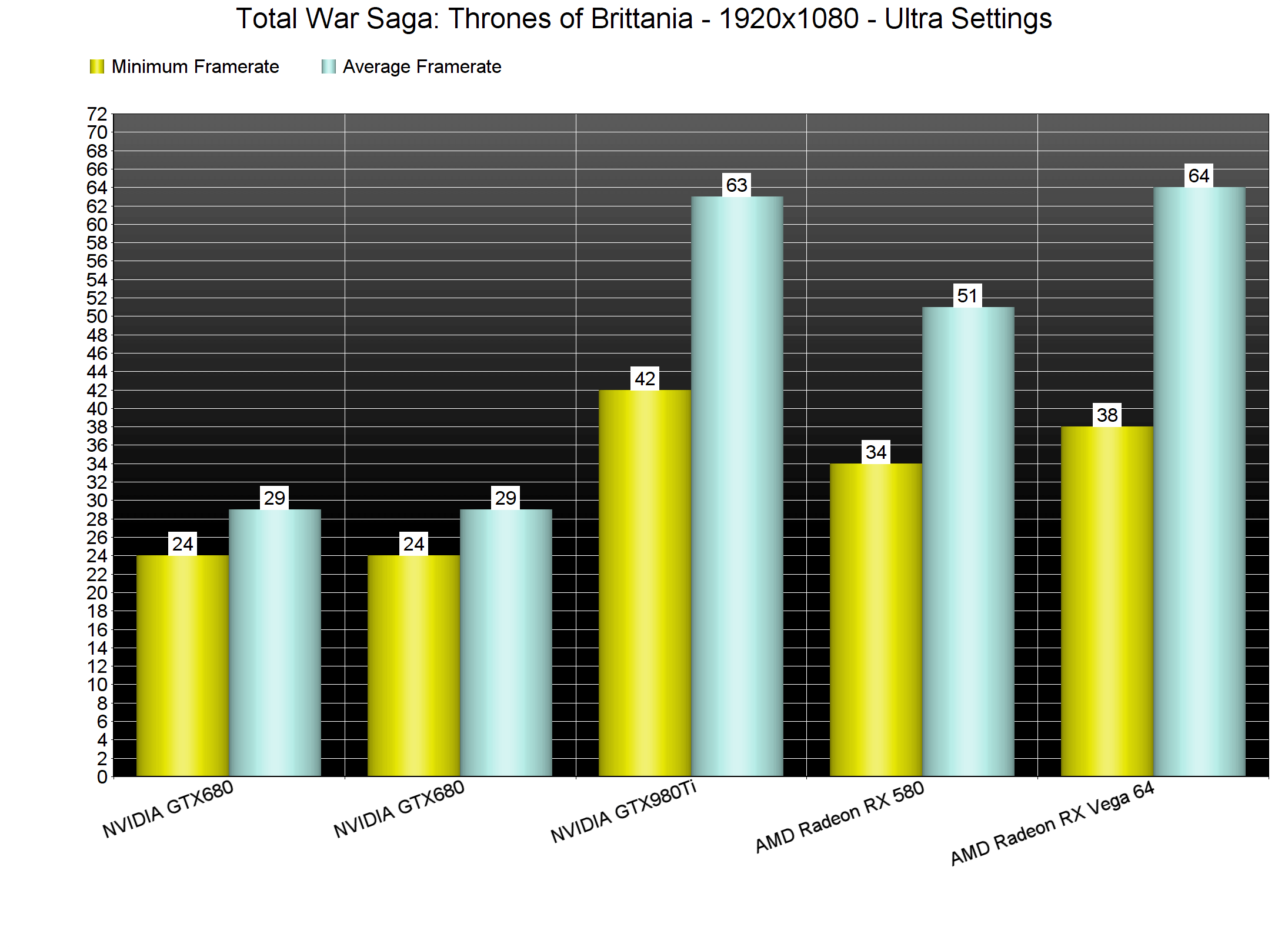

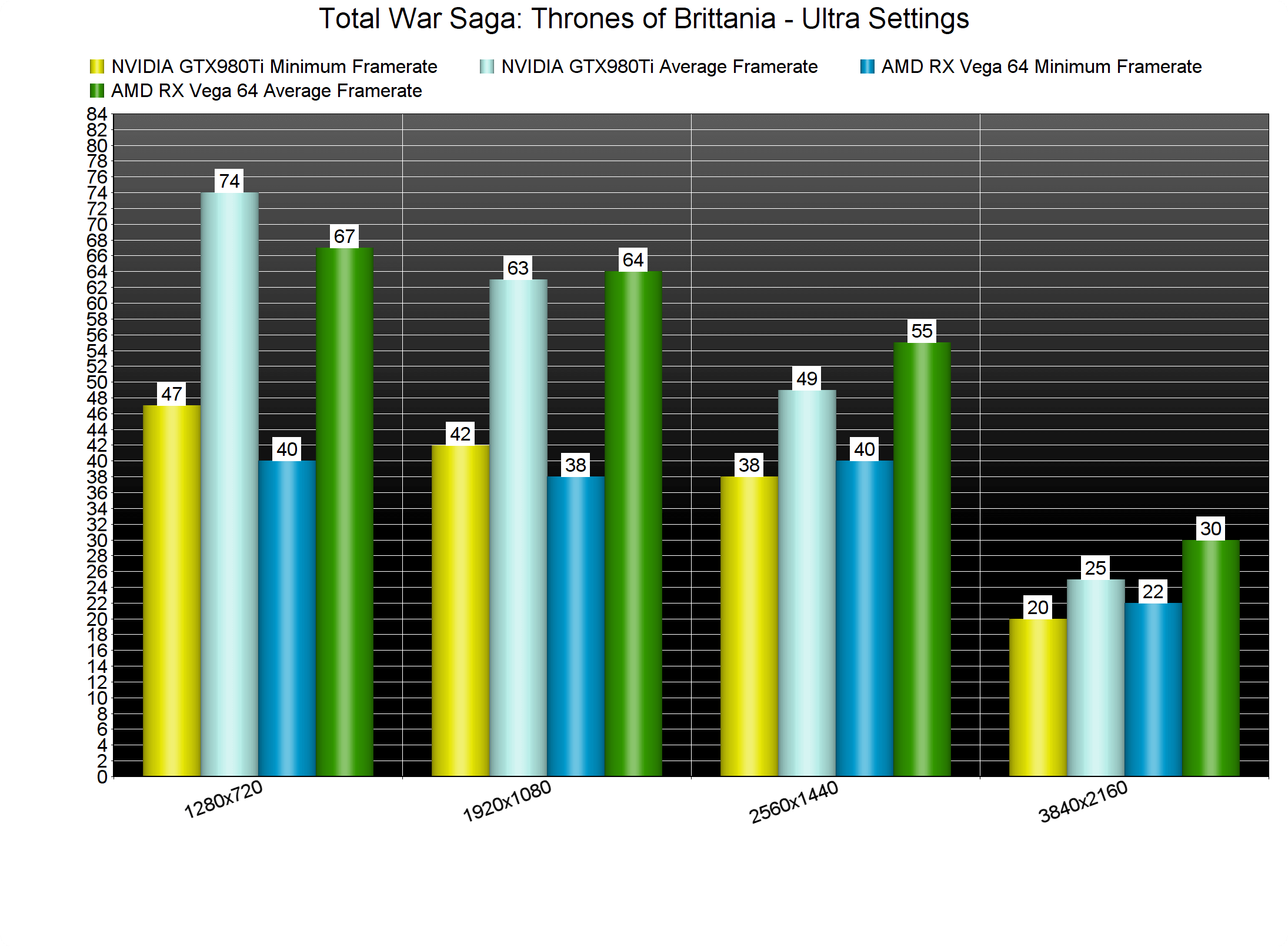

As we’ve already mentioned, AMD’s drivers bring an additional CPU overhead in most DX11 games. And unlike the previous Total War games, Total War Saga: Thrones of Britannia does not support DX12. As a result, at 1080p on Ultra settings, our AMD RX Vega 64 was almost as fast as the GTX980Ti; however, the GTX980Ti delivered higher minimum framerates. The RX580 ran the game’s benchmark with a minimum of 34fps and an average of 51fps. Our GTX690, on the other hand, was unable to offer a 30fps experience due to the lack of an SLI profile.

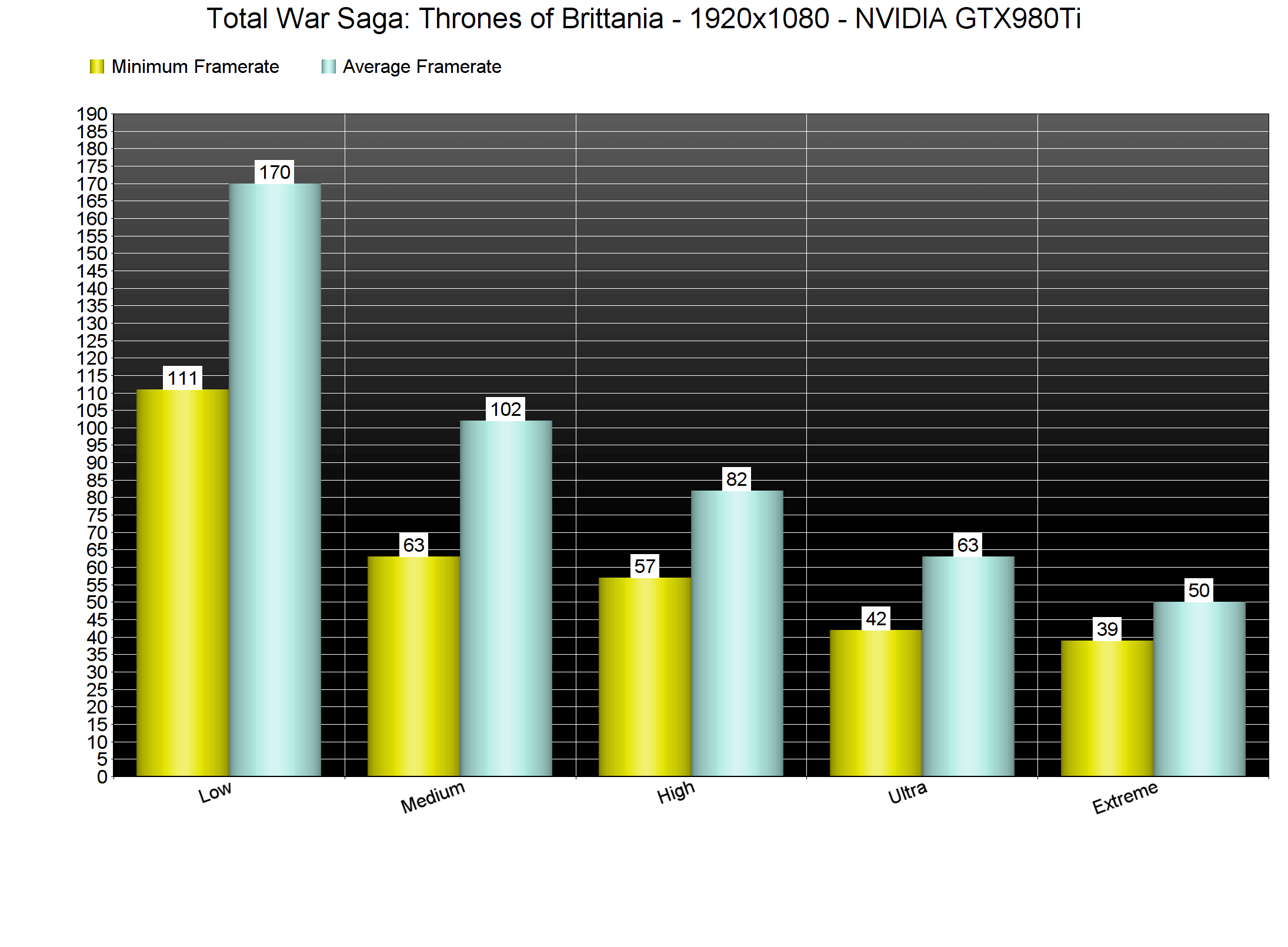

Total War Saga: Thrones of Britannia comes with five graphical presets: Low, Medium, High, Ultra and Extreme. Our GTX980Ti was able to offer a smooth gaming experience on Low, Medium and High. On Ultra settings, our minimum framerate dropped to 42fps, and on Extreme settings it dropped to 39fps.

For our resolution scaling results, we used both the AMD RX Vega 64 and the NVIDIA GTX980Ti, and the results were really interesting. As we can see, at lower resolutions (720p and 1080p) the GTX980Ti offers a better overall experience. However, once we raise the resolution to 1440p and 4K, the tables are turned and the AMD RX Vega 64 leads the charts. So while the AMD RX Vega 64 provides a smoother gaming experience at 1440p and above, NVIDIA’s GTX980Ti offers better minimum framerates at 1080p and lower resolutions.

In conclusion, Total War Saga: Thrones of Britannia can be played on a variety of PC systems. However, those seeking a constant 60fps experience will need CPUs with strong IPC, as the game seems unable to take advantage of more than four CPU cores/threads and relies heavily on a single core/thread. This has plagued all previous Total War games, so we are not really surprised by it. Still, several months after the release of Total War: Warhammer 2, we expected things to get better. Unfortunately, performance-wise, Total War Saga: Thrones of Britannia has not advanced at all.

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and strongly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved, and still loves, the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over from consoles, mainly thanks to 3DFX and its iconic dedicated 3D accelerator graphics card, the Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
