Gearbox has just released Gunfire’s third-person action-survival shooter, Remnant 2. The game is powered by Unreal Engine 5 and takes advantage of Nanite, so it’s time to examine its performance on PC.

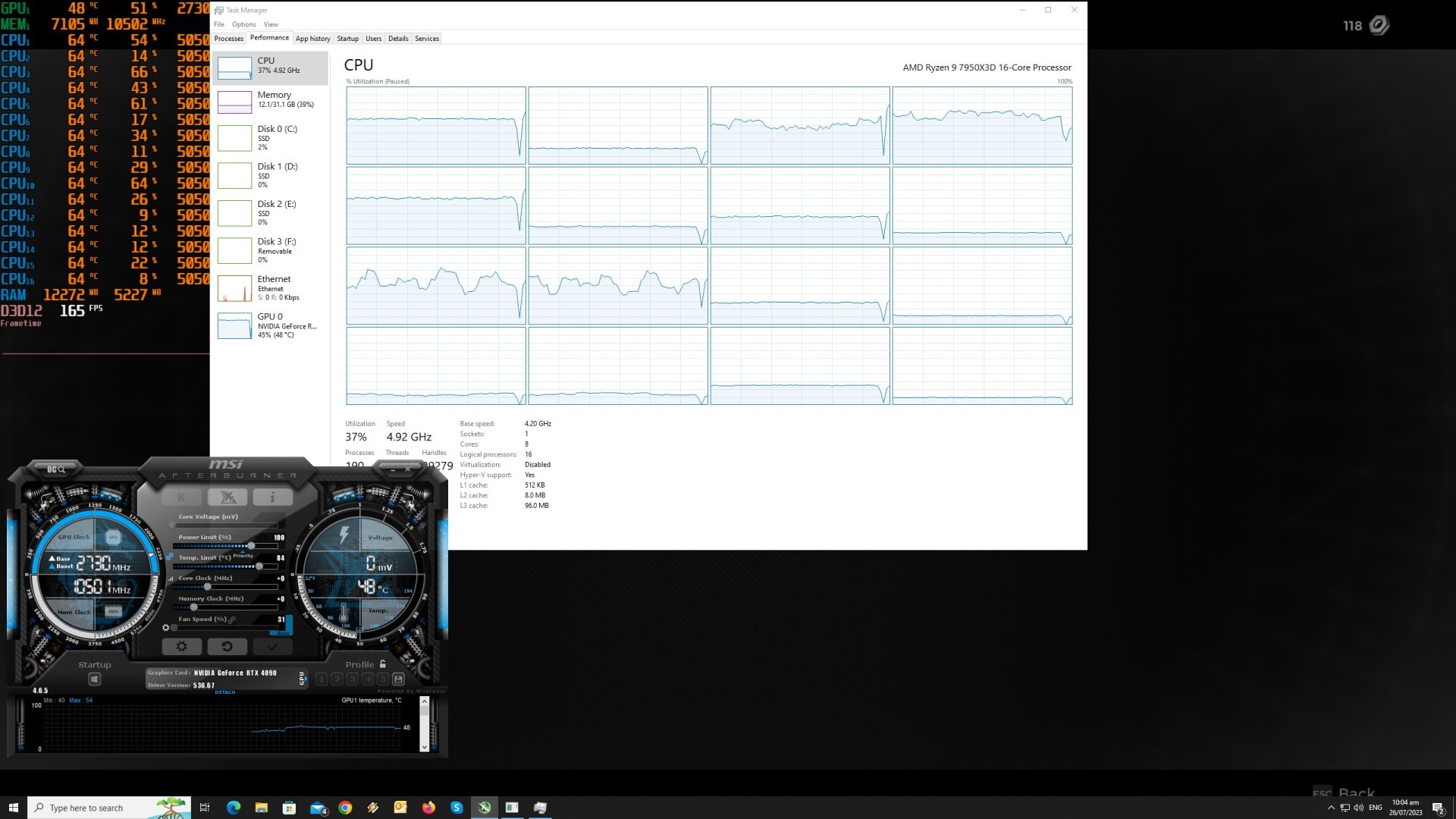

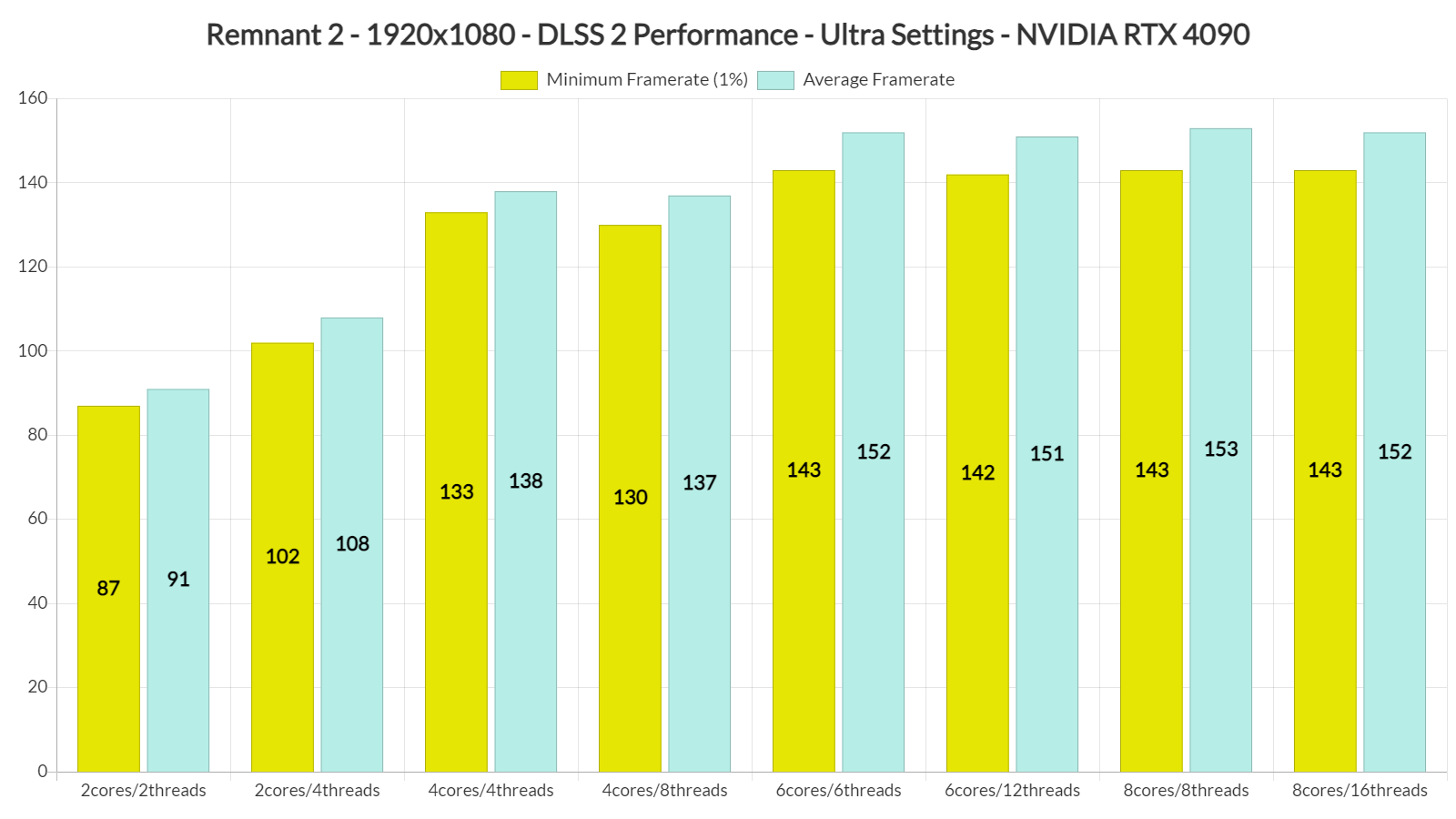

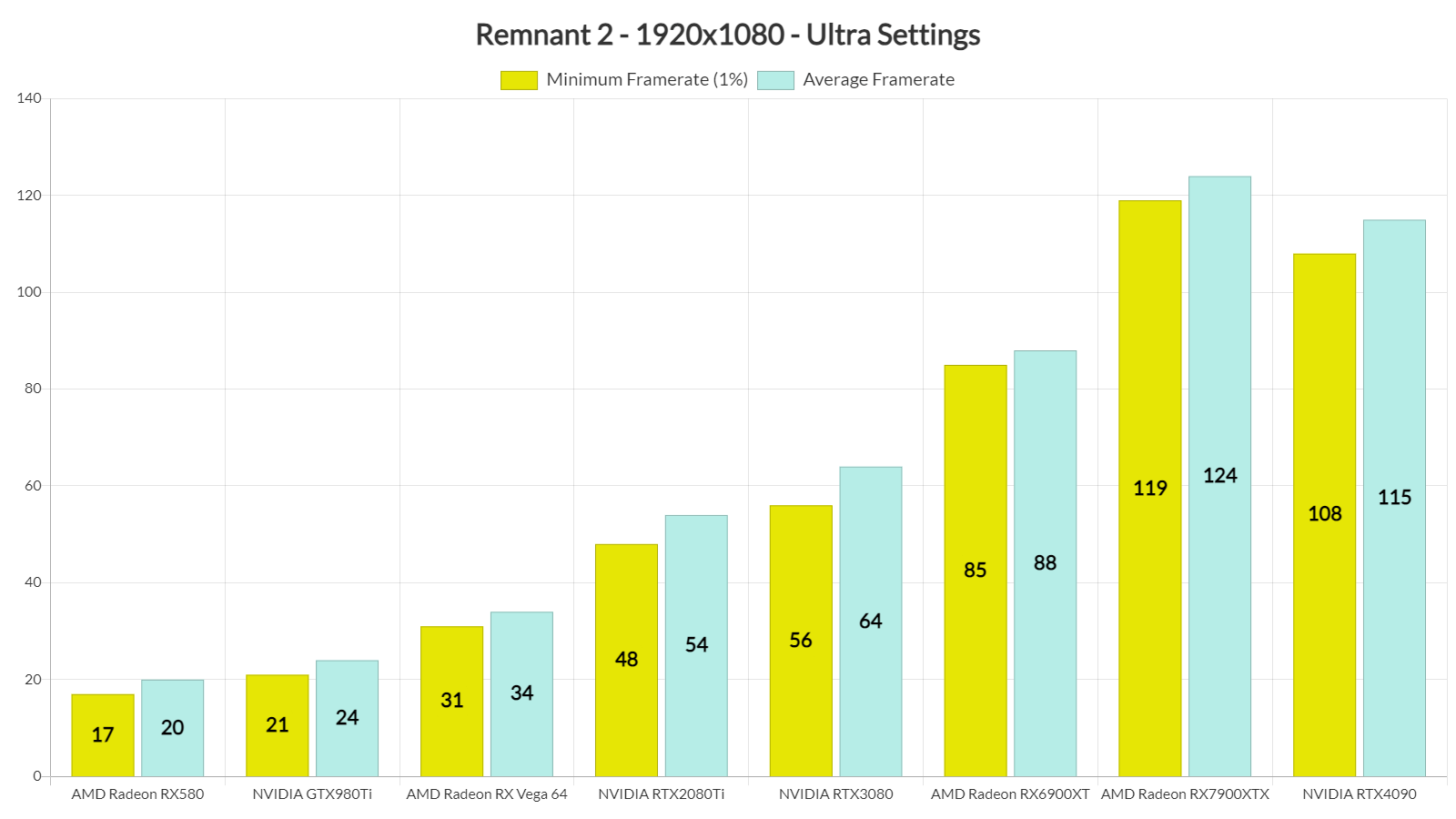

For our Remnant 2 PC Performance Analysis, we used an AMD Ryzen 9 7950X3D, 32GB of DDR5 at 6000MHz, AMD’s Radeon RX580, RX Vega 64, RX 6900XT, RX 7900XTX, NVIDIA’s GTX980Ti, RTX 2080Ti, RTX 3080 and RTX 4090. We also used Windows 10 64-bit, the GeForce 536.67 and the Radeon Software Adrenalin 2020 Edition 23.7.1 drivers. Moreover, we’ve disabled the second CCD on our 7950X3D.

Gunfire Games has added a few graphics settings to tweak. PC gamers can adjust the quality of Shadows, Post-Processing, Foliage, Effects and View Distance. The game also supports all of the major PC upscaling techniques: DLSS 2/3, FSR 2.0 and XeSS. Furthermore, Gunfire has included a FOV Modifier.

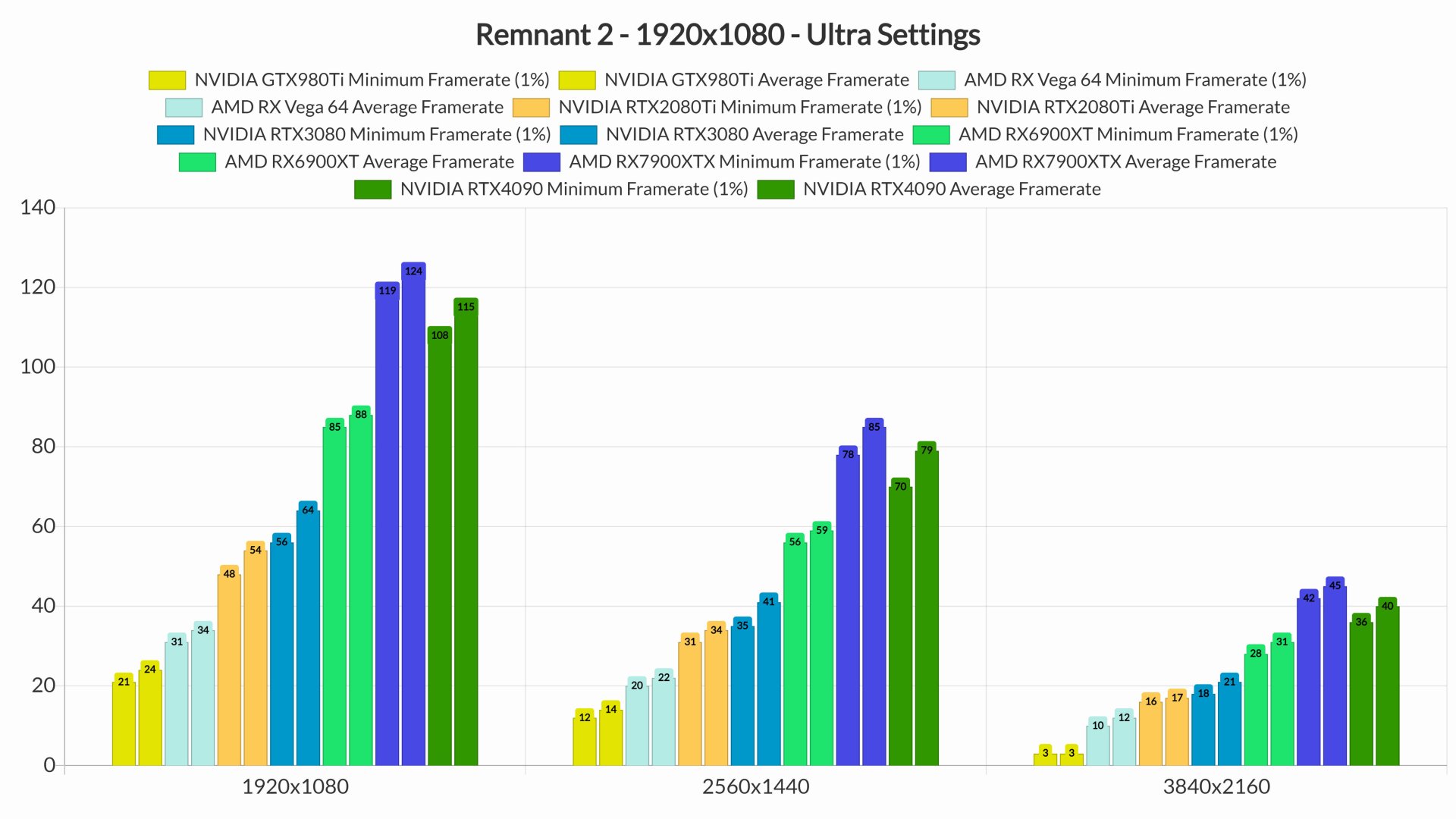

Remnant 2 does not feature any built-in benchmark tool. Thus, for both our CPU and GPU tests, we benchmarked the game’s central hub, Ward 13. This area features a lot of NPCs, making it ideal for our tests. For our CPU benchmarks, we also lowered our resolution to 1080p and enabled DLSS 2 Performance Mode (so that we could avoid any possible GPU bottlenecks).
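To put that CPU test setting in perspective, here is a rough sketch of how an upscaler preset translates into an internal render resolution. The per-axis scale factors below are the commonly published DLSS 2 defaults, and we are assuming Remnant 2 does not override them; the function names are our own and purely illustrative.

```python
# Per-axis render scale for common upscaler quality presets.
# Assumption: these are the widely published DLSS 2 defaults;
# an individual game may use different values.
PRESET_SCALE = {
    "Quality": 2 / 3,
    "Balanced": 0.58,
    "Performance": 0.5,
    "Ultra Performance": 1 / 3,
}


def internal_resolution(width, height, preset):
    """Return the (width, height) that is rendered before upscaling."""
    scale = PRESET_SCALE[preset]
    return round(width * scale), round(height * scale)


def pixel_fraction(width, height, preset):
    """Return the share of output pixels that is actually rendered."""
    w, h = internal_resolution(width, height, preset)
    return (w * h) / (width * height)


if __name__ == "__main__":
    for preset in PRESET_SCALE:
        w, h = internal_resolution(1920, 1080, preset)
        print(f"1080p {preset}: {w}x{h}")
```

Under these assumptions, 1080p with Performance Mode renders internally at 960x540, which is a quarter of the output pixels. That is why this setting is a convenient way to take the GPU out of the equation for CPU tests.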

In order to find out how the game scales on multiple CPU threads, we simulated a dual-core, a quad-core and a hexa-core CPU. And, similarly to Layers of Fear, Remnant 2 does not require a high-end CPU. As we can see, even our simulated dual-core system was able to provide framerates higher than 80fps at 1080p/DLSS 2 Performance/Ultra Settings.

Unreal Engine 5’s Nanite can improve overall CPU performance, making the game run smoother on a wide range of CPU configurations. By taking advantage of Nanite, this game was silky smooth, without any noticeable traversal stutters. So, not only does Nanite eliminate geometry pop-ins, but it also reduces traversal stutters. What’s also interesting here is that you can disable Nanite when setting the in-game graphics options to Low. And, on Low settings, the game suddenly suffers from major traversal stutters. We were able to replicate this numerous times so yes, Nanite will play a major part in reducing traversal stutters in future UE5 games.

Now while Remnant 2 does not need a high-end CPU, it certainly requires a powerful GPU. At native 1080p/Ultra Settings, the only GPUs that could run the game at 60fps were the AMD RX6900XT, AMD RX7900XTX and the NVIDIA RTX4090. Our NVIDIA RTX3080 came close to a 60fps experience, though there were drops to the mid-50s.

It’s also worth noting that Remnant 2 performs incredibly well on AMD’s GPUs. In fact, the AMD RX7900XTX can beat the NVIDIA RTX4090 at all native resolutions. We’ve already informed NVIDIA about this, so hopefully they will be able to improve performance via future drivers.

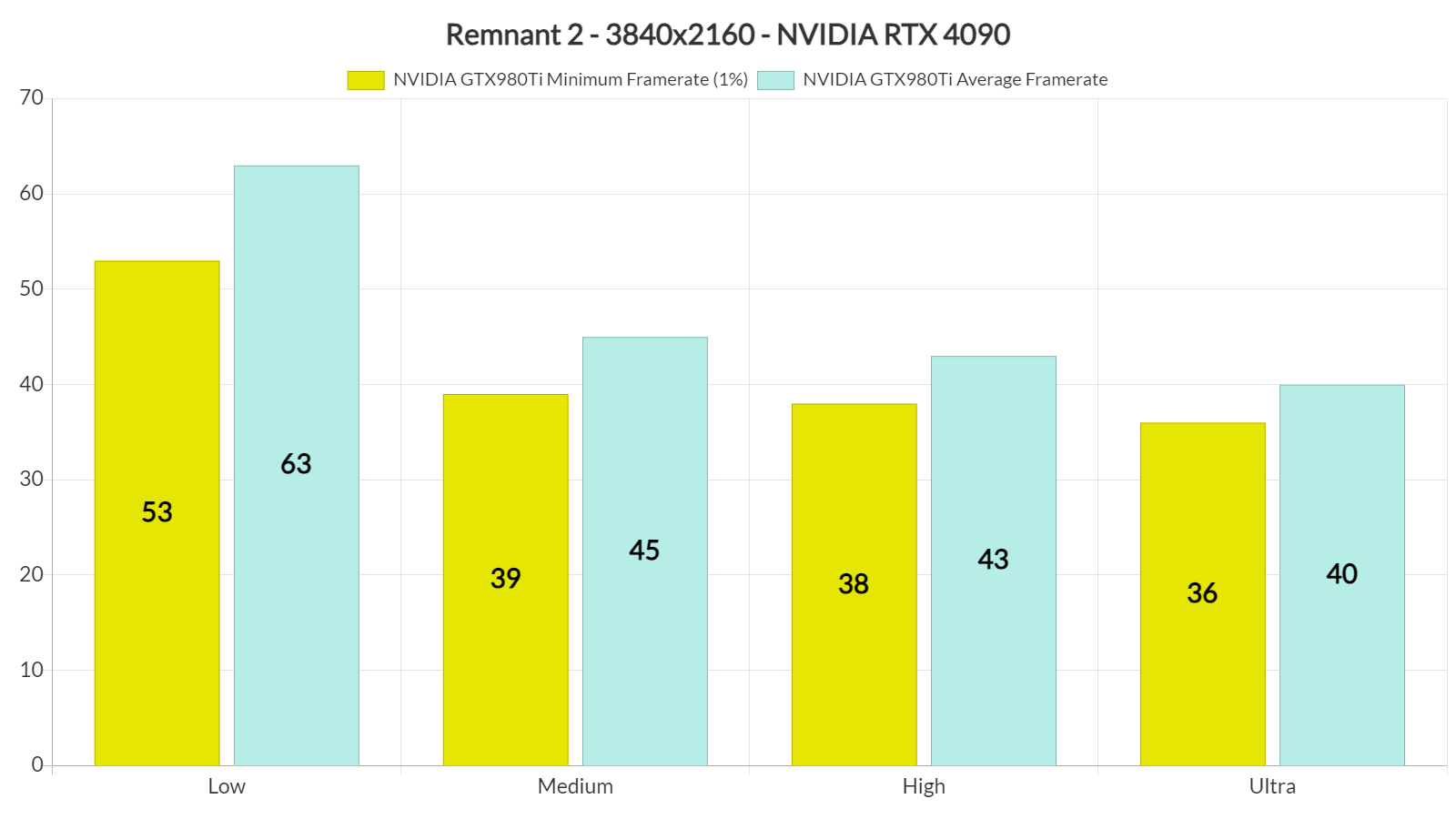

At native 1440p/Ultra Settings, the only GPUs that could offer a 60fps experience were the AMD Radeon RX 7900XTX and the NVIDIA RTX 4090. And as for native 4K/Ultra, there wasn’t any GPU that could offer a smooth gaming experience.

But what about scalability? Well, the game was running with the exact same framerates at Ultra, High and Medium on our RTX 4090. At first glance, you’d assume that this was due to a CPU bottleneck. However, even at Medium Settings, our RTX 4090 was used to its fullest. We don’t know what is going on here. However, we are not the only ones witnessing this odd behavior.

Graphics-wise, Remnant 2 looks great but it does not justify its ridiculously high GPU requirements. The game has a lot of high-quality textures, and thanks to Nanite, there isn’t any object, NPC or grass pop-in. The game also has some amazing lighting/global illumination effects in some areas. However, its AO solution is not that great. Contrary to other games, in Remnant 2, SSAO completely disappears from the edges of the screen while panning the camera. Gunfire needs to fix this (or add support for RTAO).

All in all, Remnant 2 is a mixed bag. On one hand, we have a game that runs on a wide range of CPUs, takes advantage of Nanite, and does not have any shader compilation or traversal stutters. On the other hand, though, the game can stress even the highest-end graphics cards, despite the fact that it does not use any ray-tracing effects.

Thankfully, PC gamers can enable upscaling techniques in order to improve overall performance. And for those wondering, the performance of Remnant 2 on current-gen consoles is not that great either. Unless, of course, you’re fine with a game being rendered at 794p. So, if you have the hardware, Remnant 2 can be fully enjoyed on PC via DLSS, FSR or XeSS. However, Gunfire should not be using these upscalers as a crutch. NVIDIA should also improve the game’s performance on its hardware.

Here is hoping that Gunfire will improve the performance of Remnant 2 via future updates!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
