After a lot of controversy surrounding its micro-transactions, Middle-earth: Shadow of War has been released on the PC. The game uses the Denuvo anti-tamper tech and is powered by Monolith’s in-house Firebird Engine. As such, it’s time now to see how this title performs on the PC.

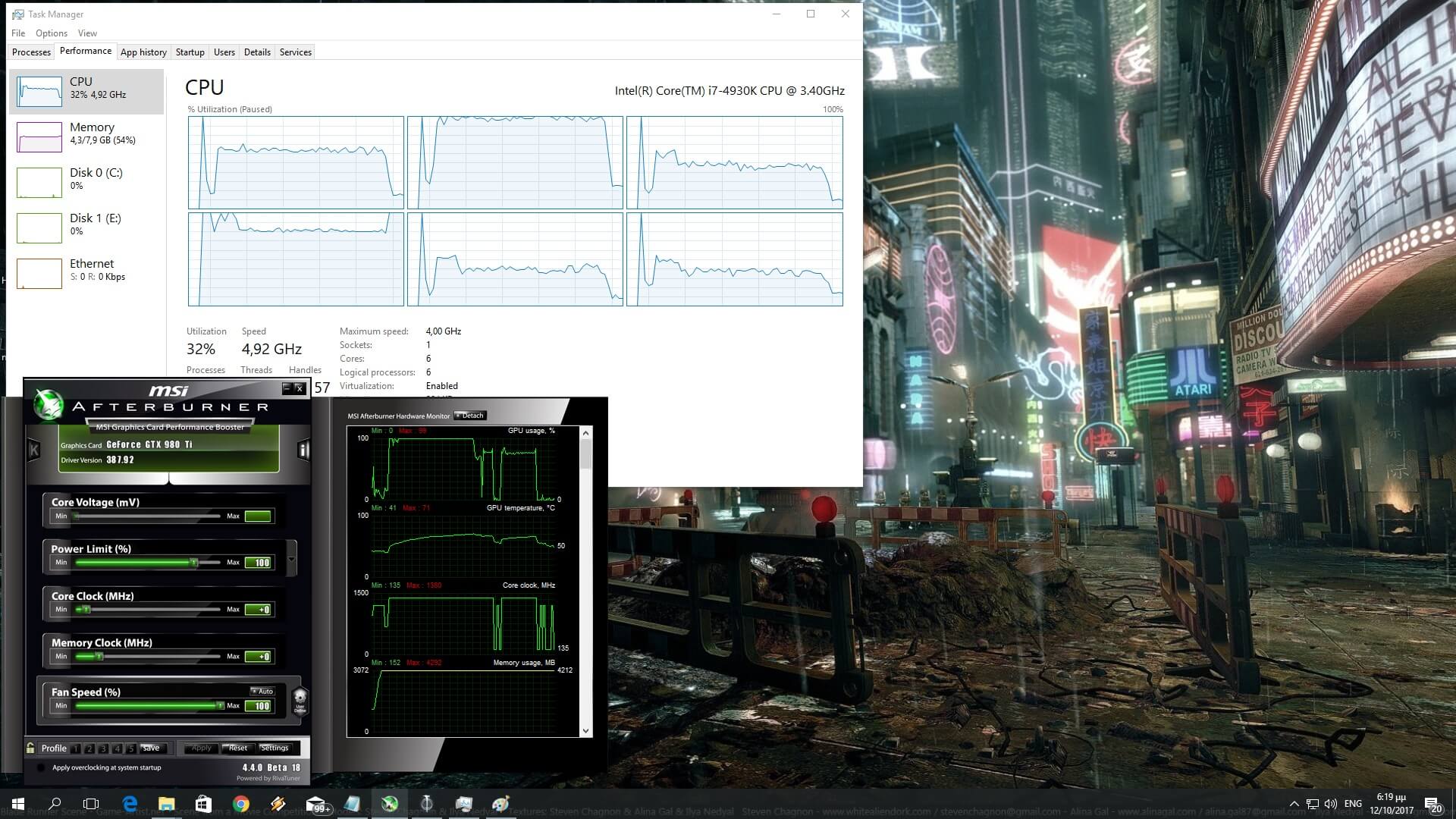

For this PC Performance Analysis, we used an Intel i7 4930K (overclocked to 4.2GHz) with 8GB RAM, AMD’s Radeon RX580, NVIDIA’s GTX980Ti and GTX690, Windows 10 64-bit and the latest version of the GeForce and Catalyst drivers. Thankfully, NVIDIA has included an SLI profile for this title. This means that those with such systems won’t have to mess around with third-party tools in order to enable it.

Monolith has included a nice amount of graphics settings. PC gamers can adjust the quality of Lighting, Meshes, Anti-Aliasing, Shadows, Texture Filtering, Textures, Ambient Occlusion and Vegetation Range. Players can also enable/disable Motion Blur, Depth of Field, Tessellation and Large Page Mode.


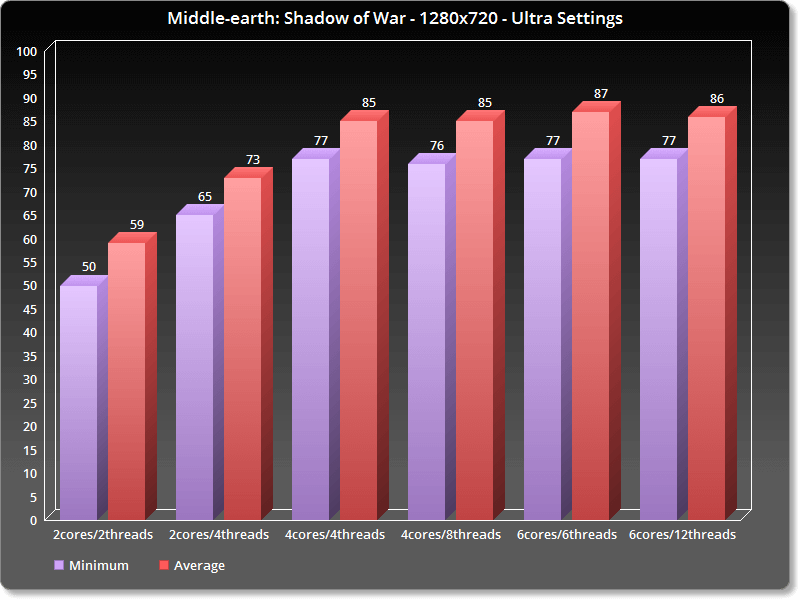

In order to find out how the game performs on a variety of CPUs, we simulated a dual-core and a quad-core CPU. For our CPU tests, we chose a highly populated area of the first chapter, and we dropped our resolution to 720p. In case you’re wondering, we’ve done this so we can eliminate any possible GPU limitation during this scenario. And Middle-earth: Shadow of War appears to be suffering from CPU scaling issues. As we can see, only two of our six CPU cores were stressed while running this game.

Due to the game’s inability to scale across multiple CPU cores, we were CPU limited on pretty much all systems. Despite that, Middle-earth: Shadow of War does not require a high-end CPU for 60fps, provided that your CPU has good IPC (instructions per clock) performance. We’ve heard some complaints from Ryzen owners, and there is a high chance they are CPU limited due to these scaling issues.

Our simulated dual-core system was able to offer an almost enjoyable experience. With Hyper Threading disabled, our simulated dual-core pushed an average of 61fps and a minimum of 50fps. With Hyper Threading enabled, our performance increased and we were able to get a constant 60fps experience on Ultra settings at 1080p. On the other hand, our six-core and our simulated quad-core systems performed similarly, suggesting that the game does not take advantage of more than four CPU cores/threads.

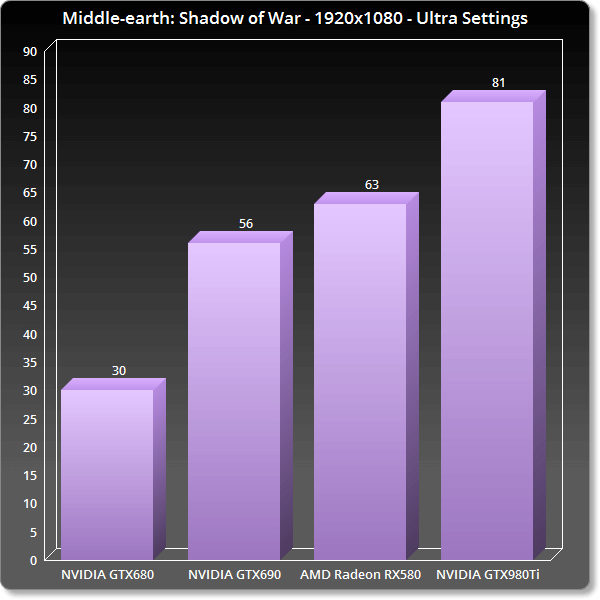

Regarding its GPU requirements, our AMD Radeon RX580 was unable to offer a smooth gaming experience on Ultra settings at 1080p. While it was able to run the built-in benchmark with an average of 63fps, the framerate dropped to 40fps during some scenes. Surprisingly enough, there were also some framerate drops on our NVIDIA GTX980Ti. NVIDIA’s GPU was able to push an average of 81fps; however, we did notice some drops to 52fps. Do note that the first two chapters of the game performed better. Our GTX980Ti ran the first two chapters with a minimum of 70fps. As such, this benchmark may be a stress test or it may reflect the performance of later stages.
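For readers who want to reproduce average and minimum figures like the ones above from their own frame-time logs, here is a minimal Python sketch. It is not the method used by the built-in benchmark or by our capture tools; the function name `summarize_frame_times` and the sample frame times are hypothetical and only illustrate the arithmetic.

```python
def summarize_frame_times(frame_times_ms):
    """Return (average_fps, minimum_fps) for per-frame times given in milliseconds."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    # Average fps is the number of frames divided by the total elapsed time.
    total_seconds = sum(frame_times_ms) / 1000.0
    average_fps = len(frame_times_ms) / total_seconds
    # Minimum fps corresponds to the single slowest frame.
    minimum_fps = 1000.0 / max(frame_times_ms)
    return average_fps, minimum_fps


# Hypothetical frame times (ms), NOT measurements from the game.
sample = [12.5, 12.5, 12.5, 25.0, 12.5]
avg, low = summarize_frame_times(sample)
print(f"average: {avg:.1f}fps, minimum: {low:.1f}fps")
```

In this made-up sample, a single 25ms frame is enough to pull the minimum down to 40fps while the average stays at around 67fps, which is why an average on its own can hide noticeable drops.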

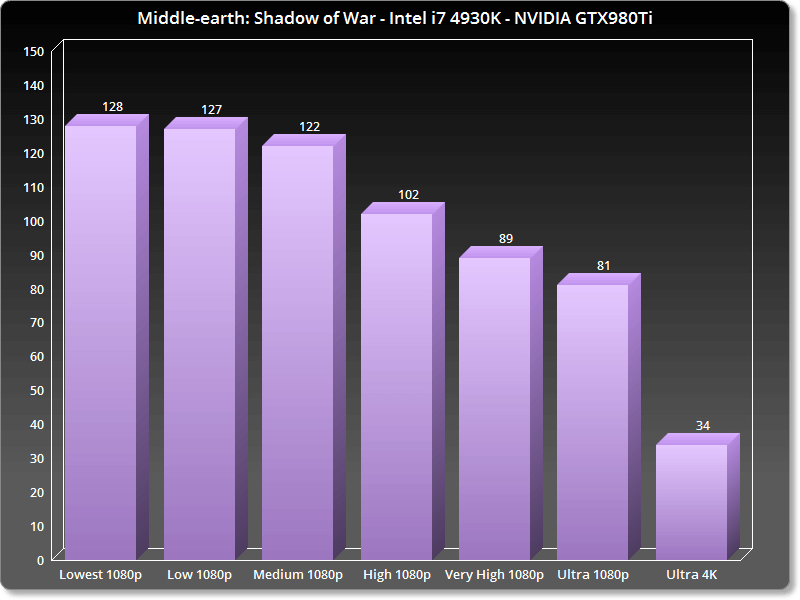

Middle-earth: Shadow of War comes with six presets. These are: Lowest, Low, Medium, High, Very High and Ultra. Given the number of presets, we were expecting the game to be scalable on older hardware. However, the performance gain between Ultra and Lowest is only 47fps. Not only that, but the game looks horrendous even on Medium settings. So yeah, we were expecting better performance on lower settings than what we’re getting.

Monolith has also released a 4K High Resolution Texture Pack. This pack requires more than 8GB of VRAM, so you’d normally think that there would be a huge difference in quality between High and Ultra. However, there is almost no difference between them. Below you can find some comparison screenshots between High (left) and Ultra (right) textures. Can you spot the differences?

Graphics-wise, Middle-earth: Shadow of War looks great. Most characters are highly detailed, the Orcs look great, and the environments are cool. We did notice some pop-ins of shadows and objects, but let’s not forget that this is a game with some really huge environments. As such, we can’t expect LOD values similar to those of games with more linear levels. Still, it would have been great if Monolith had pushed the boundaries of some settings (like LOD, lighting, tessellation) even more with its Ultra settings. So while Middle-earth: Shadow of War is not as visually spectacular as The Witcher 3, it still looks great.

All in all, Middle-earth: Shadow of War performs quite well on the PC. While the game uses the Denuvo anti-tamper tech, we did not notice any stuttering issues (or any other side effects). The game also works great with the mouse+keyboard. Like most triple-A releases, the game allows players to rebind most keys. There are also proper on-screen keyboard indicators. Performance on the lower settings could have been better, and owners of CPUs with low IPC performance may encounter performance issues. There is definitely room for improvement here, no doubt about that. However, we believe that the majority of PC gamers won’t encounter many performance issues with it.

Enjoy!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
