Fallout 4 is a highly anticipated game that will, undoubtedly, be a huge commercial success. Powered by an enhanced version of the Creation Engine, Fallout 4 supports new graphical features and looks better than Skyrim or Fallout 3/New Vegas (vanilla versions). The game has just been released on the PC and it’s time to see how this title performs on the PC platform.

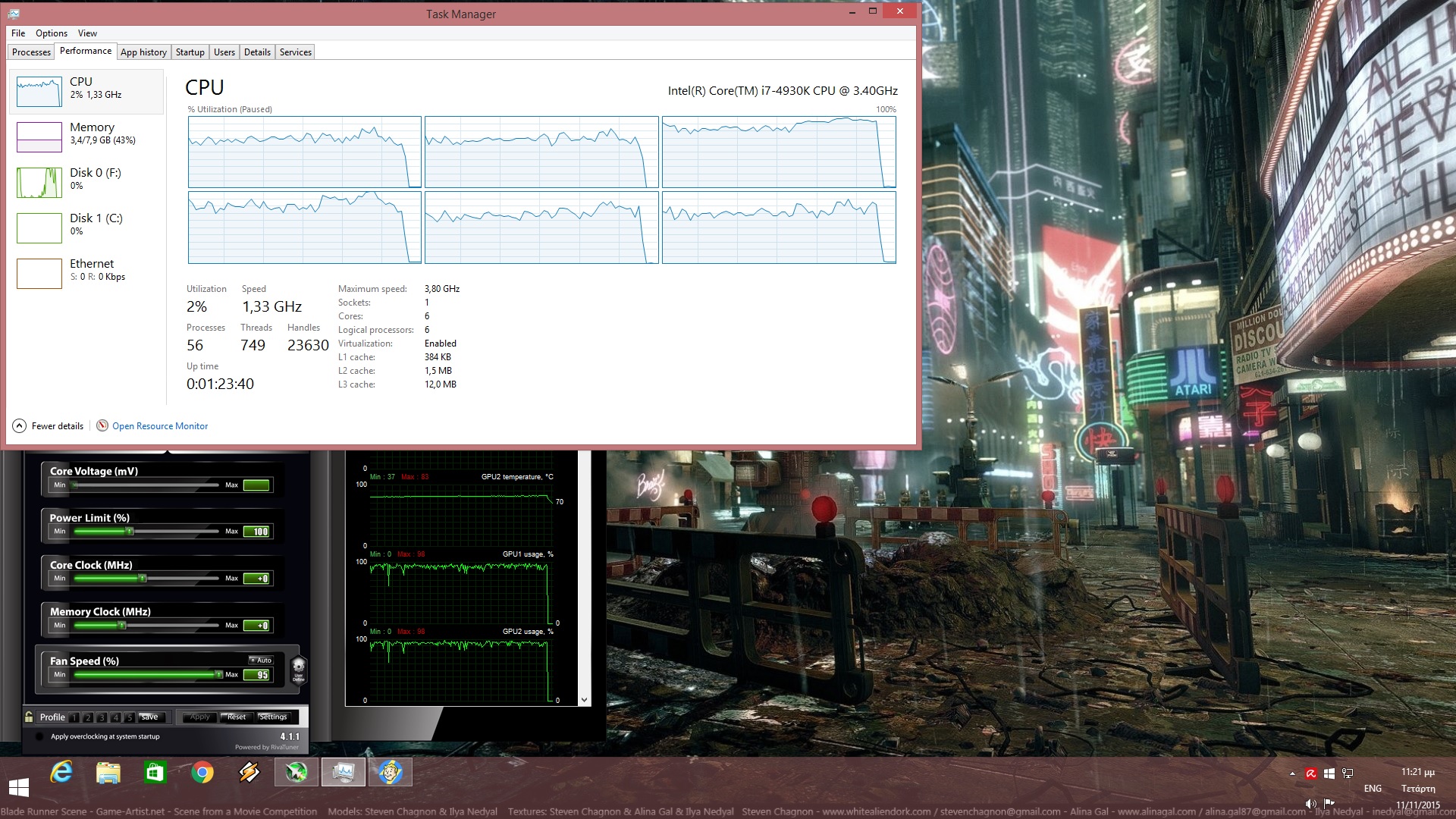

As always, we used an Intel i7 4930K (turbo boosted at 4.0GHz) with 8GB RAM, NVIDIA’s GTX690, Windows 8.1 64-bit and the latest WHQL version of the GeForce drivers. NVIDIA has not yet included an SLI profile for this title; however, PC gamers can quite easily enable it. All you have to do is head over to NVIDIA’s Control Panel, find the game, and force “alternate frame rendering 2”. While this is still not a perfect solution, SLI scaling is great for the most part. As you can see below, there are scenes in which SLI scaling drops to 60-70%, so here is hoping that NVIDIA will release an official SLI profile in the next few days/weeks.

Despite the fact that we’re dealing with an open-world title, Fallout 4 is friendly to older CPUs and requires a high-end GPU in order to shine. Bethesda did incredible work overhauling Creation’s multi-threading capabilities and, as a result, the game scales on more than four CPU cores. With Hyper-Threading enabled, we witnessed even greater scaling to twelve threads, so kudos to the team for improving the most annoying performance issue of Skyrim.

We are also happy to report that the game performs exceptionally well even on modern-day dual-core CPUs. Fallout 4 ran at an almost constant 60fps on our simulated dual-core system. There were minor drops to 40-50fps, but that’s nothing to really complain about (for those gaming on such old CPUs). Ironically enough, Fallout 4 is the third open-world title that does not really require a high-end CPU (after Mad Max and Metal Gear Solid V: The Phantom Pain). Whether this has anything to do with the underwhelming raw CPU power of current-gen consoles remains a mystery. Still, this is good news for all owners of older CPUs as they will be able to upgrade to high-end GPUs without the fear of bottlenecking them.

As we’ve already said, Fallout 4 is a “GPU bound” title. With SLI disabled, a single GTX680 was simply unable to offer a constant 60fps experience at 1080p with Ultra settings. In order to hit that mark, we had to lower our settings to Medium. Those who can deal with various drops to 50fps can enable High settings (but switch their AA option from TAA to FXAA). With SLI enabled, the game ran without major performance issues, though as we already said there were some scenes in which SLI scaling was not as good as we had hoped.
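For those who want to work out SLI scaling from their own benchmark runs, here is a minimal Python sketch. It assumes that "scaling" means the extra framerate gained from the second GPU, expressed as a percentage of the single-GPU framerate; the function name and the sample readings are hypothetical and are not measurements from our tests.

```python
def sli_scaling_percent(single_gpu_fps: float, dual_gpu_fps: float) -> float:
    """Extra performance from the second GPU, as a percentage of single-GPU fps.

    Assumed definition: 100% means the second GPU doubled the framerate,
    0% means it added nothing.
    """
    if single_gpu_fps <= 0:
        raise ValueError("single_gpu_fps must be positive")
    return (dual_gpu_fps / single_gpu_fps - 1.0) * 100.0


if __name__ == "__main__":
    # Hypothetical readings for one scene (not taken from this article).
    single = 40.0
    dual = 66.0
    print(f"SLI scaling: {sli_scaling_percent(single, dual):.0f}%")
```

Under this definition, a scene that runs at 40fps on one GPU and 66fps in SLI lands in the 60-70% range mentioned earlier.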

As you may already know, Fallout 4 is locked at 60fps (unless you disable Vsync via NVIDIA’s Control Panel; thanks, Sean). And since Bethesda has tied the game’s engine speed to its framerate, we highly recommend leaving that cap in place. When we unlocked the game’s framerate, we ran into some really weird physics bugs and major syncing issues during dialogues, lock picking became really sensitive and ultra fast, and we got stuck/locked after using terminals. Yes, a higher framerate would be welcome but let’s be honest here; a constant 60fps is not that bad.
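To illustrate why tying engine speed to framerate causes this kind of behaviour, here is a toy Python sketch. It is not Bethesda’s code and makes no claim about how the Creation Engine is actually written; it simply assumes a loop that advances game time by a fixed 1/60 of a second per rendered frame, and compares it with a loop that uses the real frame duration. All function names are hypothetical.

```python
def simulate_frame_tied(fps: int, real_seconds: float,
                        step_per_frame: float = 1.0 / 60.0) -> float:
    """Game time elapsed when every rendered frame advances the simulation
    by a fixed step (assumed here to be tuned for 60fps)."""
    frames = int(fps * real_seconds)
    game_time = 0.0
    for _ in range(frames):
        game_time += step_per_frame  # same step regardless of real frame time
    return game_time


def simulate_delta_time(fps: int, real_seconds: float) -> float:
    """Game time elapsed when each frame advances the simulation by the
    real duration of that frame."""
    frames = int(fps * real_seconds)
    dt = 1.0 / fps
    game_time = 0.0
    for _ in range(frames):
        game_time += dt  # step shrinks as the framerate rises
    return game_time


if __name__ == "__main__":
    for fps in (60, 120):
        tied = simulate_frame_tied(fps, 10.0)
        scaled = simulate_delta_time(fps, 10.0)
        print(f"{fps}fps: frame-tied={tied:.1f}s, delta-time={scaled:.1f}s")
```

In this toy model, ten real seconds at 120fps advance the frame-tied simulation by roughly twenty seconds of game time, which is the sort of speed-up that would make lock picking feel ultra fast.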

Bethesda has included proper on-screen keyboard indicators and PC gamers can navigate all menus with the mouse. However, there is no option to turn off the game’s annoying mouse acceleration or change its FOV. Thankfully, as we’ve already reported, there are workarounds for these issues. Still, some proper in-game options would be nice. Oh, and there are no in-game graphics options. This means that in order to find the ideal settings for your own PC system, you will have to completely exit the game, tweak its graphics options, and re-launch it.

Graphics-wise, Fallout 4 looks way better than what we all initially thought. It does not come close to the visuals of The Witcher 3: Wild Hunt, but it’s far from being an “old-gen” title. Bethesda has implemented physically based rendering, volumetric lighting, screen space ambient occlusion and screen space reflections, as well as a new cloth simulation system. The end result is pleasing to the eye, though there are some shortcomings here and there. For instance, there is noticeable pop-in even with Ultra settings enabled. Moreover, the difference between Medium and Ultra textures is minimal. And the dog looks awful compared to the wolves of The Witcher 3: Wild Hunt or to DD of Metal Gear Solid V: The Phantom Pain.

All in all, Fallout 4 is a nice surprise. While the game does not sport the best visuals we’ve ever seen in an open-world title, it – more or less – does its job. Fallout 4 does not require a high-end CPU even though it’s an open-world game and scales incredibly well on multiple CPU cores. Bethesda has implemented a lot of modern-day graphical techniques and since the game is open to mods, we expect to see some really incredible things in the next couple of months.

There are some minor path-finding issues and it’s really annoying that there is a 60fps cap. However, the PC version of Fallout 4 is stable and more polished than Skyrim, and it was released in a better state. So well done Bethesda, though let’s hope that you will release some patches to address the minor issues currently affecting the PC version.


Enjoy!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved, and still loves, the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
