Last week, THQ Nordic released Destroy All Humans! 2 – Reprobed on PC. The game uses Unreal Engine 4 and supports both NVIDIA’s DLSS and AMD’s FSR 2.0 upscaling techniques. So, ahead of our PC Performance Analysis, we’ve decided to benchmark and compare them.

For these benchmarks and comparison screenshots, we used an Intel i9 9900K with 16GB of DDR4 at 3800MHz and NVIDIA’s RTX 3080. We also used Windows 10 64-bit and the GeForce 516.94 driver.

Destroy All Humans! 2 – Reprobed does not feature a built-in benchmark tool. Thus, we’ve decided to benchmark the first mission, which features numerous explosions and a respectable number of NPCs.

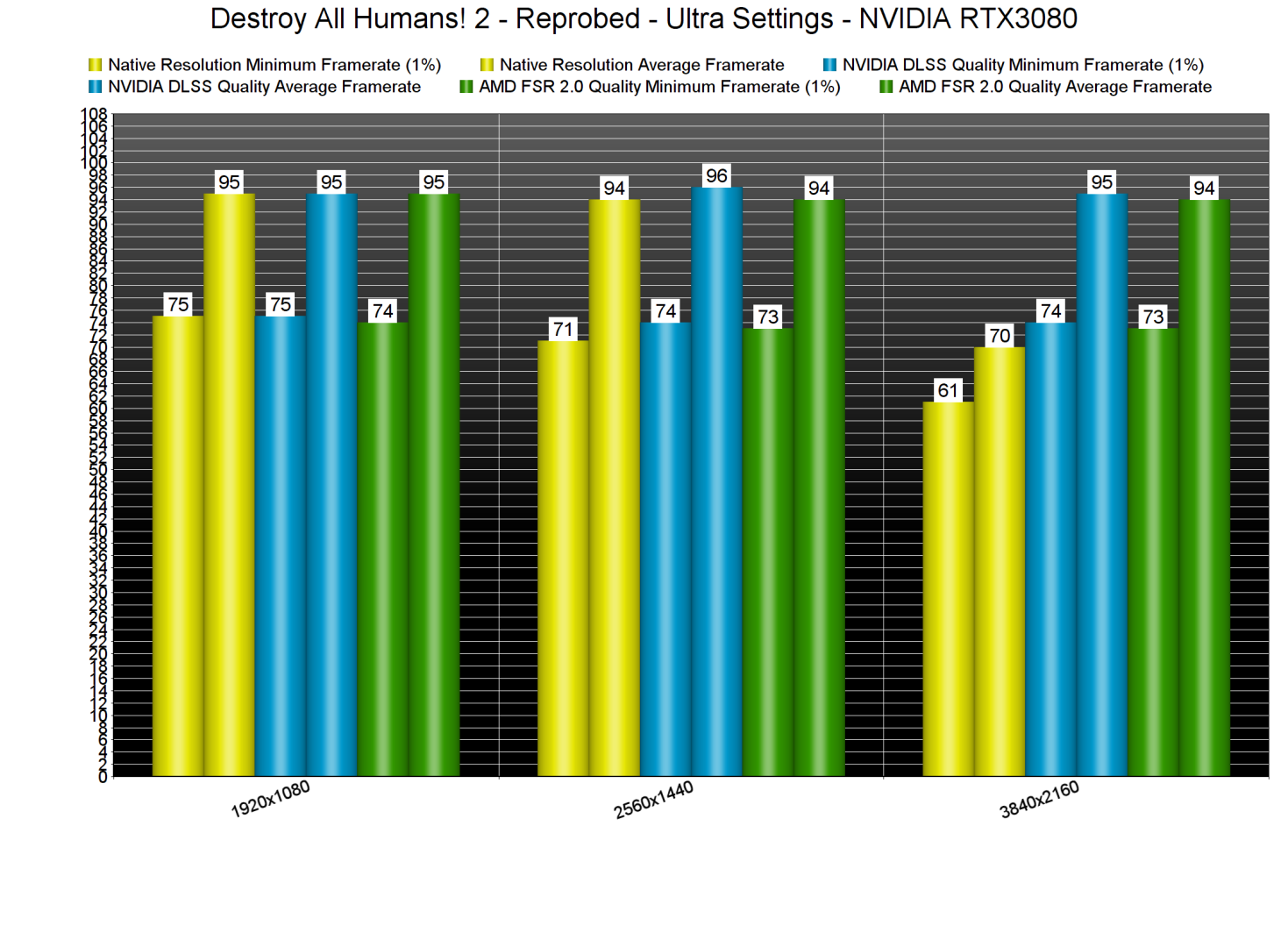

Our benchmark scene puts a lot of pressure on the CPU, and as a result, we were CPU-bottlenecked at both 1080p and 1440p.

To be honest, I was kind of surprised by these CPU requirements. After all, we’ve seen other Unreal Engine 4-powered games that run significantly better than Destroy All Humans! 2 – Reprobed.

The good news is that the Intel i9 9900K had no trouble at all maintaining a constant 70fps. Truth be told, there were scenes in which our framerates skyrocketed to 130fps. However, our demanding benchmark scenario gives us a better idea of how later stages may run. So, if you want framerates above 120fps at all times, you’ll need a high-end modern CPU.

Since we were CPU-bottlenecked, DLSS and FSR made no difference at all at 1080p and 1440p. The most interesting results are therefore those at 4K/Ultra. At native 4K, our RTX 3080 could drop to 61fps at times. By enabling DLSS or FSR, though, we were able to get a respectable performance boost.
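As a rough illustration of why the upscalers only paid off at 4K, here is a minimal Python sketch built on two simplifying assumptions: the delivered framerate is set by whichever of the CPU or the GPU is slower, and the "Quality" presets of both DLSS and FSR 2.0 render at 1/1.5 of the output resolution per axis before upscaling. The helper names are hypothetical, and the model ignores the upscaler's own processing cost.

```python
# Sketch only. Assumptions: framerate = min(CPU-limited fps, GPU-limited fps),
# and the "Quality" presets of DLSS and FSR 2.0 render at 1/1.5 of the
# output resolution per axis. Upscaler overhead is ignored.

QUALITY_SCALE = 1.5  # per-axis ratio: output resolution / internal render resolution


def internal_resolution(out_w: int, out_h: int, scale: float = QUALITY_SCALE) -> tuple[int, int]:
    """Resolution rendered before upscaling (truncated; real implementations may round differently)."""
    return int(out_w / scale), int(out_h / scale)


def delivered_fps(cpu_fps: float, gpu_fps: float) -> float:
    """The slower component caps the framerate, so GPU savings cannot help a CPU-bound game."""
    return min(cpu_fps, gpu_fps)


OUTPUTS = {"1080p": (1920, 1080), "1440p": (2560, 1440), "4K": (3840, 2160)}

for name, (w, h) in OUTPUTS.items():
    iw, ih = internal_resolution(w, h)
    share = (iw * ih) / (w * h)  # fraction of native pixels the GPU still shades
    print(f"{name}: Quality renders at {iw}x{ih} ({share:.0%} of native pixels)")
```

At 4K, the Quality presets shade roughly 44% of the native pixel count, which is where the extra GPU headroom comes from. When the CPU is already the limiting factor, as it was at 1080p and 1440p, that GPU saving cannot raise the framerate.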

Below you can also find some comparison screenshots between native 4K (left), NVIDIA DLSS Quality (middle) and AMD FSR 2.0 Quality (right). NVIDIA’s DLSS tech does wonders in this game, as it can provide a better image than native 4K. Take for instance the distant cables in the first comparison, which appear more defined in the DLSS image. If you have an RTX GPU, you should enable DLSS, as it retains the quality of a 4K image while improving overall performance.

The only downside is some additional specular aliasing, from which both DLSS and FSR suffer. For example, take a look at the metallic parts of the car in the first comparison. You’ll see that there is more aliasing in these areas when using DLSS and FSR. Overall, though, DLSS offers the best image/performance ratio, followed by FSR and then native 4K.

Stay tuned for our PC Performance Analysis which will most likely go live later this week!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
