Rebellion’s Strange Brigade has just been released on the PC and we are happy to report that it is the first game supporting both the DirectX 12 and the Vulkan APIs. As such, and perhaps for the first time, we are able to benchmark these APIs side by side and see how NVIDIA’s and AMD’s hardware perform with them.

For our tests, we used an Intel i7 4930K (overclocked to 4.2GHz) with 8GB RAM, AMD’s Radeon RX Vega 64 and NVIDIA’s GTX980Ti, Windows 10 64-bit and the latest version of the GeForce and Catalyst drivers.

Unlike most DX11 games, Strange Brigade ran silky smooth – and way faster – on AMD’s hardware. This further supports our claims that AMD’s DX11 drivers are currently poorly optimized and come with a significant CPU overhead (at least compared to the ones that NVIDIA is offering for its graphics cards).

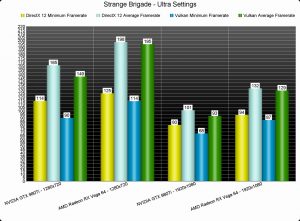

We tested two resolutions: 1280×720 and 1920×1080. The game comes with an in-game benchmark, and for both resolutions we set our graphics settings to Ultra. At both resolutions, the AMD Radeon RX Vega 64 was able to beat the NVIDIA GeForce GTX980Ti by around 30fps.

The AMD Radeon RX Vega 64 did not favour either API in Strange Brigade, as DX12 and Vulkan performed almost identically (DX12 was a bit faster). On the other hand, our NVIDIA GeForce GTX980Ti clearly favoured DX12 over Vulkan. At 720p, DX12 was faster by around 20fps, and at 1080p Microsoft’s API was faster by around 10fps.

Our full PC Performance Analysis will go live later today or tomorrow, so stay tuned for more!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over from consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
