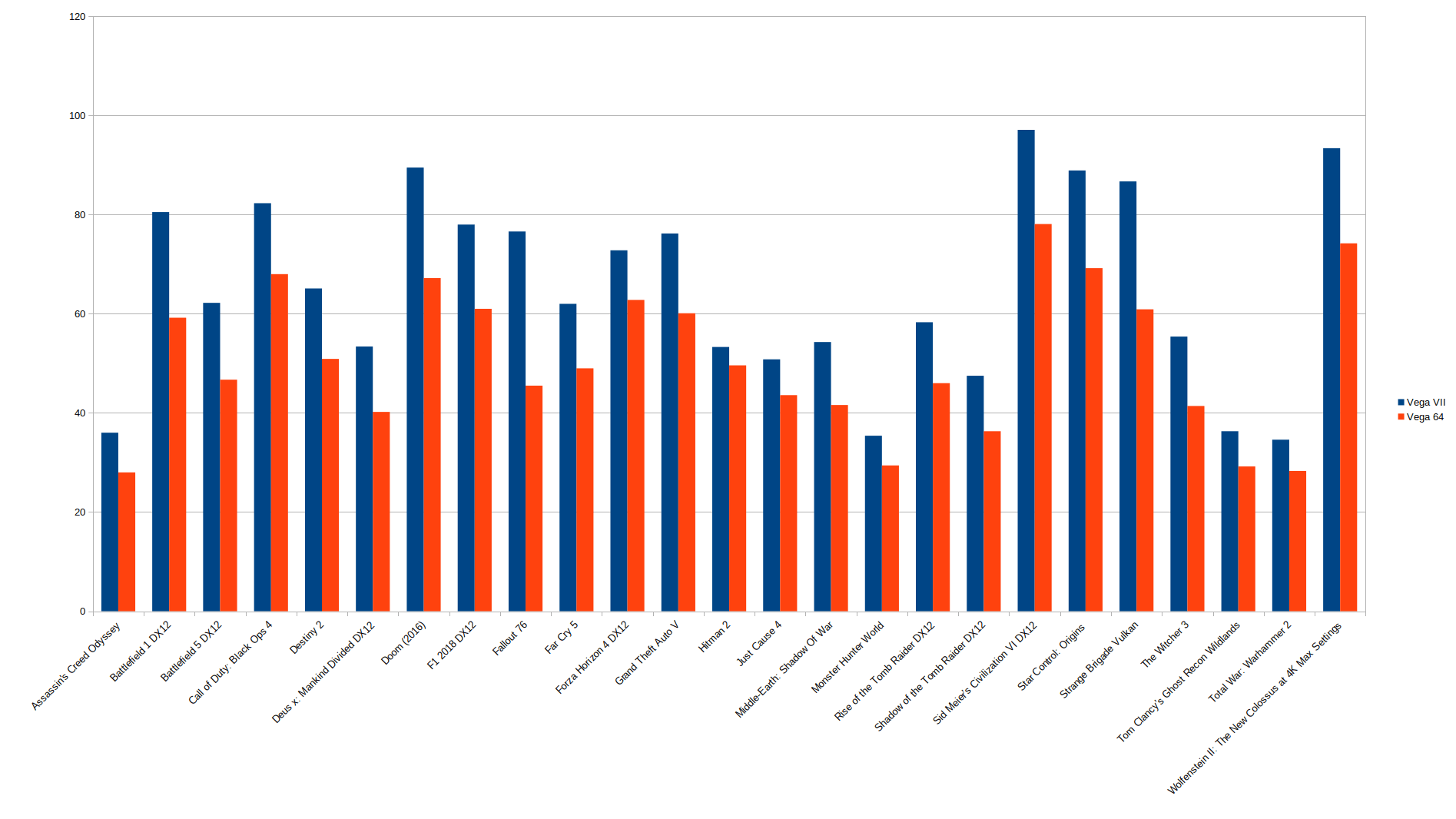

AMD has shared the first official gaming benchmarks for the Radeon VII. The red team has benchmarked the Radeon VII against the Radeon RX Vega 64 in 25 games, and the overall results fall in line with what most of us expected from this new AMD GPU.

Now while AMD claimed that this new GPU targets 4K gaming, it’s obvious that in some titles it cannot come close to delivering that. For example, in Assassin’s Creed Odyssey, Monster Hunter World and Tom Clancy’s Ghost Recon Wildlands, the AMD Radeon VII pushes an average framerate of 36fps (in 4K on max settings), and in Total War: Warhammer 2 it pushes 35fps.

There are some games in which the new AMD Radeon VII can offer a 60fps experience. However, you should keep in mind that these are average and not minimum framerates, meaning that there are most likely dips below 60fps in these games.

Perhaps the most promising benchmarks are those for Battlefield 1 DX12, Call of Duty Black Ops 4, Doom, Star Control Origins, Strange Brigade and Wolfenstein 2, in which the AMD Radeon VII could push more than 80fps.

For these benchmarks, AMD used an Intel Core i7-7700K with 16GB of DDR4-3000 memory, and ran the games on max settings in 4K.

The AMD Radeon VII releases on February 7th and will be priced at $699. We are pretty sure that there will be some third-party benchmarks prior to its launch, so stay tuned for more!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over from consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
