AMD appears to have worked with Fortnite Creative island modder @MAKAMAKES to create a custom Battle Arena in Fortnite Creative. This leak comes via HotHardware.

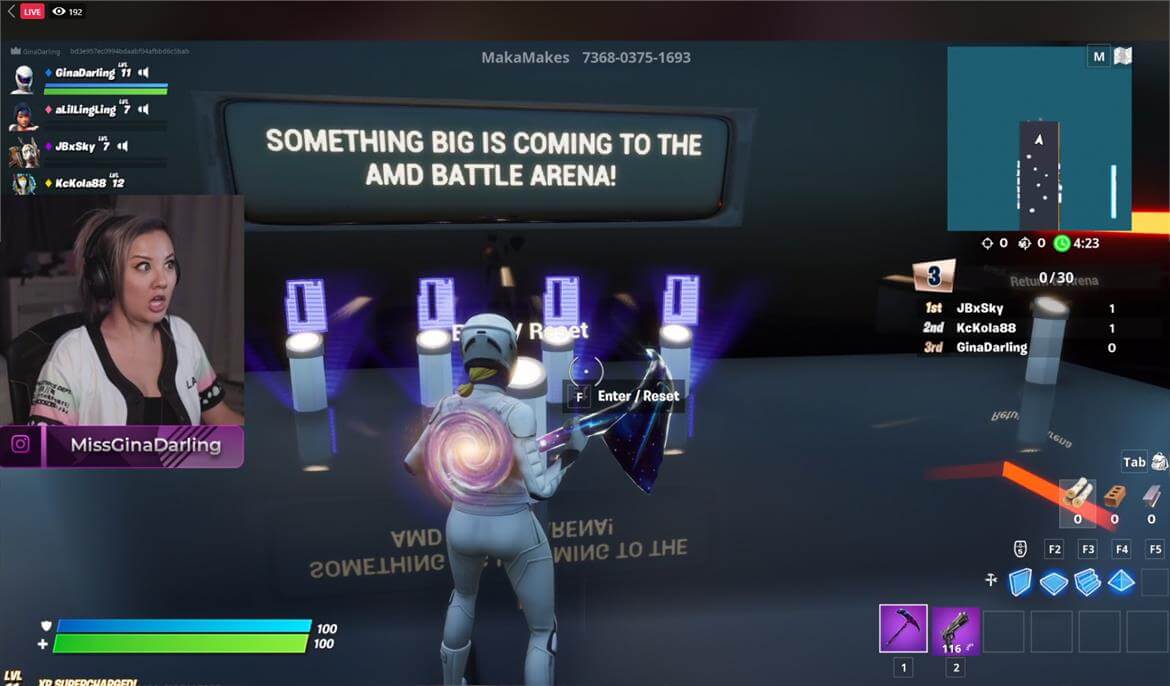

Now, AMD-sponsored Fortnite streamer @MissGinaDarling has discovered an Easter egg in one of the new Fortnite maps. The Easter egg appears to be a hidden quest that requires the code "6000" to be entered.

Players can choose between three different game modes, and one of the maps appears to contain a baked-in Easter egg that references Big Navi's model numbering, or at least that appears to be the case for now. At the end, a message is shown that reads: “SOMETHING BIG IS COMING TO THE AMD BATTLE ARENA!” This could well be a reference to the upcoming Big Navi GPU, and an indirect confirmation from AMD.

To quote Tom's Hardware, “AMD’s Scott Herkelman, CVP & GM at AMD Radeon, congratulated Gina on finding the easter egg, which surely has to mean something.”

The 6000 numbering makes sense as the logical successor to the current AMD Radeon RX 5000 series of cards. Fortnite’s AMD Easter egg is hidden in the AMD Battle Arena map, which is essentially an AMD-themed stadium. We expect the Big Navi consumer graphics cards to launch before the next-generation consoles, which are based on the same RDNA 2 architecture, hit the market. The next-gen consoles are scheduled to launch in November, so we expect AMD to announce the Big Navi cards around that time.

Right under your noses 🙂 got to wonder what else we've already put out there that you just haven't discovered yet… https://t.co/xaDmeipUyu

— Frank Azor (@AzorFrank) September 4, 2020

According to HotHardware:

“To figure out how this Easter egg was found, you also have to get into the AMD Battle Arena. The AMD Battle Arena map is a Fortnite map where AMD fans get to duke it out in an AMD-themed stadium. You must get into either a Free-For-All or Capture The Flag Map to find the Easter egg.” “In any case, if you want to get into these games and find the Easter egg for yourself, you can do so by going into creative mode and entering the code 8651-9841-1639 to get to the map”.

Alongside the Easter egg, a contest is also running: users who capture an in-game action clip and share it on social media with the tag #AMDSweepstakesEntry get a chance to win an AMD-powered Maingear PC.

Hello, my name is Nick Richardson. I’ve been an avid PC and tech fan since the good old days of the RIVA TNT2 and 3dfx Interactive “Voodoo” gaming cards. I mostly love playing first-person shooters, and I’ve been a die-hard fan of the FPS genre since the good old Doom and Wolfenstein days.

Music has always been my passion and my roots, but I started gaming “casually” when I was young, on Nvidia’s GeForce3 series of cards. I’m by no means an avid or hardcore gamer, but I just love everything related to PCs, games, and technology in general. I’ve been involved with many indie metal bands worldwide and have helped them promote their albums with record labels. I’m a very broad-minded, down-to-earth guy. Music is my inner expression and my soul.
