Square Enix has just released Final Fantasy 16 on PC. As I’ve said before, even though the game does not have any Ray Tracing effects, it’s quite demanding at 4K/Max Settings. The good news is that FF XVI supports NVIDIA DLSS 3, AMD FSR 3.0 and Intel XeSS. As such, we’ve decided to benchmark and compare them.

For these first benchmarks, we used an AMD Ryzen 9 7950X3D, 32GB of DDR5 at 6000MHz, and NVIDIA’s RTX 4090. We also used Windows 10 64-bit, and the GeForce 561.09 driver. Moreover, we’ve disabled the second CCD on our 7950X3D.

Final Fantasy XVI does not have a built-in benchmark tool. So, for our tests, we used the first Titan fight and the garden/palace area. These areas appear to be among the most demanding locations. As such, these are the areas we’ll also use for our upcoming PC Performance Analysis.

Let’s start with some comparison screenshots. Native 4K is on the left, NVIDIA DLSS 3 Quality is in the middle, and AMD FSR 3.0 is on the right.

At first glance, NVIDIA DLSS 3 Quality appears to be slightly blurrier than Native 4K. However, DLSS 3 Quality offers way better anti-aliasing. Take a look at how much smoother the window looks in the following comparison. Or how much better the tent ropes look. Thanks to its superior AA, DLSS 3 Quality looks, overall, better than Native 4K.

In this game, AMD FSR 3.0 suffers from major artifacts. While we did not notice major ghosting, almost all of the particles are a complete mess. Look how pixelated and “low-res” the whole scene looks with FSR 3.0 in the following comparison. Intel XeSS does not suffer from this issue. As you can see in the second comparison, Intel XeSS handles the game’s particles better.

So, if you have an RTX GPU, you should stick with NVIDIA DLSS 3 as its implementation is great. If you don’t have an RTX GPU but you can maintain 60fps with Super Resolution, you should use Intel XeSS over AMD FSR 3.0. AMD FSR 3.0 with Frame Generation should be your last option.

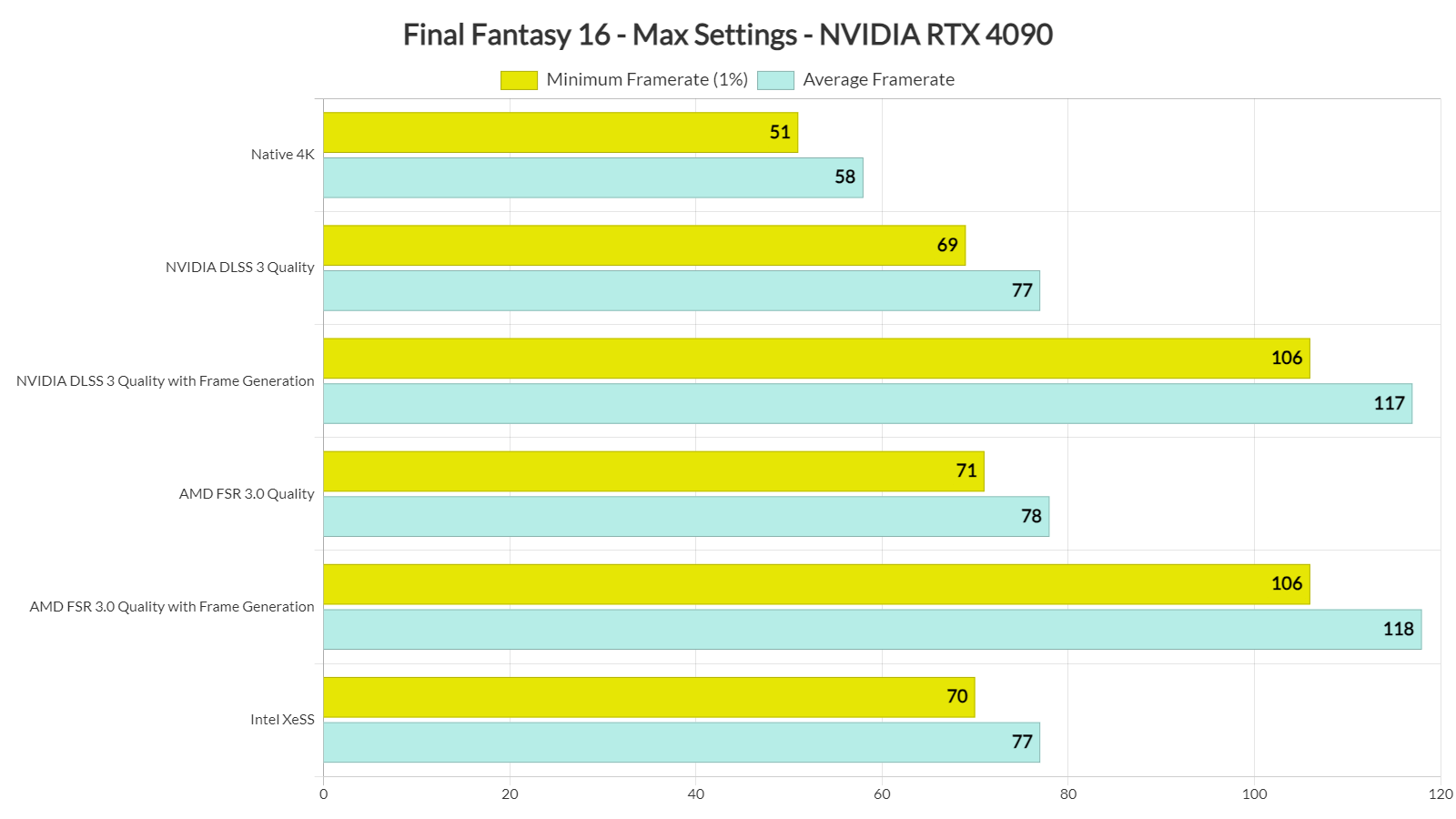

Performance-wise, all upscaling techniques perform the same. This is a surprise as in numerous games, NVIDIA DLSS 3 can be slower than AMD FSR 3.0. That’s not the case in this title though.

With DLSS 3 Quality Super Resolution, our NVIDIA RTX 4090 could reach frame rates above 70fps. Then, when we used Frame Generation, we got over 100fps all the time.
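For context, here is a quick sketch of what a "Quality" preset means at 4K. It assumes the commonly documented 1.5x per-axis scale factor for the DLSS and FSR Quality modes. We have not verified the exact ratios this game uses, and the function name is just an illustrative helper.

```python
def internal_resolution(out_width, out_height, scale):
    """Return the internal render resolution for a per-axis upscale factor."""
    return round(out_width / scale), round(out_height / scale)


# Assumption: Quality mode uses a 1.5x per-axis scale factor.
QUALITY_SCALE = 1.5

width, height = internal_resolution(3840, 2160, QUALITY_SCALE)
pixel_share = (width * height) / (3840 * 2160)

print(f"Internal resolution: {width}x{height}")
print(f"Share of native 4K pixels rendered: {pixel_share:.1%}")
```

Under that assumption, the game renders at 2560x1440 before the upscaler reconstructs the 4K image, which is less than half of the native pixel count.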

In my opinion, the best way to play FF XVI on an NVIDIA RTX 4090 is with DLAA and DLSS 3 Frame Generation. With this combo, you’ll get frame rates higher than 80fps at all times. And, thanks to DLAA, you’ll get a great image.

Our PC Performance Analysis will go live later this week. So, stay tuned for more!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
