Bethesda has just lifted the review embargo for Indiana Jones and the Great Circle. The game is powered by the latest version of idTech, so it’s time to benchmark it and examine its performance on the PC.

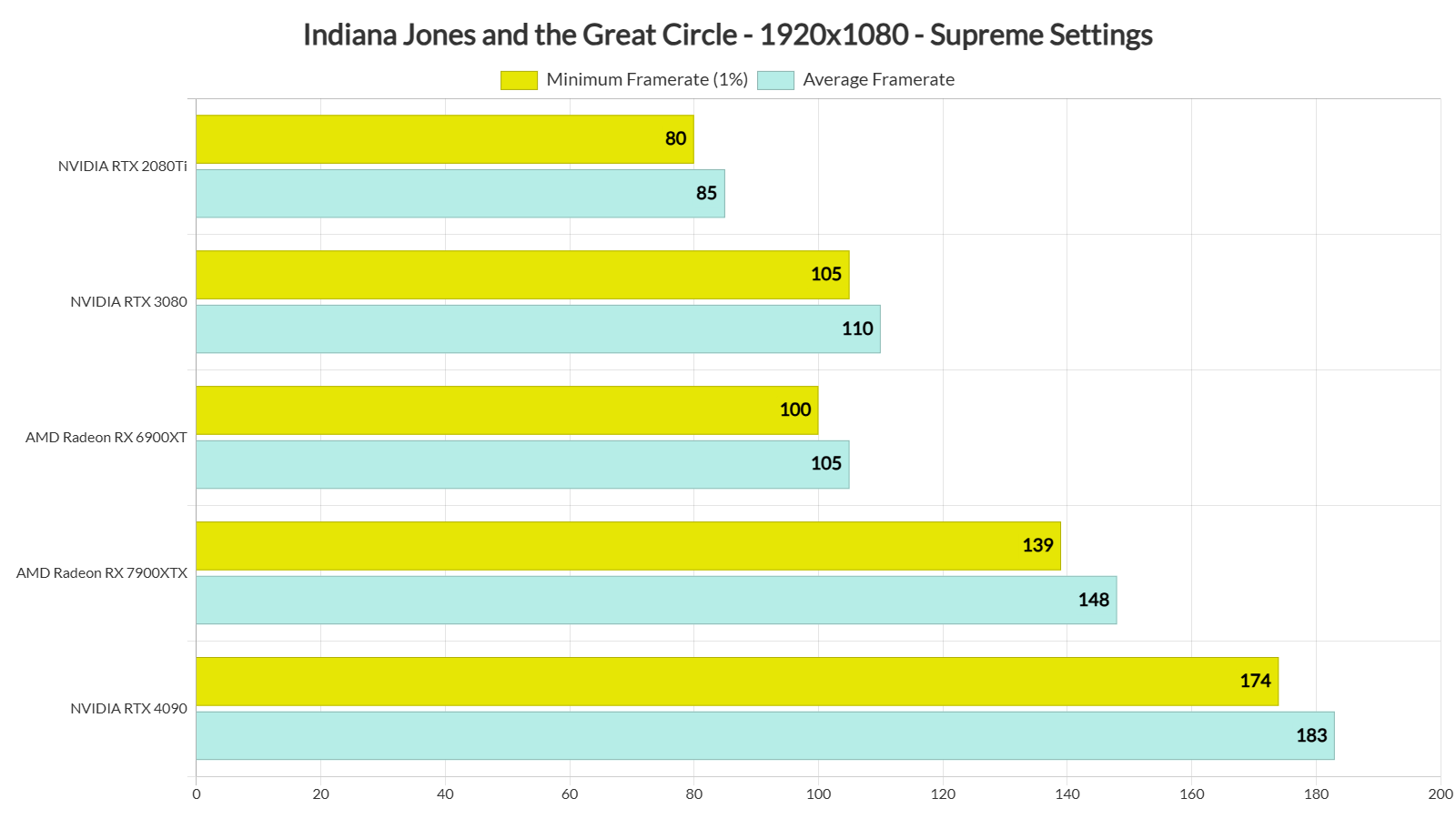

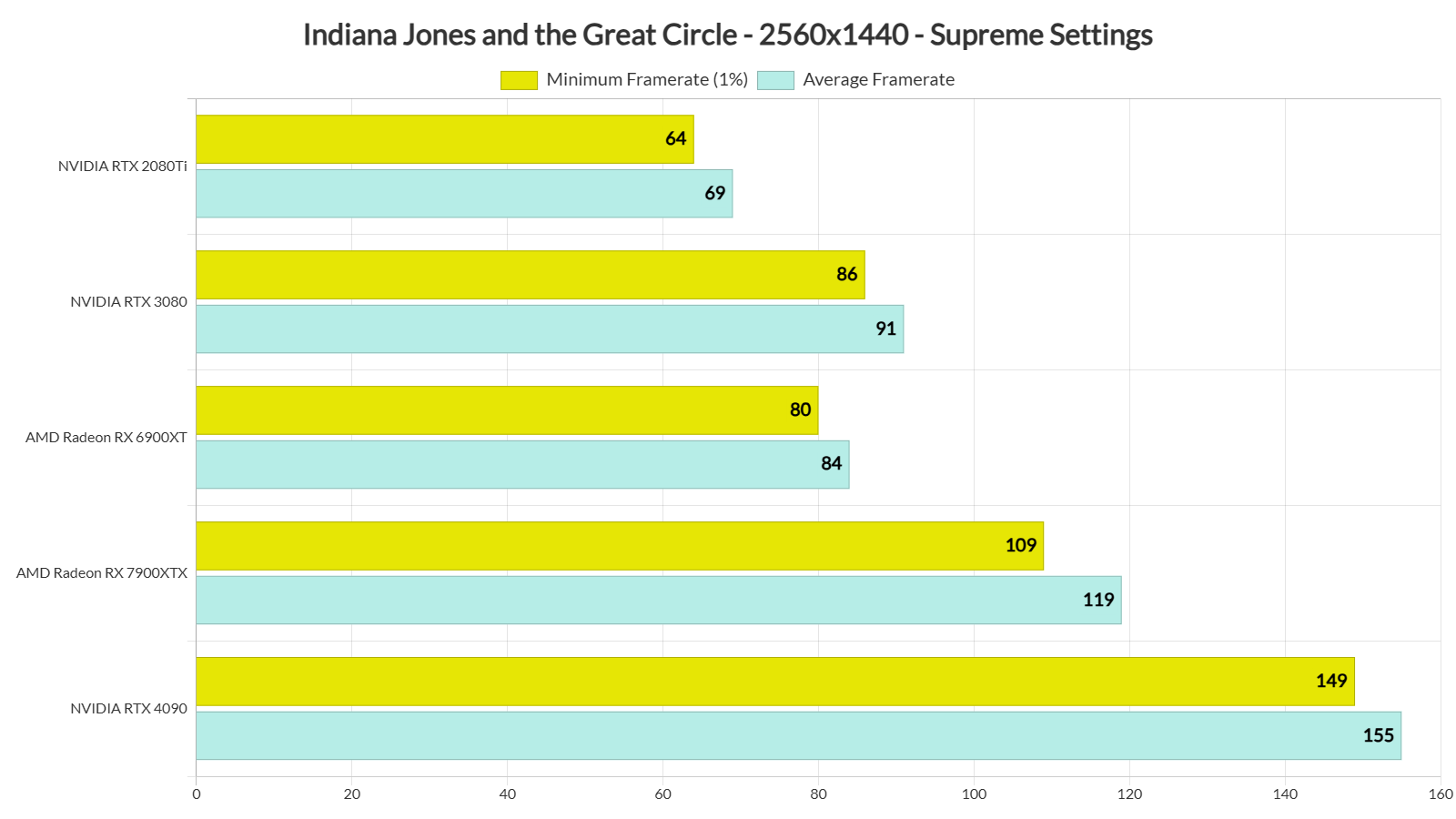

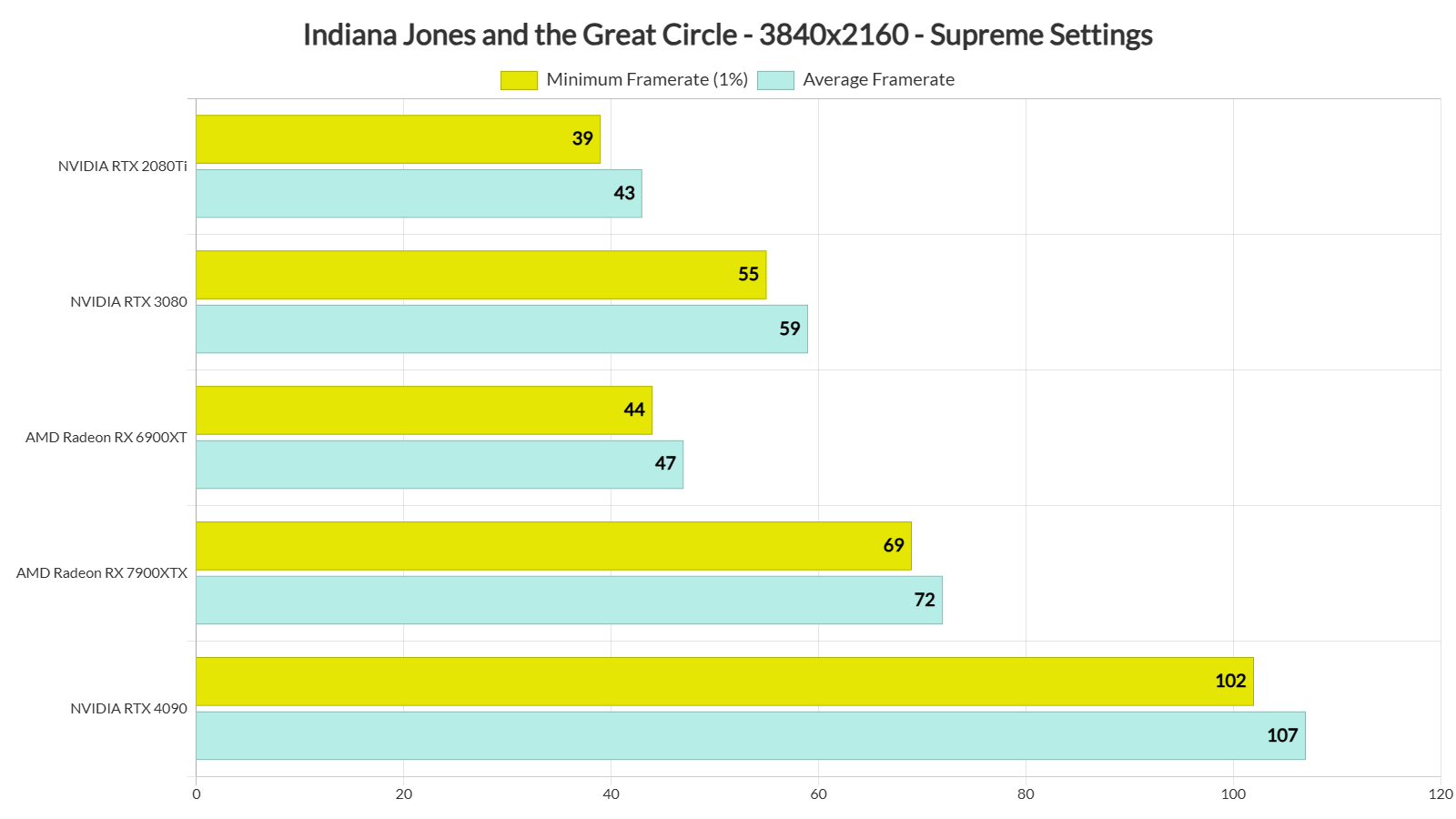

For our benchmarks, we used an AMD Ryzen 9 7950X3D, 32GB of DDR5 at 6000MHz, AMD’s Radeon RX 6900XT and RX 7900XTX, as well as NVIDIA’s RTX 2080Ti, RTX 3080 and RTX 4090. We also used Windows 10 64-bit, the GeForce 566.14 and the Radeon Adrenalin Edition 24.10.1 drivers. Moreover, we’ve disabled the second CCD on our 7950X3D.

Indiana Jones and the Great Circle requires a GPU that supports hardware Ray Tracing. As such, the game could not launch at all on the AMD Radeon RX580, Vega 64 and NVIDIA GTX980Ti.

MachineGames has added a lot of graphics settings to tweak. PC gamers can adjust the quality of Texture Pool Size, Shadows, Global Illumination, Water, Hair, Volumetrics and more. There are also options to disable Chromatic Aberration, Film Grain, Motion Blur and DoF. The game also supports DLSS 3 with Frame Generation. However, there is no support for AMD FSR 3.0 or Intel XeSS. That’s a bummer as it shouldn’t be that hard to support those techs (as there is already support for DLSS 3).

I should also note that the game does not currently support Full Ray Tracing/Path Tracing. NVIDIA told us that the devs will add support for Path Tracing on December 9th. Now, although there is no support for Path Tracing, the game uses Ray Traced Global Illumination by default. That’s why it requires a GPU that supports hardware Ray Tracing. Plus, the final version will not have Denuvo (even though the review build we’re benchmarking has it).

MachineGames has not included any built-in benchmark tool. So, for our tests, we used the “Castel Sant’ Angelo” Mission. This mission appeared to be more demanding than all the previous areas. So, it should give us a pretty good idea of how the rest of the game runs.

During the first two hours, there wasn’t any scene that could tax the CPU. As such, we were GPU-limited the entire time, even at 1080p/Supreme. This is why we won’t have any CPU benchmarks (I may add them at a later date if I find a scene that can stress the CPU). Just take a look at the CPU core usage in the following screenshots.

All of our GPUs were able to push framerates higher than 80FPS at both 1080p/Supreme and 1440p/Supreme. Yes, even the NVIDIA RTX2080Ti can provide a smooth gaming experience at Native 1440p. This is great news, as it means the game will run well on a wide range of GPUs.

As for Native 4K/Supreme, the only GPUs that were able to provide a smooth gaming experience were the AMD Radeon RX 7900XTX and the NVIDIA RTX 4090. The NVIDIA RTX 4090 is obviously in a league of its own, but the AMD Radeon RX 7900XTX was also able to push framerates over 69FPS at all times.

Graphics-wise, Indiana Jones and the Great Circle looks absolutely stunning in close-ups. The character models and the environments look amazing. RTGI also makes the game look incredible. Just look at some of the indoor screenshots below (which showcase what RTGI can do). This is why more and more games should be using RTGI from the get-go. At times, Indiana Jones and the Great Circle pushes some of the best visuals I’ve seen.

However, the game suffers from A LOT of pop-ins. Right now, there are lighting, shadow and object pop-ins. Lighting and shadows form right in front of you. This is something you can clearly see in the following video. Due to these pop-ins, the game may put off some PC gamers. I can’t stress enough how aggressive the LODs are in this game. This is something I really hope Path Tracing will address when it becomes available.

It’s also worth noting that some objects have really low-resolution textures. From the looks of it, a bug is preventing some objects from using higher-quality textures. Here’s an example. Take a look at those awful plants. This bug occurs on both AMD and NVIDIA GPUs. Bethesda is aware of it, and is working on a fix.

All in all, Indiana Jones and the Great Circle runs incredibly well on the PC. A lot of GPUs will be able to run it with over 60FPS at both Native 1080p and 1440p. And, thanks to DLSS 3, NVIDIA RTX40 series owners can enjoy it with over 140FPS at 4K/Supreme with DLAA and Frame Generation. Oh, and the game does not suffer from any shader compilation or traversal stutters. This is a silky smooth game.

The only downside is the awful pop-ins that occur right in front of you. I don’t know why MachineGames did not provide higher LOD options for the Supreme settings. It’s a shame really because in the big outdoor environments, the constant pop-in can become really annoying.

Enjoy and stay tuned for more!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
