MSI appears to have leaked the first gaming benchmarks for the upcoming AMD Ryzen 9 9950X3D CPU. Since these are coming straight from MSI, they are legit. As with most "first-party" benchmarks, though, we suggest taking them with a grain of salt. So, let's dive in.

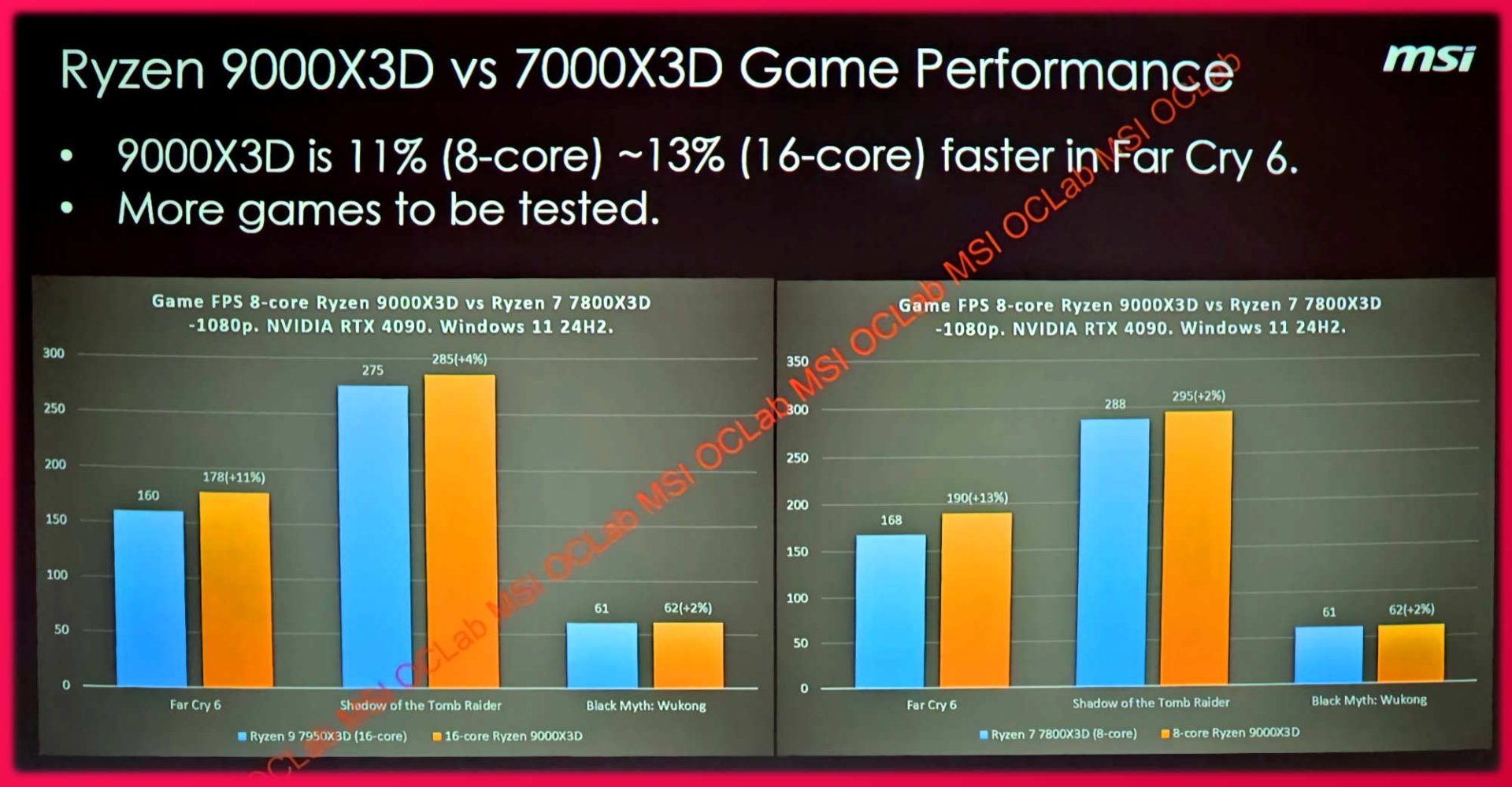

MSI has benchmarked three games at 1080p: Black Myth: Wukong, Shadow of the Tomb Raider, and Far Cry 6. MSI has not named the CPUs, but its graphs clearly include both the AMD Ryzen 7 9800X3D and the Ryzen 9 9950X3D. This is obvious from the results themselves and from the CPUs against which MSI compares the new Ryzen 9000X3D chips.

So, the AMD Ryzen 9 9950X3D appears to be 11% faster than the AMD Ryzen 9 7950X3D in Far Cry 6, 4% faster in Shadow of the Tomb Raider, and 2% faster in Black Myth: Wukong. The AMD Ryzen 7 9800X3D, on the other hand, seems to be 13% faster than the AMD Ryzen 7 7800X3D in Far Cry 6, and 2% faster in both Shadow of the Tomb Raider and Black Myth: Wukong.

Now, I know that the SOTR and Black Myth: Wukong results may disappoint some gamers. However, there are some things you should keep in mind.

You see, the built-in benchmarks for both Shadow of the Tomb Raider and Black Myth: Wukong are mostly GPU-bound, even at 1080p. So, even though MSI has used an NVIDIA RTX 4090, we are basically looking at GPU bottlenecks in these two games.

On the other hand, we know that Far Cry 6 is a game that relies heavily on one CPU core/thread. That was one of our biggest gripes with it when it came out. Due to this CPU optimization issue, FC6 is CPU-bound even at 1080p or 1440p on high-end GPUs like the RTX 4090. So, a performance increase of 11-13% is great news.

Again, these appear to be early gaming benchmarks, so take them with a grain of salt. My advice is to wait for third-party gaming benchmarks.

Rumor has it that AMD will reveal or launch its new 3D V-Cache CPUs on October 25th. As with all rumors, though, I suggest taking it with a grain of salt. Man, there is a lot of salt in this article, right?

Anyway, although the benchmarks are legit as they come from MSI, the release date rumor seems fishy.

Stay tuned for more!

Thanks Hardwareluxx

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over from consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, the Voodoo 2. John has also written a higher degree thesis on "The Evolution of PC graphics cards."
