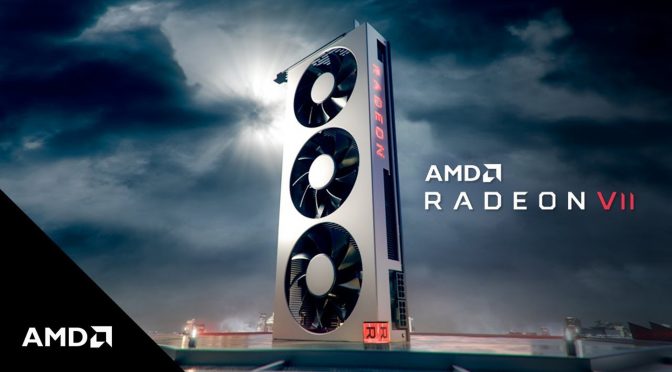

The embargo on AMD Radeon VII reviews will lift later today, and it appears that the first gaming benchmarks for the card have leaked online. These benchmarks come from HD-Technologia and, from the looks of it, AMD’s latest high-end GPU offers similar performance to the NVIDIA GeForce RTX 2080.

HD-Technologia used an Intel Core i7-7700K (4.2GHz) with an ASUS Z270F Gaming motherboard, 16GB (2x8GB) of Corsair Vengeance LPX DDR4-3000 memory and a 2TB Seagate Barracuda 7200 RPM hard drive. The drivers were AMD 18.50-RC14-190117 and NVIDIA 417.71 WHQL.

As you’d expect, the NVIDIA GeForce RTX 2080 wins some benchmarks that favour NVIDIA’s GPUs and the AMD Radeon VII wins others that favour AMD’s graphics cards. For the most part, though, and at least according to these benchmarks, the two GPUs offer similar performance.

Now what’s really important to note here is that, unlike the RTX 2080, the AMD Radeon VII does not offer support for real-time ray tracing. Furthermore, while DirectML could be used for super sampling, developers have not experimented with it yet. As such, DLSS may be used in more games, giving the RTX 2080 an edge over the AMD Radeon VII.

Both the AMD Radeon VII and the NVIDIA GeForce RTX 2080 have an MSRP of $699 and, according to some reports, overall availability for the AMD Radeon VII will be limited. As such, I don’t really know why someone would choose AMD’s offering over NVIDIA’s, as the latter comes with more features at the same price and with the same performance.

But anyway, I’m pretty sure that more publications will reveal their benchmarks later today. Until then, here are the benchmarks from HD-Technologia.

Kudos to our reader Metal Messiah for bringing this to our attention!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
